DOI PDF Video Slides Press

- MIT News

- 3D Natives

- VoxelMatters

- IndiaAI

- AutoGPT

- 3DPrint.com

- 3D Print and Design

- Design for AM

- 3DPrinting.com

- TechTimes

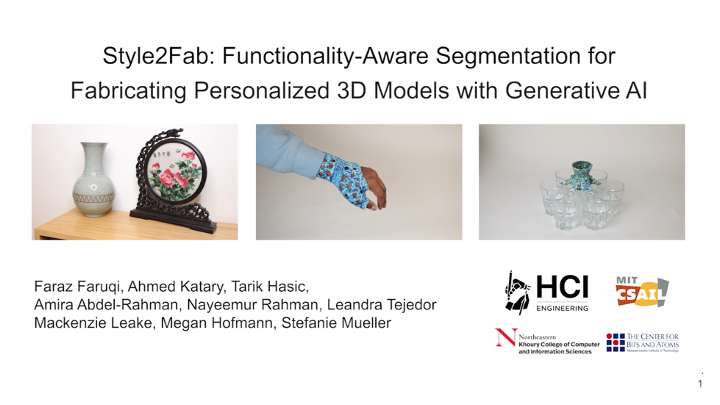

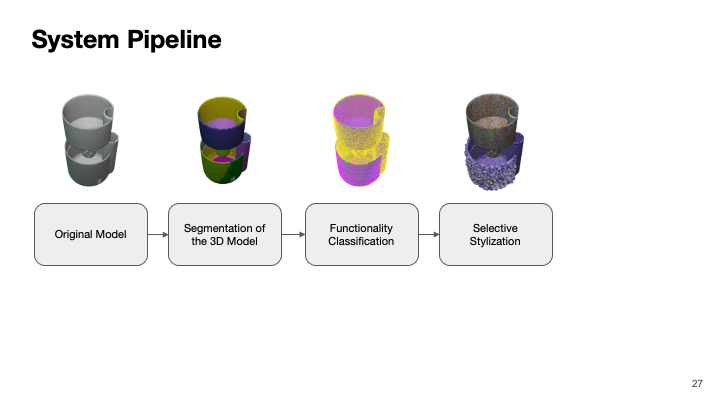

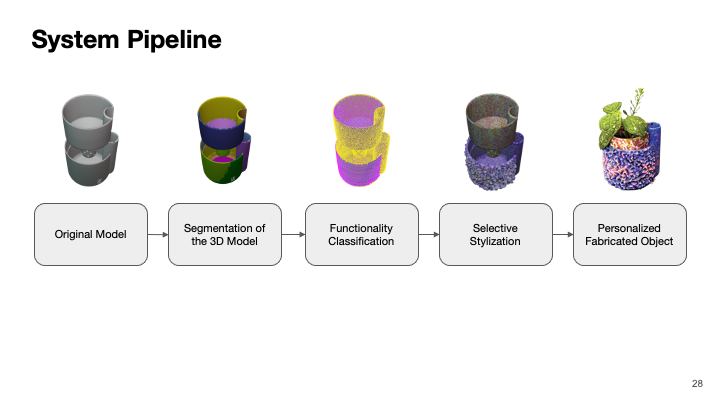

Style2Fab: Functionality-Aware Segmentation for Fabricating Personalized 3D Models with Generative AI

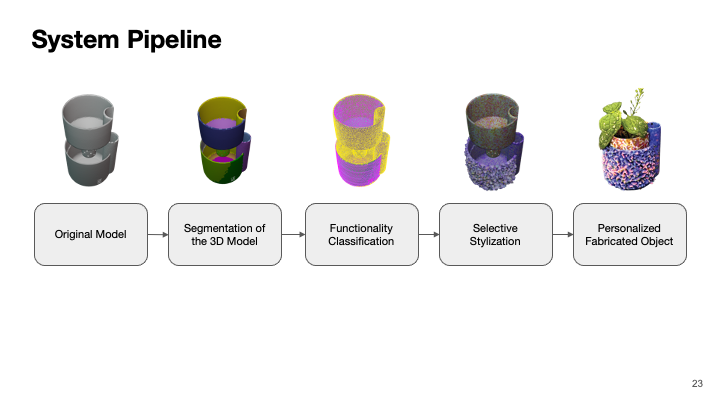

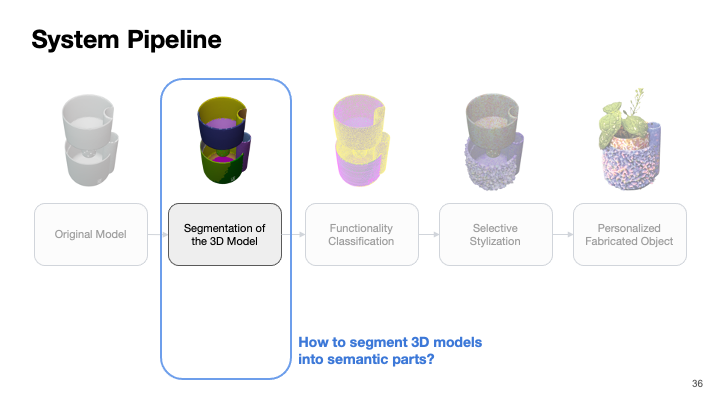

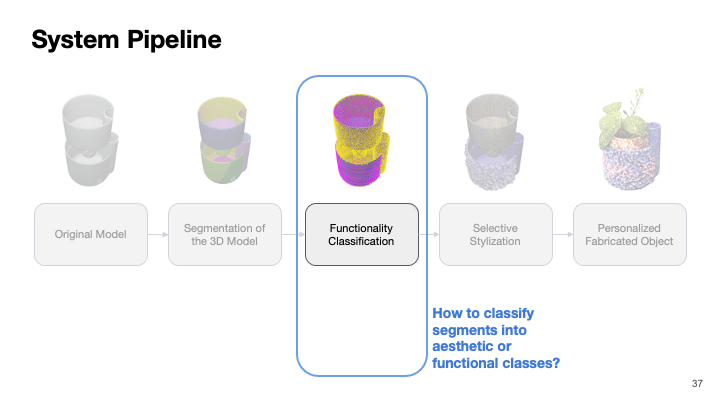

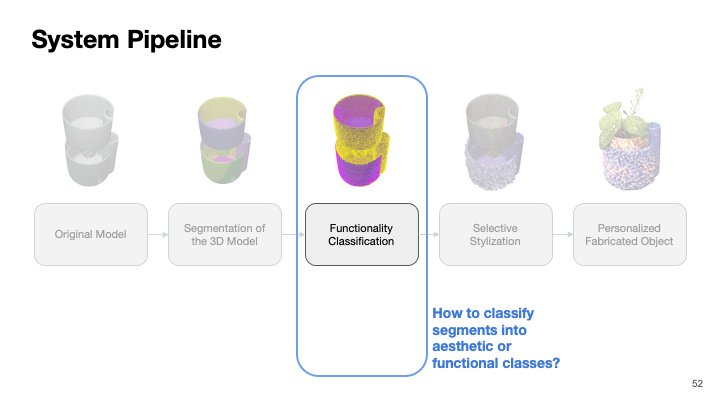

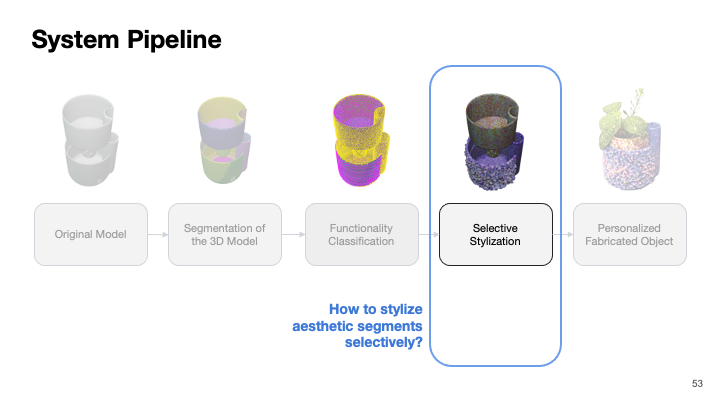

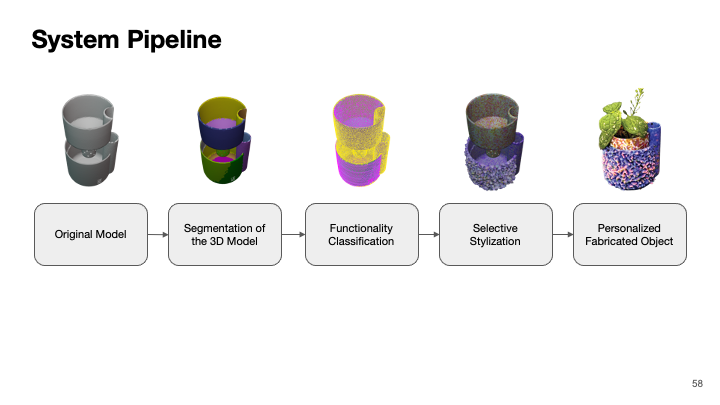

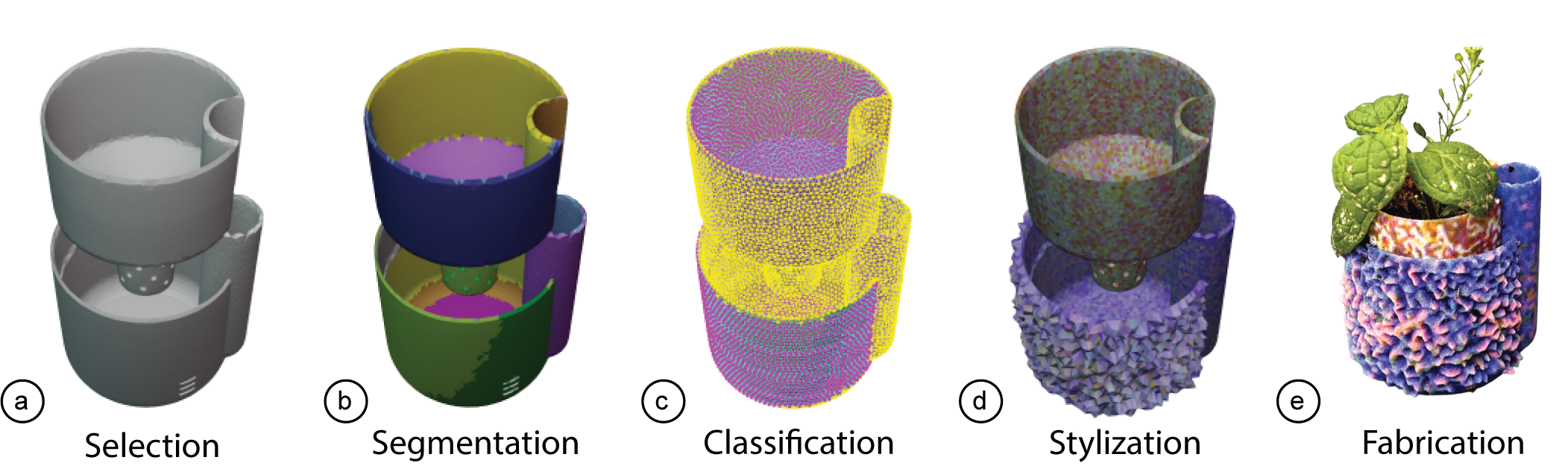

Figure 1. To stylize a 3D model with Generative AI without affecting its functionality, a user: (a) selects a model to stylize, (b) segments the model, (c) automatically classifies the aesthetic and functional segments, (d) selectively styles only the aesthetic segments, and (e) fabricates their stylized model.

ABSTRACT

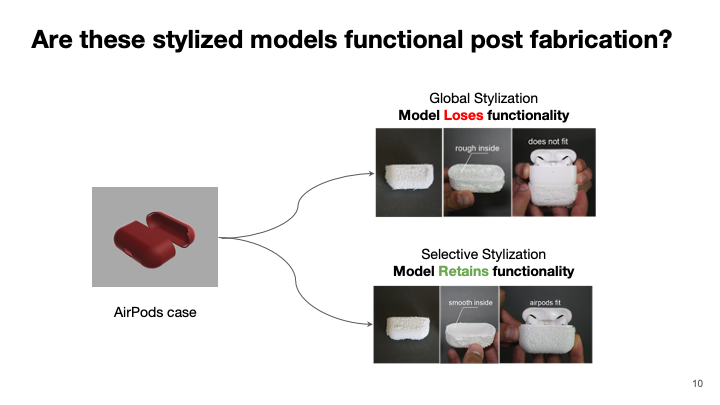

With recent advances in Generative AI, it is becoming easier to automatically manipulate 3D models. However, current methods tend to apply edits to models globally, which risks compromising the intended functionality of the 3D model when fabricated in the physical world. For example, modifying functional segments in 3D models, such as the base of a vase, could break the original functionality of the model, thus causing the vase to fall over. We introduce a method for automatically segmenting 3D models into functional and aesthetic elements. This method allows users to selectively modify aesthetic segments of 3D models, without affecting the functional segments. To develop this method we first create a taxonomy of functionality in 3D models by qualitatively analyzing 1000 models sourced from a popular 3D printing repository, Thingiverse. With this taxonomy, we develop a semi-automatic classification method to decompose 3D models into functional and aesthetic elements. We propose a system called Style2Fab that allows users to selectively stylize 3D models without compromising their functionality. We evaluate the effectiveness of our classification method compared to human-annotated data, and demonstrate the utility of Style2Fab with a user study to show that functionality-aware segmentation helps preserve model functionality.

INTRODUCTION

A key challenge for many makers is modifying or “stylizing” open source designs shared in online repositories (e.g., Thingiverse). While these platforms provide numerous ready-to-print 3D models, customization is limited to changing predefined parameters. Although recent advances in deep-learning methods enable aesthetic modifications in 3D models with styles, customizing existing models with these styles presents new challenges. Beyond aesthetics, 3D printed models often have designed functionality that is directly related to geometry. Manipulating an entire 3D model, which can change the whole geometry, may break this functionality. Styles can be selectively applied, but this requires the maker to identify which pieces of a 3D model affect the functionality and which are purely aesthetic, a daunting task for users remixing unfamiliar designs. In some cases, users can label functionality in CAD tools; however, most of the models shared in online repositories are 3D models that have lost this key metadata.

To help makers make use of emerging AI-based 3D manipulation tools, we present a method that automatically decomposes 3D meshes designed for 3D printing into components based on their functional and aesthetic parts. This method allows makers to selectively stylize 3D models while maintaining the desired original functionality. Derived from a formative study of 1000 designs on the Thingiverse repository, we contribute a taxonomy for classifying geometric components of a 3D mesh as (1) aesthetic, contributing only to model aesthetics; (2) internally-functional, related to assembly of component-based models; or (3) externally-functional, related to an interaction with the environment. Based on this taxonomy, we contribute a topology-based method that can automatically segment 3D meshes, and classify the functionality of those segments into these three categories. To demonstrate this method, we present an interactive tool, “Style2Fab”, that enables makers to manipulate 3D meshes without modifying their functionality. Style2Fab uses differentiable rendering for stylization as proposed in Text2Mesh. Our work demonstrates how we extend these methods to enable complex manipulation of open-source 3D meshes for 3D printing without modifying their original functionality.

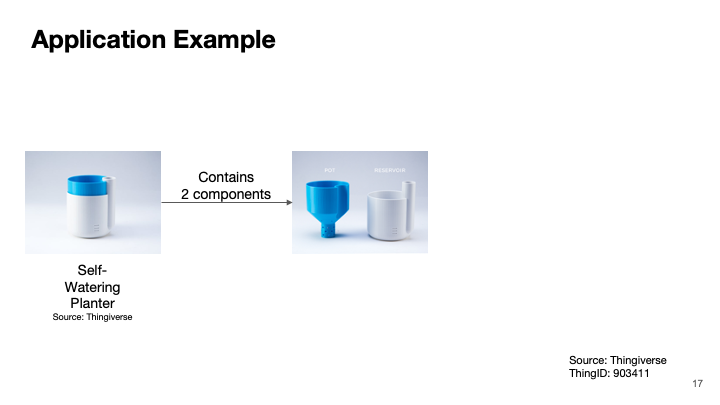

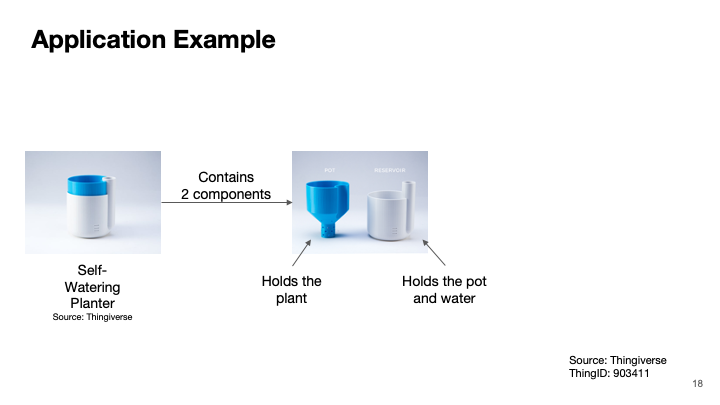

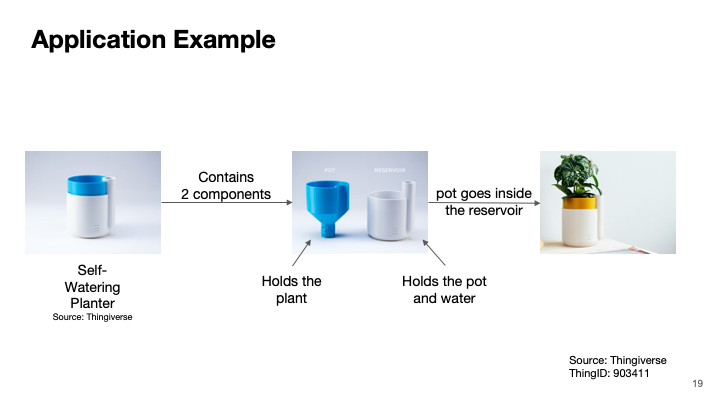

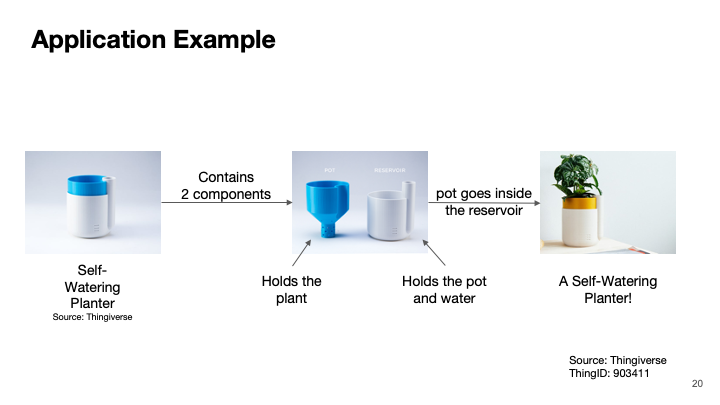

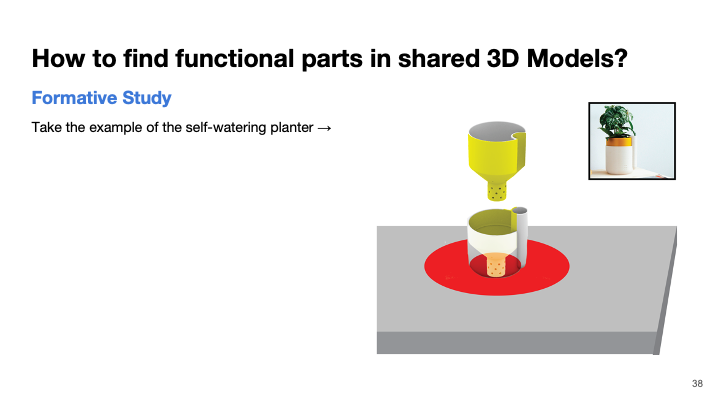

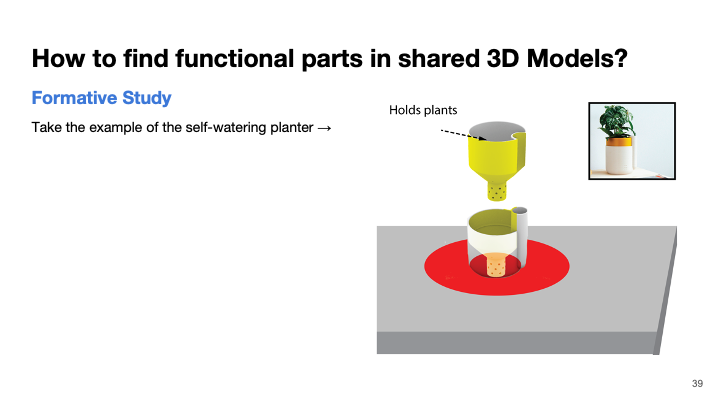

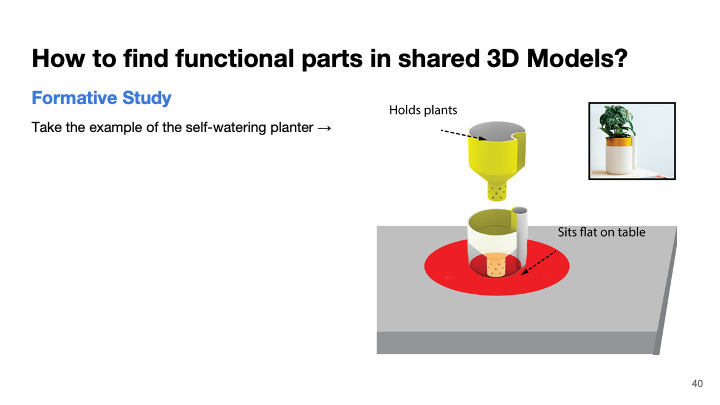

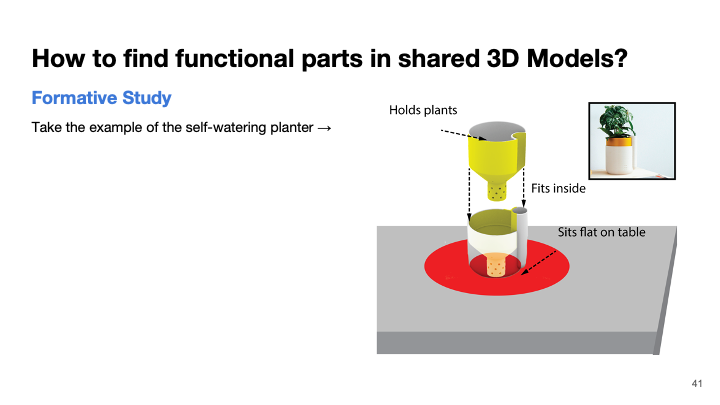

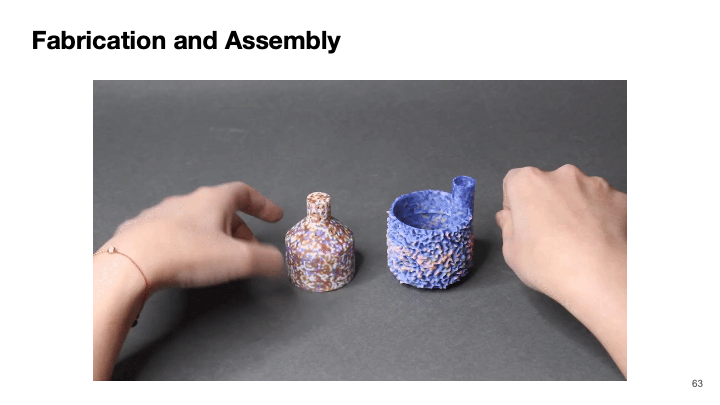

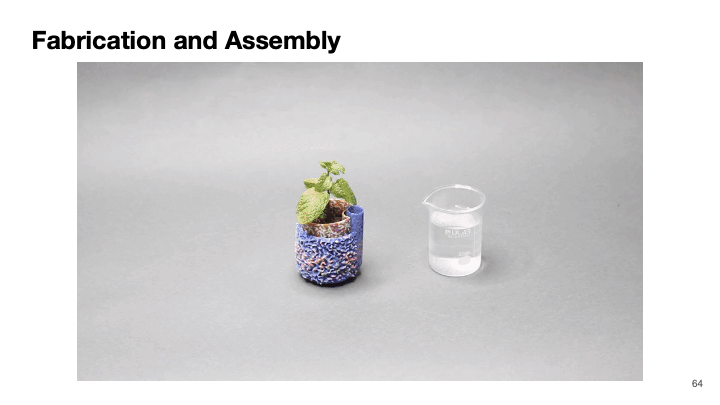

Consider a leading scenario where an inexperienced maker, Alex, wants to stylize the outside of a 3D printable self-watering planter (Figure 1). Conceptually, Alex understands that the base needs to stay flat and the interlocking segments of the two components of the model should remain unchanged. But she does not know how to isolate these regions in the two 3D meshes. She processes the models in Style2Fab, where our functionality-aware segmentation method segments the two models, and labels the base and interlocking segments as functional. Our method has done the work of tediously editing the model for her, allowing her to verify that the model’s functionality was preserved on the segment level. She applies her style only to the outer edge of the planters and sends it off to the 3D printer. She uses the final design to grow herbs on her desk.

In the remaining sections, we present a formative study on 3D model functionality in the context of 3D printed designs shared online. We then present our method for automatically segmenting and classifying the functional components of 3D models. Next, we present the Style2Fab system which uses this segmentation method to help makers stylize 3D printable designs. We use Style2Fab to evaluate if our classification and segmentation method can help makers modify existing designs without breaking their functionality.

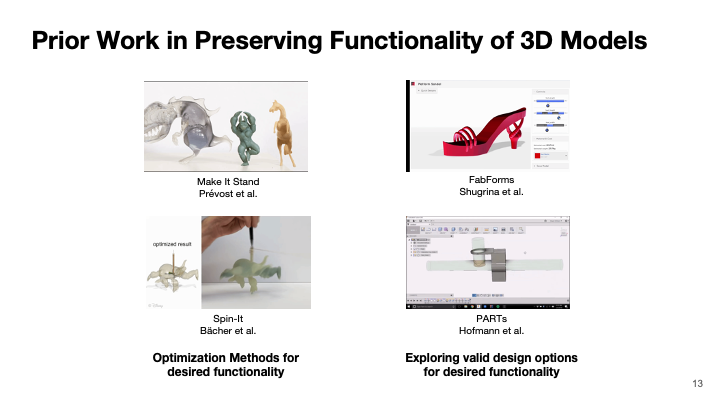

FORMATIVE STUDY

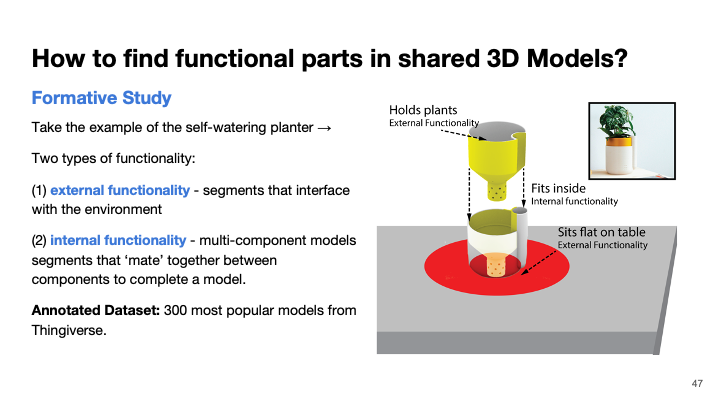

One of the key challenges in modifying 3D printable models is ensuring that they remain functional. This requires a maker to carefully identify which parts of a design contribute to the functionality and which parts contribute only to the model’s aesthetics. The aim of this formative study is to identify functionality descriptors in a wide variety of 3D models. To do this we qualitatively coded 1000 designs sourced from Thingiverse using a similar approach to Hofmann et al. and Chen et al. From these codes, we developed a taxonomy of 3D model functionality.

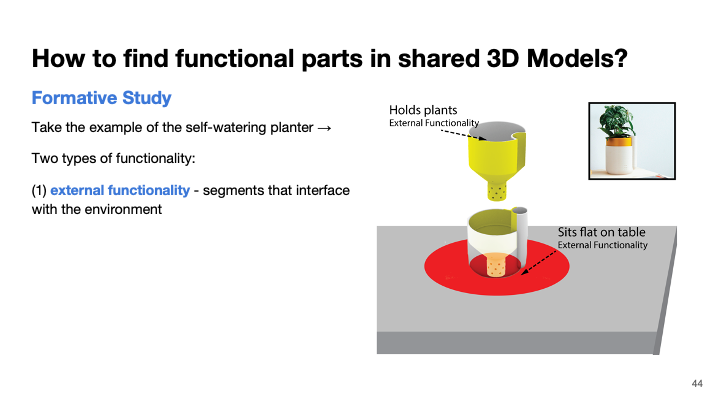

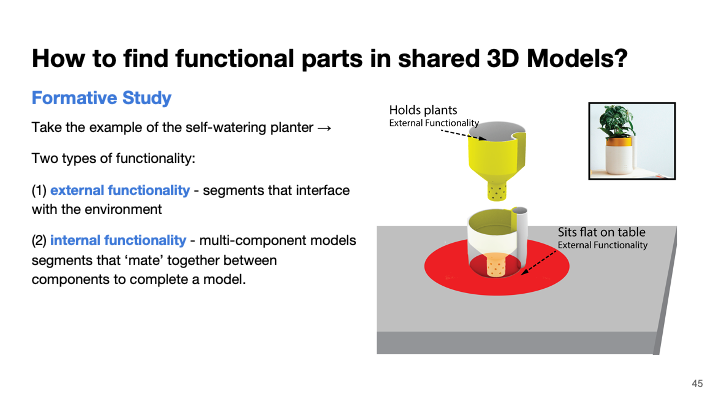

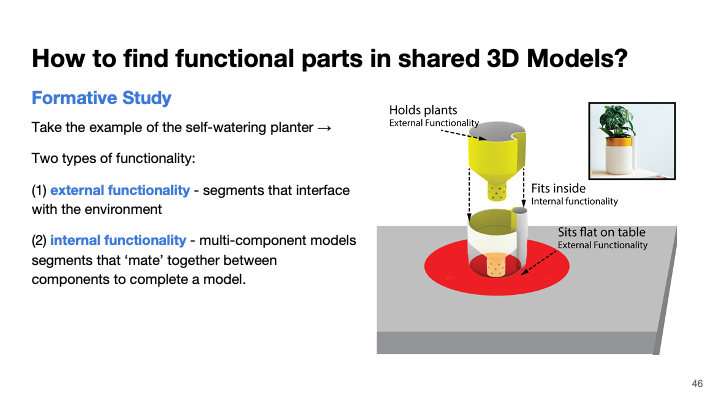

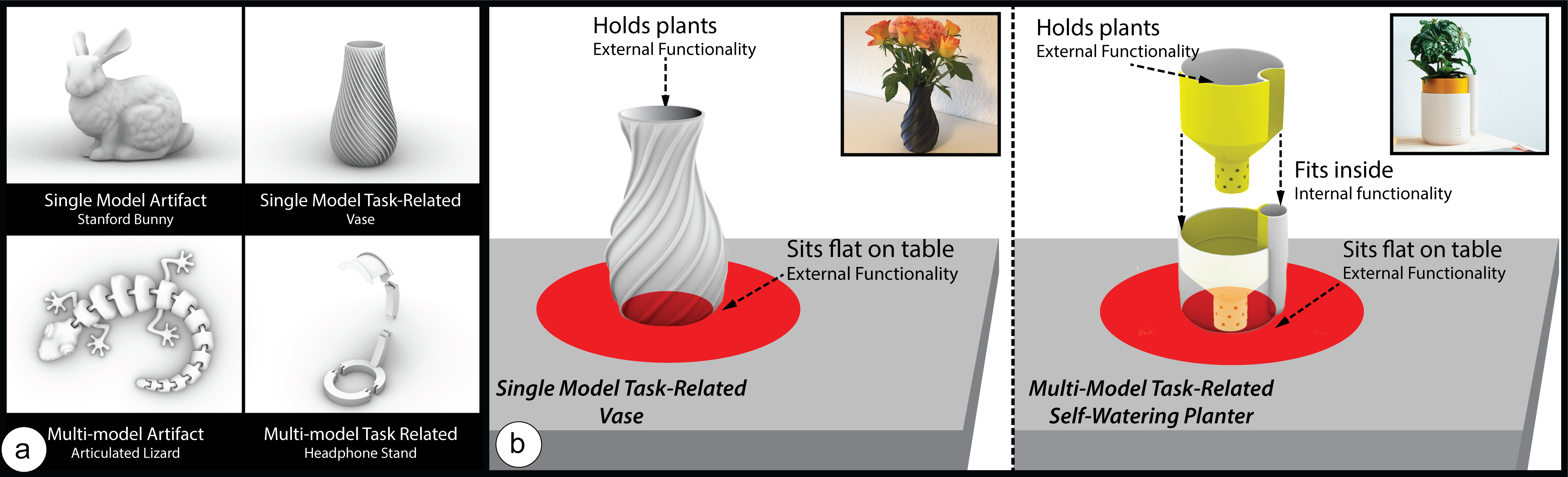

Figure 2: Categories of 3D models based on functionality: (a) We identify four categories of models defined by two dimensions: Artifact vs. Task-Related and Single vs. Multi-Component models. These dimensions align with differences between external and internal contexts. (b) An example of external and internal functionality on segments of a vase and a self-watering planter.

Data Collection

Thingiverse is a popular online resource for novice and expert makers to share 3D printing designs, or “things”. While some models are shared in editable formats, most are shared as difficult-to-modify 3D meshes in OBJ and STL file formats (OBJ/STL). We collected and analyzed the 1000 most popular things on Thingiverse as of January 23rd, 2023. Although large-scale datasets exist for segmentation tasks (COSEG and PartNet), we found that sampling models from Thingiverse provides a wider variety of 3D models suited for fabrication since existing data sets are not intended for 3D printing.

We organized and standardized all 3D models included in these 1000 things. We first excluded any 3D models that were not in an STL, OBJ, or SCAD format, limiting our data to 3D meshes. We excluded all corrupted 3D models, and the ones shared without the three given formats. We converted all remaining models to the OBJ format. Next, we manually excluded duplicate meshes of varied sizes since this would not contribute to our classification of functional components. For highly-similar models shared as a collection (e.g., ‘Fantasy Mini Collection’, Thing ID: 3054701), we kept only a single mesh. After this preprocessing, we had a total of 993 different Thingiverse things, comprising 10,945 unique 3D models (i.e., objects or parts of objects).

Inductive Taxonomy Development

We used an iterative qualitative coding method to develop our taxonomy of functionality. We first inductively coded 100 randomly selected models to develop an understanding of the functionality of these 3D-printed models. After negotiation across coders, we identified two distinct categories of 3D models based on their functionality: Artifacts and Task-Related Models. Artifacts are objects that serve primarily aesthetic purposes, such as statues. Task-Related models have been designed to help perform a specific task, such as a phone stand or battery dispenser. Both Artifacts and Task-Related models can be composed of single or multiple components assembled together (Figure 2).

From this classification of types of models, we determined two axes that we can use to classify if a segment of a 3D mesh can be modified without changing the intended functionality of the design. The first axis is external context, which describes how the surface of the segment interfaces with the real world to affect the functionality of the model (e.g., the flat base of a planter interfaces with a table surface). Most Artifacts have few segments with external contexts, while Task-Related models have many. The second axis is internal context; a segment has high internal context if it interfaces with other segments within the same thing to affect the design’s functionality (e.g., linkages in an articulated lizard). Segments that do not have internal or external context are considered aesthetic since they do not affect functionality. Segments without internal and external context can readily be modified since they only serve an aesthetic purpose.
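The taxonomy can be read as a small decision rule over the two axes. The sketch below is illustrative only: the `Segment` type, its field names, and the label strings are assumptions introduced here, and the order in which the two contexts are checked is arbitrary, since a segment with either context is treated as functional.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    """Hypothetical record for one mesh segment (names are illustrative)."""
    name: str
    external_context: bool  # interfaces with the real world (e.g., a flat base)
    internal_context: bool  # interfaces with another segment of the same thing


def classify_segment(segment: Segment) -> str:
    """Map the two context axes onto the three taxonomy categories."""
    if segment.external_context:
        return "externally-functional"
    if segment.internal_context:
        return "internally-functional"
    return "aesthetic"


def is_stylizable(segment: Segment) -> bool:
    """Only segments with neither kind of context are safe to modify."""
    return classify_segment(segment) == "aesthetic"
```

For instance, a planter base with external context is not stylizable, while an outer wall with neither context is.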

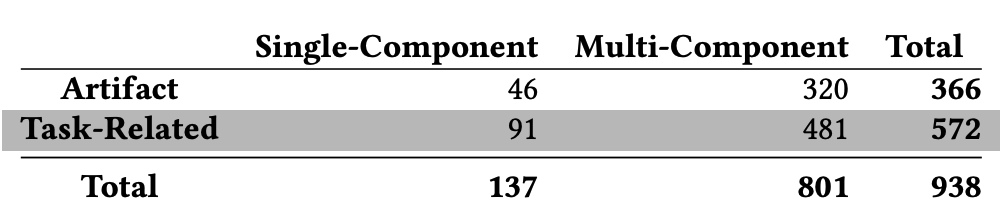

Deductive Functionality Classification

Using this taxonomy, we then labeled our entire data set of 993 models based on the two types of designs and the axes of internal and external context. For each 3D model, two annotators examined the associated Thingiverse metadata to understand the intended functionality of the model using shared images of the model being used in specific scenarios. Independently, each annotator labeled the model as Artifact or Task-Related based on its external functionality. This resulted in a Cohen’s Kappa inter-rater reliability score of 0.94. They negotiated differences to finalize the labels for each model, reaching full agreement. Two examples of models that required negotiation are ThingID:3096598 (Chainmail) and ThingID:1015238 (Robotic Arm). The annotators resolved the former to be an Artifact because the Thingiverse page did not showcase any task-specific use case, and the latter to be Task-Related since its metadata contained videos describing a specific functionality. Following data classification, we removed all models that had no aesthetic segments because these cannot be readily modified. This exclusion primarily removed models used for calibrating printers where any change would have changed the functionality. Our final, labeled data set contained 938 models and is summarized in Table 1.
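For readers unfamiliar with the inter-rater statistic reported above, the following is a minimal sketch of Cohen's Kappa for two annotators. The function name and the example labels are illustrative; the annotation data themselves are not reproduced here.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's Kappa: agreement between two raters, corrected for chance."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("both raters must label the same non-empty item set")
    n = len(labels_a)
    # Observed agreement: fraction of items given the same label by both.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Expected chance agreement from each rater's label frequencies.
    count_a, count_b = Counter(labels_a), Counter(labels_b)
    categories = count_a.keys() | count_b.keys()
    expected = sum(count_a[c] * count_b[c] for c in categories) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used a single identical label throughout
    return (observed - expected) / (1.0 - expected)
```

A value of 1 indicates perfect agreement and 0 indicates agreement no better than chance.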

Table 1: Counts of Thingiverse Designs based on dimensions of internal and external functionality. Rows reflect external context and columns reflect internal context.

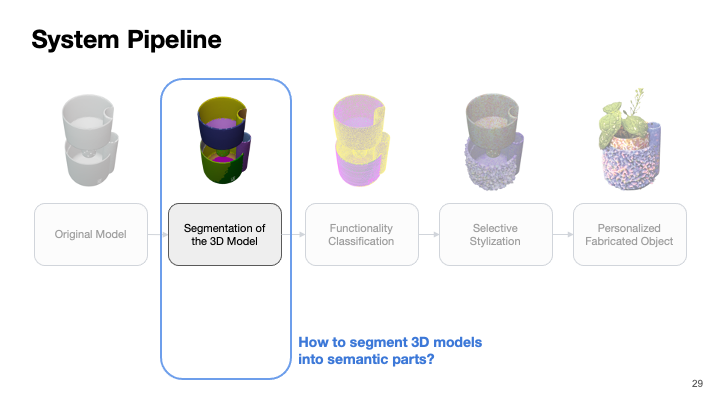

Our formative study helps us better understand the types of functional segments that affect the functionality of the model. Based on these results and our data set, we present a method for functionality-aware segmentation and classification of 3D meshes designed for 3D printing. In this section, we first define our segmentation problem and present our segmentation approach. Next, we present a method for classifying internal and external functionality on each segment of a model. We present our approach to tuning important hyper-parameters that affect the efficacy of our method and an evaluation of our classification approach compared to labels generated in our formative study. Finally, we present our Style2Fab user interface developed using this functionality-aware segmentation approach.

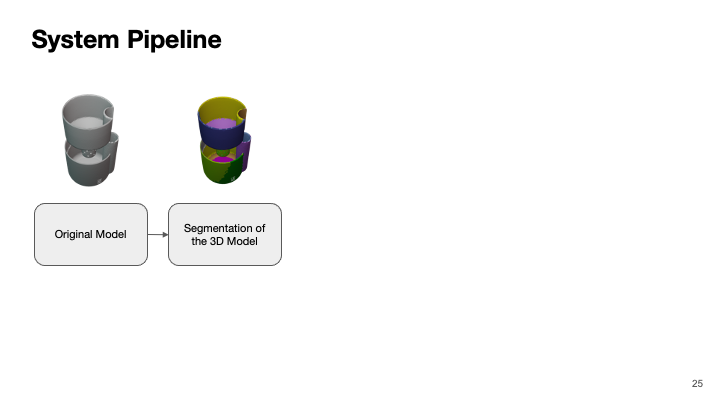

We use an unsupervised segmentation method based on spectral segmentation that leverages the mesh geometry to predict a mesh-specific number of segments. This method allows us to generalize across 3D printable models with diverse functionality. Using our Thingiverse data set, we evaluated this method for its accuracy in predicting the number of functional segments and its ability to handle a wide range of mesh resolutions.

Segmentation Approach

The process of segmenting a 3D mesh can be defined as finding a partition of the mesh such that the points in different clusters are dissimilar from each other, while points within the same cluster are similar to each other. We use a spectral segmentation process that leverages the spectral properties of a graph representation of the 3D mesh to identify meaningful segments. By examining the eigenvectors and eigenvalues of the graph norm Laplacian matrix, this method captures the underlying structure of the mesh and groups similar vertices together, resulting in a meaningful partition of the model.

Consider a 3D mesh as a graph where nodes represent a set of faces and edges represent connections between adjacent faces. The segmentation problem is to decompose the mesh into 𝑘 non-overlapping sub-graphs that represent a piece of the model with consistent features (e.g., the base, outer rim, and inside of a vase). The hyper-parameter 𝑘 can be any integer value between 1, one segment containing all faces in the mesh, and 𝑛, one segment for every individual face in the mesh. If 𝑘 is too low, the segments will not be able to isolate components with unique functionality (e.g., the base of a vase is not separate from the outside). If 𝑘 is too high, functional components of the model may be split into multiple segments and may be modified in incompatible ways (e.g., half of the base is stylized with a surface texture and the other half has the original flat surface). A key challenge in functionality-aware segmentation is automatically selecting a value of 𝑘 for each design; we do not assume that makers will be able to easily identify a good value of 𝑘 when examining a design.
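The face graph described above can be sketched as follows for a triangle mesh. This is a minimal illustration that assumes faces are given as triples of vertex indices and that two faces are adjacent when they share an edge; the function name is hypothetical.

```python
from collections import defaultdict


def face_adjacency(faces):
    """Build a face graph: node = face index, edge = two faces sharing an edge.

    `faces` is a list of (a, b, c) vertex-index triples for a triangle mesh.
    Returns a dict mapping each face index to the set of adjacent face indices.
    """
    edge_to_faces = defaultdict(list)
    for face_index, (a, b, c) in enumerate(faces):
        for u, v in ((a, b), (b, c), (c, a)):
            # Store each edge with sorted endpoints so direction is ignored.
            edge_to_faces[(min(u, v), max(u, v))].append(face_index)

    adjacency = {face_index: set() for face_index in range(len(faces))}
    for sharing_faces in edge_to_faces.values():
        for i in sharing_faces:
            for j in sharing_faces:
                if i != j:
                    adjacency[i].add(j)
    return adjacency
```

On a strip of three triangles, `face_adjacency([(0, 1, 2), (1, 2, 3), (2, 3, 4)])` links the middle face to both of its neighbours and each end face only to the middle one.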

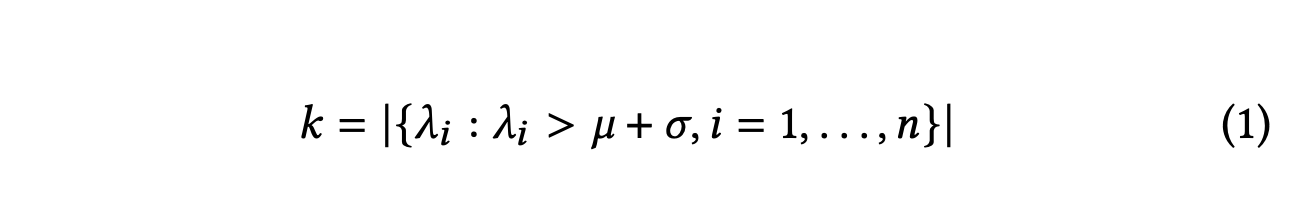

Predicting the Number of Segments: We use a heuristic-based approach for estimating a value of 𝑘 that partitions a mesh into segments that isolate functionality. Using a 3D mesh’s degree and adjacency matrices, we use spectral decomposition to extract an eigenbasis for the mesh. This allows us to use the resulting eigenvalue distribution, representing the connectedness of a mesh, to identify a partition yielding the highest connectedness for individual segments.

We first describe the spectral segmentation approach. Given a 3D mesh where 𝐹 represents the set of faces, we first construct a weighted adjacency matrix 𝑊. The element 𝑊𝑖𝑗 represents the similarity between faces 𝑓i and 𝑓j, calculated using the shortest geodesic distance between the centers of faces 𝑓i and 𝑓j and the angular distance between them.

We use the weight matrix, 𝑊, and the degree matrix, 𝐷, to compute the eigenvectors and eigenvalues of the face graph. These are taken from the norm Laplacian of the graph, formally defined as $L = \sqrt{D}^{\top} W \sqrt{D}$. From the eigenvalues of 𝐿, we are able to capture the connectedness of the mesh, where large gaps between eigenvalues imply weak connectedness.

In the approach proposed by Liu and Zhang, the eigenvectors corresponding to the smallest 𝑘 eigenvalues 𝜆 are used to construct a 𝑘-dimensional feature space, where 𝑘 is the desired number of segments. Instead of using the smallest 𝑘 eigenvalues, we analyze the entire distribution of eigenvalues 𝜆. A high standard deviation in the eigenvalue distribution indicates that the eigenvalues are spread out over a wide range, which suggests a more complex graph structure with varying connectivity and potentially multiple distinct clusters or segments. In this case, the graph may benefit from a more refined segmentation process. On the other hand, a low standard deviation implies that the eigenvalues are more tightly clustered, which suggests a relatively uniform graph structure with fewer distinct clusters. In this case, it would be sufficient to partition the graph into a lower number of clusters. We leverage this distribution to automatically calculate a value of 𝑘. Specifically, we calculate the number of eigenvalues that have a higher dispersion than the distribution’s standard deviation, using Equation 1.
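Equation 1 is not reproduced in this text, so the sketch below is only one plausible reading of the sentence above, not the paper's formula. It assumes that "dispersion" means an eigenvalue's absolute deviation from the mean of the distribution, and it clamps the result to at least one segment; both choices, and the function name, are assumptions made for illustration.

```python
import statistics


def estimate_num_segments(eigenvalues):
    """Count eigenvalues that deviate from the mean by more than one
    standard deviation (an ASSUMED interpretation of the heuristic)."""
    if not eigenvalues:
        raise ValueError("need at least one eigenvalue")
    mean = statistics.fmean(eigenvalues)
    std = statistics.pstdev(eigenvalues)  # population standard deviation
    count = sum(1 for lam in eigenvalues if abs(lam - mean) > std)
    # A mesh always has at least one segment (assumed lower bound).
    return max(1, count)
```

Under this reading, a tightly clustered spectrum yields a small 𝑘, and a spectrum with many outlying eigenvalues yields a larger one, which matches the qualitative behaviour described in the text.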

Once we have extracted the lowest 𝑘 eigenvectors and their corresponding eigenvalues we follow Liu and Zhang’s segmentation method that uses k-means clustering to identify segments spanning from the 𝑛 faces captured by these high-variation eigenvectors. Based on the resulting clusters, we assign each face in the mesh graph to its corresponding segment, resulting in a segmented 3D model.
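The clustering step can be illustrated with a plain k-means over per-face feature vectors. This is a minimal stand-in, not the implementation used by Liu and Zhang or by Style2Fab: it assumes each face has already been embedded as a tuple of coordinates taken from the selected eigenvectors, and it uses a deterministic farthest-point initialization chosen here for reproducibility.

```python
import math


def kmeans(points, k, iterations=50):
    """Cluster `points` (equal-length tuples) into k groups.

    Returns a list with one cluster label per point.
    """
    if not 1 <= k <= len(points):
        raise ValueError("k must be between 1 and the number of points")
    # Farthest-point initialization: start from the first point, then
    # repeatedly add the point farthest from all chosen centers.
    centers = [points[0]]
    while len(centers) < k:
        farthest = max(points, key=lambda p: min(math.dist(p, c) for c in centers))
        centers.append(farthest)

    labels = None
    for _ in range(iterations):
        new_labels = [
            min(range(k), key=lambda c: math.dist(p, centers[c])) for p in points
        ]
        if new_labels == labels:
            break  # assignments stopped changing
        labels = new_labels
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:
                centers[c] = tuple(sum(axis) / len(members) for axis in zip(*members))
    return labels
```

Each returned label plays the role of a segment id for the corresponding face.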

Uniform Mesh Resolution for Segmentation: This segmentation approach is dependent on a uniform resolution of a mesh; non-uniform meshes will produce incoherent segmentation as some portions of the model are represented by too few faces and other portions have too many faces. Unlike other segmentation approaches, our data set of real-world models did not have consistent resolution and this would have affected the utility of our method. Thus, we re-mesh all models to give them a uniform 25k resolution using PyMeshLab. Note that the runtime of this process increases with the resolution. Therefore, we want a low-resolution value that does not negatively affect our segmentation and classification method.

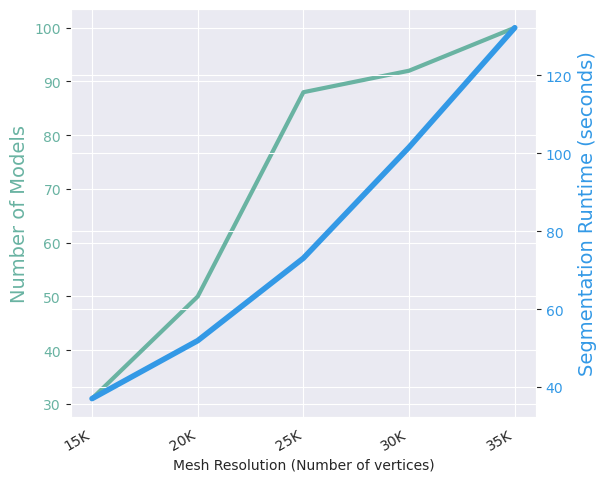

We determined that resolution to be 25k (vertices) by segmenting and comparing 100 randomly selected 3D models from our data set. For each mesh, we segmented the remeshed models with 15K, 20K, 25K, 30K, and 35K vertices. We then looked for the lowest resolution that stabilized the predicted number of segments 𝑘. That is, for all higher resolutions, the number 𝑘 did not change. For 88% of models, a 25k resolution stabilized this value. The segmentation at 25K resolution took an average of 72 seconds, while segmentation at 30K resolution took an average of 102 seconds. Figure 3 shows the effect of mesh resolution on the number of models it stabilized and the time it took to complete segmentation.

Figure 3: Comparison plots of the number of stabilized models (green) and segmentation time (blue) against mesh resolution.
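The stabilization criterion used to pick the resolution can be expressed compactly. The sketch below assumes the predicted 𝑘 for one model is available at each tested resolution; the function name and the example values are illustrative, not data from the study.

```python
def lowest_stable_resolution(k_by_resolution):
    """Return the lowest resolution from which the predicted k stops changing.

    `k_by_resolution` maps a mesh resolution to the k predicted at that
    resolution. A resolution is stable if every higher tested resolution
    yields the same k.
    """
    if not k_by_resolution:
        raise ValueError("need at least one resolution")
    resolutions = sorted(k_by_resolution)
    for index, resolution in enumerate(resolutions):
        k_here = k_by_resolution[resolution]
        if all(k_by_resolution[r] == k_here for r in resolutions[index:]):
            return resolution
    # Not reached for non-empty input: the highest resolution is always stable.
    return resolutions[-1]
```

Applied per model, the share of models whose result is at most 25K corresponds to the stabilization rate reported above.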

Analyzing Functionality in 3D Models

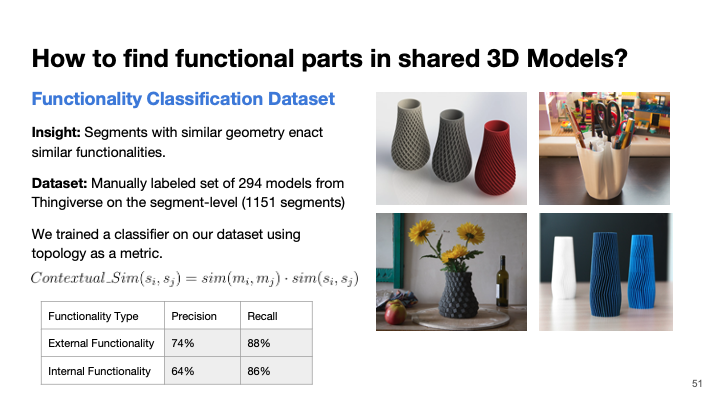

After segmentation, the system must classify each segment as functional or aesthetic. To do this we use a heuristic that infers that if a segment 𝑖 is topologically similar to (i.e., shaped like) another segment 𝑗 , the functionality of 𝑖 will be the same as 𝑗 . Thus, to classify each segment, we must find some similar topology that has already been labeled as functional. Using the taxonomy of internal and external functionality from our formative study, we can break up the problem of finding a similar, labeled segment 𝑗 into two approaches: (1) we analyze external functionality by identifying topologically similar segments in models in our Thingiverse data set, and (2) we determine internal-functionality in multi-component models by identifying linkages between components based on topologically similar segments. However, it is critical to compare segments and their parent meshes because comparing only segments introduces noise. Segments without the context of their parent mesh are just geometrical features that, while topologically similar, are likely to be used in different ways.

Using Similarity as a Heuristic: We hypothesize that similar models will use similar geometries to enact a similar functionality. We use the approach of measuring topological similarity from Hilaga et al., which uses Multiresolution Reeb Graph (MRG) representations of meshes to analyze the similarity. This method is ideal for our domain due to its invariance to translation, robustness to connectivity variations, and computational efficiency. Note that we can use this method on both whole meshes and individual segments since a segment is, itself, a mesh of connected faces. Thus, given segment 𝑠𝑖 in a mesh 𝑚𝑖 and segment 𝑠𝑗 in mesh 𝑚𝑗, the contextual similarity 𝐶𝑜𝑛𝑡𝑒𝑥𝑡𝑢𝑎𝑙_𝑆𝑖𝑚(𝑠𝑖, 𝑠𝑗) is the product of the similarity between the segments and their parent meshes (Equation 2). This gives us a value between 0 (i.e., a complete topological mismatch) and 1 (i.e., identical topology).
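Written out from the verbal definition above, the contextual similarity takes the following form. Here $\mathrm{Sim}$ is a placeholder name for the MRG-based similarity of Hilaga et al.; it is an assumption introduced for illustration and may differ from the notation of the original Equation 2.

```latex
\[
\mathrm{Contextual\_Sim}(s_i, s_j)
  = \mathrm{Sim}(s_i, s_j) \cdot \mathrm{Sim}(m_i, m_j),
\qquad
0 \le \mathrm{Contextual\_Sim}(s_i, s_j) \le 1 .
\]
```

For example, two segments with similarity 0.9 whose parent meshes have similarity 0.5 receive a contextual similarity of 0.45, so a close segment match inside dissimilar models is discounted.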

Given a segment 𝑠 in mesh 𝑚 and a set of other meshes M, we can find the similarity between 𝑠 and all of the segments S in the other meshes. From these similarity values, we decide the label of 𝑠’s functionality by comparing it to the labels on the subset of S that are most similar, S𝑠𝑖𝑚. We take a uniformly weighted vote of the labels on each segment in S𝑠𝑖𝑚 and classify 𝑠 as the majority label; that is, each vote counts equally regardless of how similar the segment is to 𝑠. We empirically found that the accuracy of functionality classification converges after comparing 𝑠 to five other similar segments (i.e., |S𝑠𝑖𝑚| = 5).
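The voting step can be sketched as follows. The input format, the function name, and the label strings are assumptions made for illustration. Ties cannot occur with five votes over two labels; for smaller candidate sets this sketch resolves ties toward "functional", a conservative choice made here rather than one stated in the text.

```python
from collections import Counter


def classify_by_vote(candidates, top_n=5):
    """Label a segment by an unweighted majority vote of its nearest matches.

    `candidates` is a list of (contextual_similarity, label) pairs for
    already-labeled segments. Only the `top_n` most similar ones vote.
    """
    if not candidates:
        raise ValueError("need at least one labeled candidate")
    ranked = sorted(candidates, key=lambda pair: pair[0], reverse=True)[:top_n]
    votes = Counter(label for _, label in ranked)
    best_count = max(votes.values())
    winners = [label for label, count in votes.items() if count == best_count]
    # Assumed tie-break: prefer "functional" so functional parts stay protected.
    return "functional" if "functional" in winners else winners[0]
```

Note that similarity only determines who votes, not how much each vote counts.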

Now that we have a method of labeling a segment based on a related set of pre-labeled meshes M, we must find mesh sets that help us to identify, separately, internal and external functionality. The size of M will have a significant effect on the time it takes to compute segment similarity. For each mesh 𝑚𝑖 in the set, there will be 𝑘𝑖 segments to compare to 𝑠. Thus, as the size of the mesh set increases the number of similarity comparisons increases by a factor of 𝑘𝑖 and quickly becomes too time-consuming to compute. To classify functionality, we need to find a small set M that provides the most information about the segment with the least amount of noise. Our insights into the differences between internal and external functionality help us to select good sets of meshes.

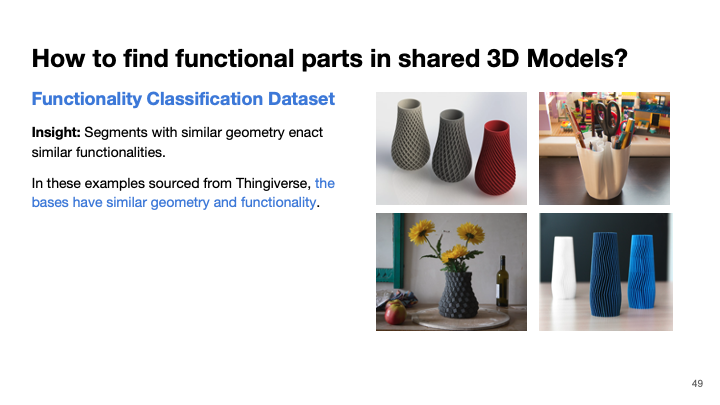

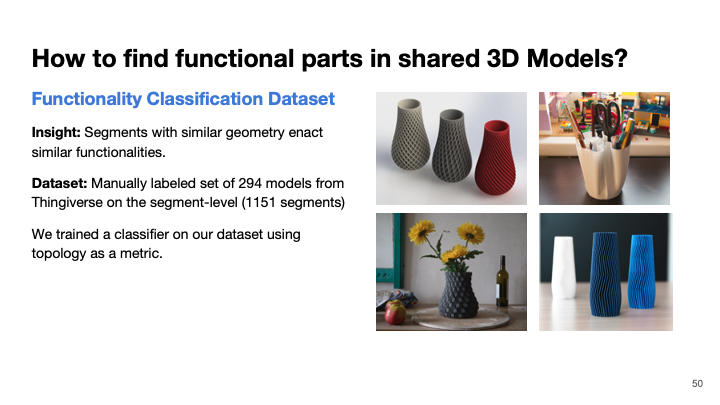

Classifying External Functionality: To identify external functionality, we compare segments to models that function similarly using similar geometric features. We built a labeled data set of segmented models from Thingiverse for identifying external functionality from the 46 Artifacts and 91 Task-Related single-component models in our data set. First, we segmented all of these models using our segmentation method and produced 1151 different segments. Then two annotators analyzed each segment with contextual information from the parent model’s Thingiverse page and independently labeled the segment as Aesthetic or Functional. We also asked the annotators to independently label a segment if it contained an Aesthetic and Functional component fused together (inefficient segmentation). After reviewing all segments, they had an inter-rater reliability of 0.97. They negotiated all disagreements to produce our ground truth classification of segment type. At the end of the study, 51% of the segments were annotated as Aesthetic, while 49% of the segments were annotated as Functional. Among the functional segments, the annotators agreed that 24 segments (2%) from 17 different models (12.4%) were composed of Aesthetic and Functional elements fused together, leading them to annotate the entire segment as Functional.

Naively, we could classify the external functionality of a segment 𝑠 in a mesh 𝑚 by comparing it to all meshes in this data set. However, this would be computationally expensive and introduce noise since segment-level similarity may occur between segments that are used in different ways in models with different uses. Instead, we prune the set of labeled models by first comparing mesh-to-mesh similarity and collecting the set of most-similar meshes M𝑠𝑖𝑚 from our data set. As shown in Section 4.2.1, we found that five meshes were sufficient. Treating external functionality as a binary label, we obtained a precision of 74% and a recall of 88%. Hence, this is a conservative approach to functionality prediction, where the classifier has a higher false positive rate: it is more important that the system does not modify functional components than that it misses aesthetic components.

Classifying Internal-Functionality: Similar to the case of external context, we can identify internal functionality by comparing a segment to a set of segmented models with similar internal functionality. However, internal context is dependent on similarity within a group of meshes that make up a mechanism. Two segments have internal functionality if they interface with each other to form a linkage in a mechanism. Thus, our problem was to identify pairs of segments between components (multiple models that compose a single mechanism) and classify them as internally functional. We approach the problem in the following manner: We first create the set (𝐶𝑜𝑚𝑏𝑖𝑛𝑎𝑡𝑖𝑜𝑛𝑠(M𝑚)) containing all possible pairs of segments not from the same mesh. For any model 𝑚, we can now search over all possible pairs of segments between different meshes from the set 𝐶𝑜𝑚𝑏𝑖𝑛𝑎𝑡𝑖𝑜𝑛𝑠(M𝑚), and identify similar segments.

In this case, instead of assigning the label by taking a vote across the five most similar segments (i.e., S𝑠𝑖𝑚), we decide that there is a linkage between a segment 𝑠 and another segment 𝑠𝑐 , in another component mesh, if they have a similarity value greater than the hyper-parameter 𝛼. In the case that multiple segments have high enough similarity, 𝑠𝑐 will be the segment with the highest similarity to 𝑠. Once identified, we label both 𝑠 and 𝑠𝑐 as having internal functionality.

To increase efficiency, we prune the number of segment comparisons in this process. First, we can exclude all segments in meshes that have been labeled as having external functionality. Ultimately, we are looking for a single binary label between aesthetic and functional, so it does not matter if a segment is both internally and externally functional. Second, as we identify linkages we can remove both 𝑠 and 𝑠𝑐 from future comparisons within the mechanism because linkages are formed of only two segments.
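The linkage search and its two pruning rules can be sketched as a greedy loop. This is an illustration under stated assumptions: segments are identified by hypothetical `(component, segment)` pairs, `similarity` is any caller-supplied function returning a value in [0, 1], and segments are visited in input order.

```python
def find_linkages(components, similarity, alpha=0.86,
                  externally_functional=frozenset()):
    """Pair segments from different components whose similarity exceeds alpha.

    `components` maps a component name to a list of its segment names.
    Returns a list of ((component, segment), (component, segment)) linkages.
    """
    all_segments = [(comp, seg) for comp, segs in components.items() for seg in segs]
    linked = set()
    linkages = []
    for s in all_segments:
        # Pruning: skip segments that are already functional or already linked.
        if s in linked or s in externally_functional:
            continue
        candidates = [
            (similarity(s, other), other)
            for other in all_segments
            if other[0] != s[0]  # must belong to a different component
            and other not in linked
            and other not in externally_functional
        ]
        candidates = [c for c in candidates if c[0] > alpha]
        if not candidates:
            continue
        # Among sufficiently similar segments, keep the most similar one.
        _, partner = max(candidates, key=lambda c: c[0])
        linkages.append((s, partner))
        linked.update((s, partner))
    return linkages
```

Both members of each returned pair would then be labeled as internally functional.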

To decide that two segments are sufficiently similar to be considered a linkage, we need a threshold similarity value of 𝛼. To identify this threshold we gathered ground truth data from our data set. Two researchers annotated segments of the multi-component models in our data set to identify linked segments. We randomly selected 50 things with multiple components which contained a total of 157 component meshes. For each model, two annotators independently labeled all pairs of segments that formed a linkage in the mechanism, resulting in an inter-rater reliability score of 0.99. They negotiated disagreements and produced a ground truth dataset. From this data set, we identified an effective threshold similarity score 𝛼 = 0.86 by evaluating the precision and recall across multiple 𝛼 values. We found that 𝛼 = 0.86 maximized the identification of functional segments and then minimized the misidentification of aesthetic segments. By this analysis, we got a precision value of 64% and a recall value of 86%. As with classifying external context, we prioritize high recall over precision, since missing a functional segment will break a model while missing an aesthetic segment will only affect aesthetics. Therefore, we opted to have a more conservative classifier for both the internal and external functionality.
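One way to carry out such a threshold sweep is sketched below. It assumes the selection rule is "highest recall first, then highest precision", which is our reading of the sentence above; the function name, the input format, and the example scores are illustrative and are not the study's data.

```python
def choose_alpha(scored_pairs, alphas):
    """Pick the threshold with the best recall, breaking ties by precision.

    `scored_pairs` is a list of (similarity, is_true_linkage) pairs from
    annotated ground truth. Returns (alpha, precision, recall).
    """
    best_key, best_alpha = None, None
    for alpha in alphas:
        tp = sum(1 for score, truth in scored_pairs if score > alpha and truth)
        fp = sum(1 for score, truth in scored_pairs if score > alpha and not truth)
        fn = sum(1 for score, truth in scored_pairs if score <= alpha and truth)
        recall = tp / (tp + fn) if tp + fn else 0.0
        precision = tp / (tp + fp) if tp + fp else 0.0
        key = (recall, precision)  # recall dominates, precision breaks ties
        if best_key is None or key > best_key:
            best_key, best_alpha = key, alpha
    if best_alpha is None:
        raise ValueError("need at least one candidate alpha")
    return best_alpha, best_key[1], best_key[0]
```

Ordering the comparison by recall first encodes the conservative preference: a missed linkage is costlier than a falsely protected aesthetic segment.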

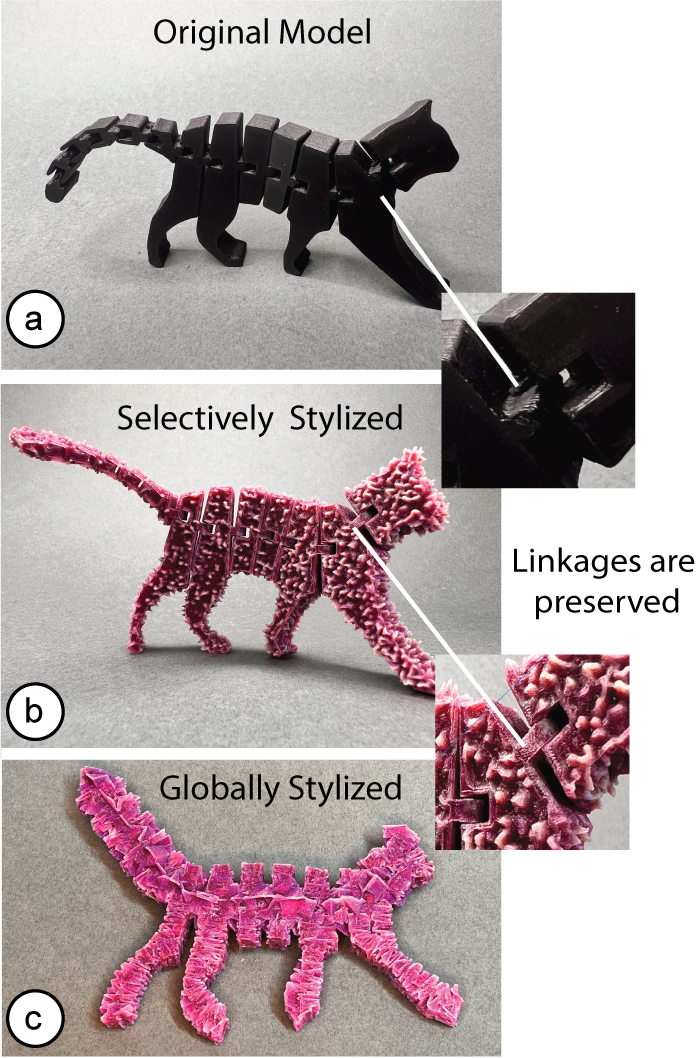

Figure 4: A demonstration of the differences between functionality-aware and global styling. A “flexi cat” model (Thing: 3576952) is shown (a) without styles, (b) with functionality-aware styles, and (c) functionally broken by global styles.

Stylization of Segments

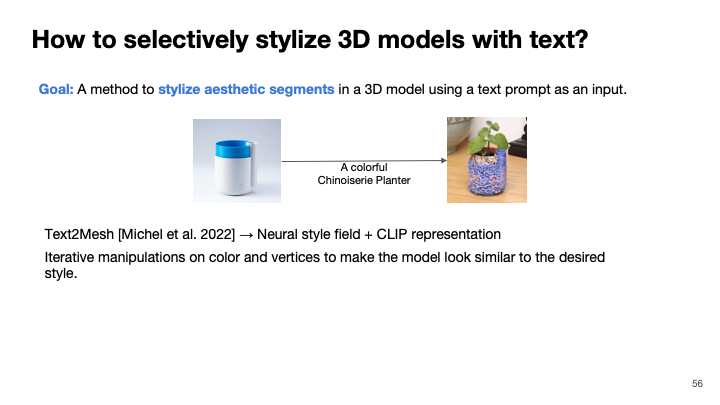

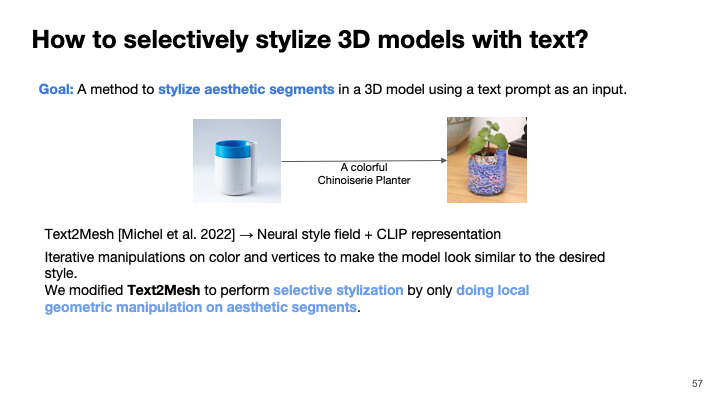

We use Text2Mesh to stylize models based on text prompts. Text2Mesh uses a neural network architecture that leverages the CLIP representation. The system considers a 3D model as a collection of vertices, where each vertex has a color channel (RGB) and a 3D position that can move along its vertex normal. Text2Mesh reduces the loss between the 3D model rendering (CLIP representation) from different angles and the CLIP representation of the textual prompt using gradient descent. Text2Mesh makes small manipulations in both the color channel and vertex displacement along the vertex normal for each of the vertices in order to make it look more similar to the text prompt. This allows the system to generate a stylized 3D model that reflects the user’s desired style.

This method will stylize the whole mesh and change the topology of the functional segments. In Figure 4a-c, we show that global stylization can render a functional object, in this case, an articulated cat, inoperable. We augment this system by adding an additional step that masks the gradients, setting them to zero for functional vertices. This allows manipulation of the color and displacement channels of aesthetic segments while preserving the functional segments of the model. As specified in Text2Mesh, we run this optimization for 1500 iterations.
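The effect of gradient masking can be shown with a single plain gradient-descent step. This sketch is not Text2Mesh code: it uses ordinary Python lists in place of tensors, treats each vertex as having one scalar displacement along its normal, and the function and parameter names are hypothetical.

```python
def masked_descent_step(displacements, gradients, functional_mask,
                        learning_rate=0.01):
    """Apply one gradient-descent update, skipping functional vertices.

    `functional_mask[i]` is True when vertex i belongs to a functional
    segment; its gradient is zeroed so its displacement never changes.
    """
    if not (len(displacements) == len(gradients) == len(functional_mask)):
        raise ValueError("all inputs must have one entry per vertex")
    updated = []
    for value, grad, is_functional in zip(displacements, gradients, functional_mask):
        masked_grad = 0.0 if is_functional else grad
        updated.append(value - learning_rate * masked_grad)
    return updated
```

Because the mask is applied at every iteration, functional vertices keep their original position for the whole optimization, whatever the loss suggests. The same masking would apply to the per-vertex color channel.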

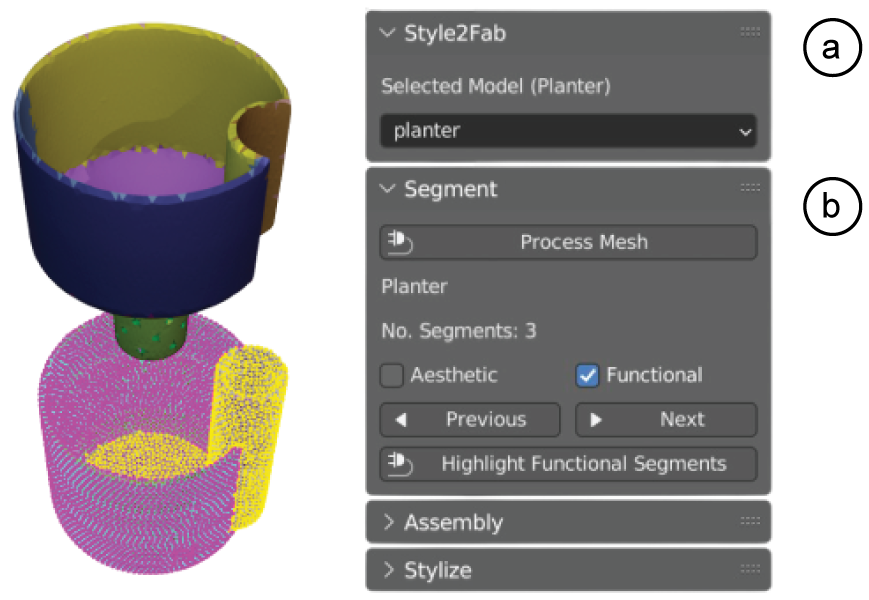

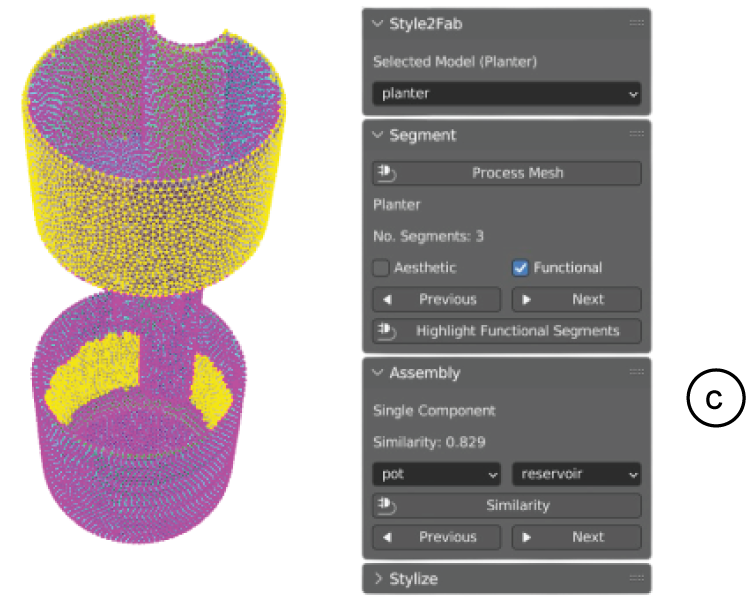

Figure 5: In the Style2Fab UI, the user (a) loads their model and (b) browses the functionality labels after segmentation. (c) In the case of multi-component models, the user can examine and adjust linked segments between components.

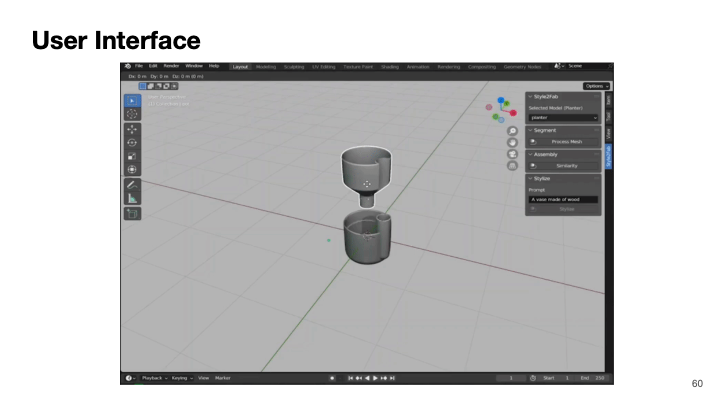

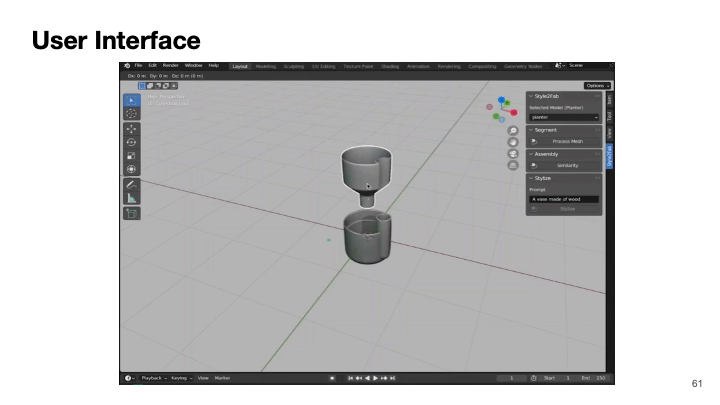

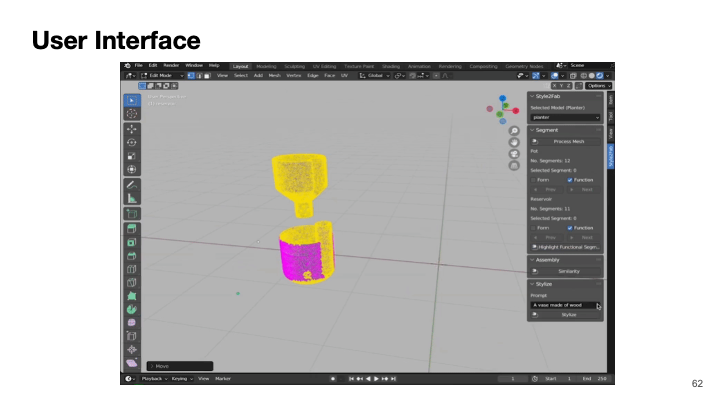

Style2Fab User Interface and Workflow

Style2Fab is a plugin for the open-source 3D design software tool Blender. To stylize a model with Style2Fab, the user must: (1) pre-process their model for segmentation and stylization, (2) segment and classify the functionality of each segment, (3) selectively apply a style to segments based on functionality, and (4) review their stylized model. We break these tasks up into four menus in the user interface (Figure 5).

Pre-Processing and Segmentation: Once the user has loaded an OBJ file of their 3D mesh into the plugin, they “Process” the model to standardize its resolution and automatically detect the number of segments (i.e., 𝑘) needed to classify functionality across the model (Figure 5b). By default, the resolution is set to 25k faces based on our evaluation. Next, the system will segment the model and give each segment a unique color to help the user visually identify the segments. If the user wants more or fewer segments they can modify the value of 𝑘 in the interface and re-segment the model. For multi-component models, the user can load multiple meshes representing each component and segment them in parallel.

Functionality Verification of Segments: After segmentation, the plugin opens panels displaying the type classification of each segment. Users can then review each segment and determine if they agree with the classification. The user’s goal is to identify the set of segments that should not be stylized to preserve the desired functionality of the design. To simplify this process, the user can select “Highlight all functional segments” (Figure 5c) to have all segments classified as functional highlighted in the user interface. If they agree with this segmentation, they can move on to stylization. Otherwise, they can individually review all segments. Next, we describe the process for the user to review individual segments.

First, in order to verify externally functional segments, the user can walk through the model’s segments and toggle the functionality class based on their interpretation of the model. When walking over a segmented model via the interface, the segments are highlighted on the model (Figure 5b).

When working with multiple components, the user can review the segments that were classified as having internal context based on linkages between components of the model. The user can use the “Assembly” panel (Figure 5c) to see pairs of connected segments on distinct models, and click “Separate” for incorrect assignments. If the user disagrees with this classification, they can adjust the similarity parameter 𝛼, which ranges between 0 and 1.

Selective Stylization of Aesthetic Elements: Post verification of functionality, users can stylize aesthetic segments of a 3D model by entering a natural language description of their desired style and clicking "Stylize Mesh". The completed model is then rendered alongside the original for review. Users can iterate on this process and apply new styles using new text prompts, or re-segment the model as needed.
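The selective step can be pictured as a mask over the mesh. The following is a minimal sketch of that idea only, not the plugin's implementation: the names `stylize_selectively`, `vertex_segment`, `functional_segments`, and `displace` are hypothetical, and the text-driven stylization is reduced to an arbitrary per-vertex function so that only the masking logic is shown.

```python
from typing import Callable, List, Set, Tuple

Vec3 = Tuple[float, float, float]


def stylize_selectively(
    vertices: List[Vec3],
    vertex_segment: List[int],
    functional_segments: Set[int],
    displace: Callable[[Vec3], Vec3],
) -> List[Vec3]:
    """Apply `displace` only to vertices whose segment is aesthetic.

    Vertices in segments listed in `functional_segments` are copied unchanged.
    """
    if len(vertices) != len(vertex_segment):
        raise ValueError("each vertex needs exactly one segment id")
    styled = []
    for vertex, segment in zip(vertices, vertex_segment):
        if segment in functional_segments:
            styled.append(vertex)            # functional: leave geometry as-is
        else:
            styled.append(displace(vertex))  # aesthetic: free to be stylized
    return styled


# Toy data (hypothetical): segment 0 is a functional base, segment 1 is aesthetic.
vertices = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 1.0), (1.0, 1.0, 1.0)]
vertex_segment = [0, 0, 1, 1]
styled = stylize_selectively(
    vertices,
    vertex_segment,
    functional_segments={0},
    displace=lambda v: (v[0], v[1], v[2] + 0.5),  # stand-in for a learned style
)
```

In this toy case the two base vertices keep their coordinates, while the two aesthetic vertices are shifted; toggling a segment's class in the verification step corresponds to adding or removing its id from `functional_segments`.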

USER STUDY

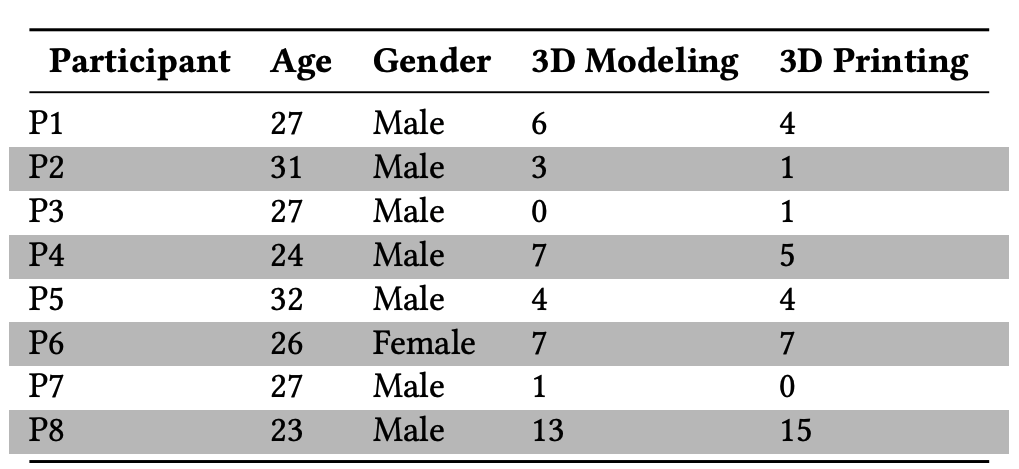

Table 2: Participant demographics and years of experience with 3D modeling and printing.

To evaluate if our functionality-aware segmentation method supports users in separating functional elements in 3D that they did not design, we had eight university students (Table 2) with varied 3D modeling and printing experience segment and stylize 3D models from our Thingiverse data set with and without automatic support from Style2Fab.

We randomly selected eight 3D models representing each of our four categories of models based on internal and external context: two single-component Artifacts, two single-component Task-Related models, two multi-component Artifacts, and two multi-component Task-Related models. We segmented each model using our segmentation method and automatically classified the functionality of those segments. Each participant was presented with two models with segmentation and functionality classification (i.e., experimental group) and two models that were only segmented and that they needed to manually classify (i.e., control group). In each condition, the participant received an Artifact and a Task-Related model. One of these was always a single-component model and the other was a multi-component model. We controlled for model-specific and learning effects by giving each participant a different combination of models and conditions. We asked participants to classify each segment in each model as functional or aesthetic. In the experimental condition, participants could accept or modify our functionality-aware classification. In the control condition, they had to make a manual classification. After classifying the segments, the users were asked open-ended questions about their experience. They were compensated with $20 for the hour-long study.
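The per-participant constraints described above can be written down as a small check. This is an illustrative sketch, not the procedure used to generate the study's assignments: the `Model` record, the function names, and the model names are hypothetical, and it additionally assumes that a participant's four models are all distinct.

```python
from typing import List, NamedTuple


class Model(NamedTuple):
    name: str
    category: str    # "Artifact" or "Task-Related"
    components: str  # "single" or "multi"


def condition_is_balanced(models: List[Model]) -> bool:
    """One Artifact and one Task-Related model; one single- and one multi-component."""
    if len(models) != 2:
        return False
    categories = {m.category for m in models}
    components = {m.components for m in models}
    return categories == {"Artifact", "Task-Related"} and components == {"single", "multi"}


def assignment_is_valid(experimental: List[Model], control: List[Model]) -> bool:
    """Both conditions are balanced and no model is repeated (assumed)."""
    names = [m.name for m in experimental + control]
    return (
        len(set(names)) == 4
        and condition_is_balanced(experimental)
        and condition_is_balanced(control)
    )


# Hypothetical model names, for illustration only.
experimental = [Model("A1", "Artifact", "single"), Model("T3", "Task-Related", "multi")]
control = [Model("A2", "Artifact", "multi"), Model("T1", "Task-Related", "single")]
valid = assignment_is_valid(experimental, control)

# Two single-component models in one condition violate the constraint.
unbalanced = condition_is_balanced(
    [Model("A1", "Artifact", "single"), Model("T1", "Task-Related", "single")]
)
```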

Findings

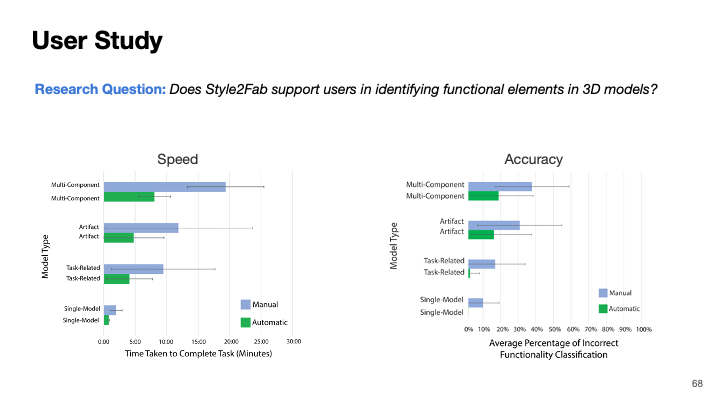

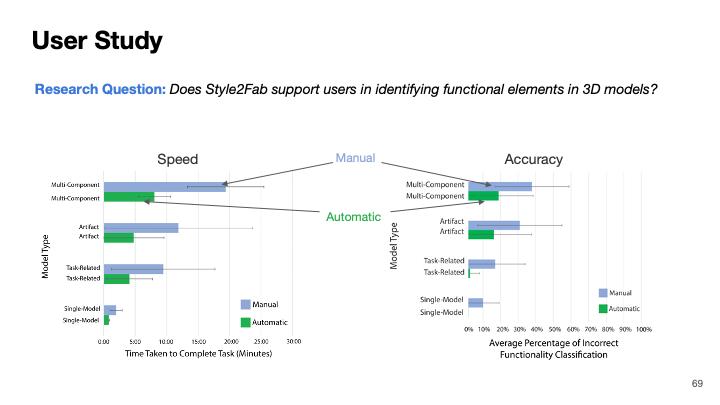

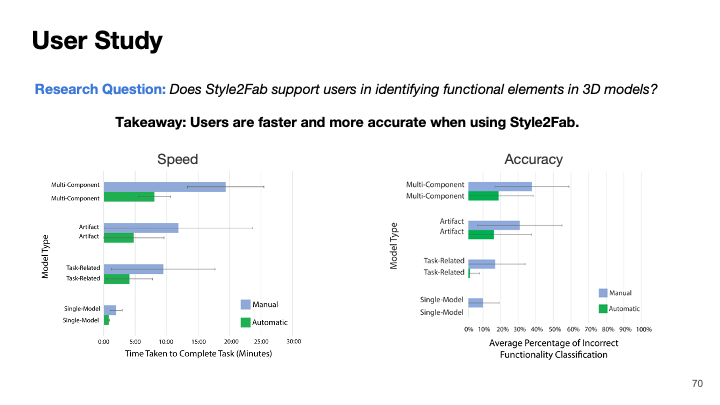

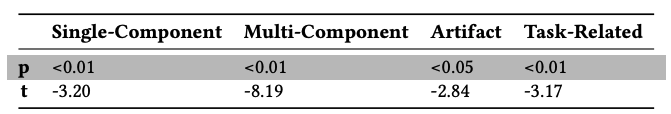

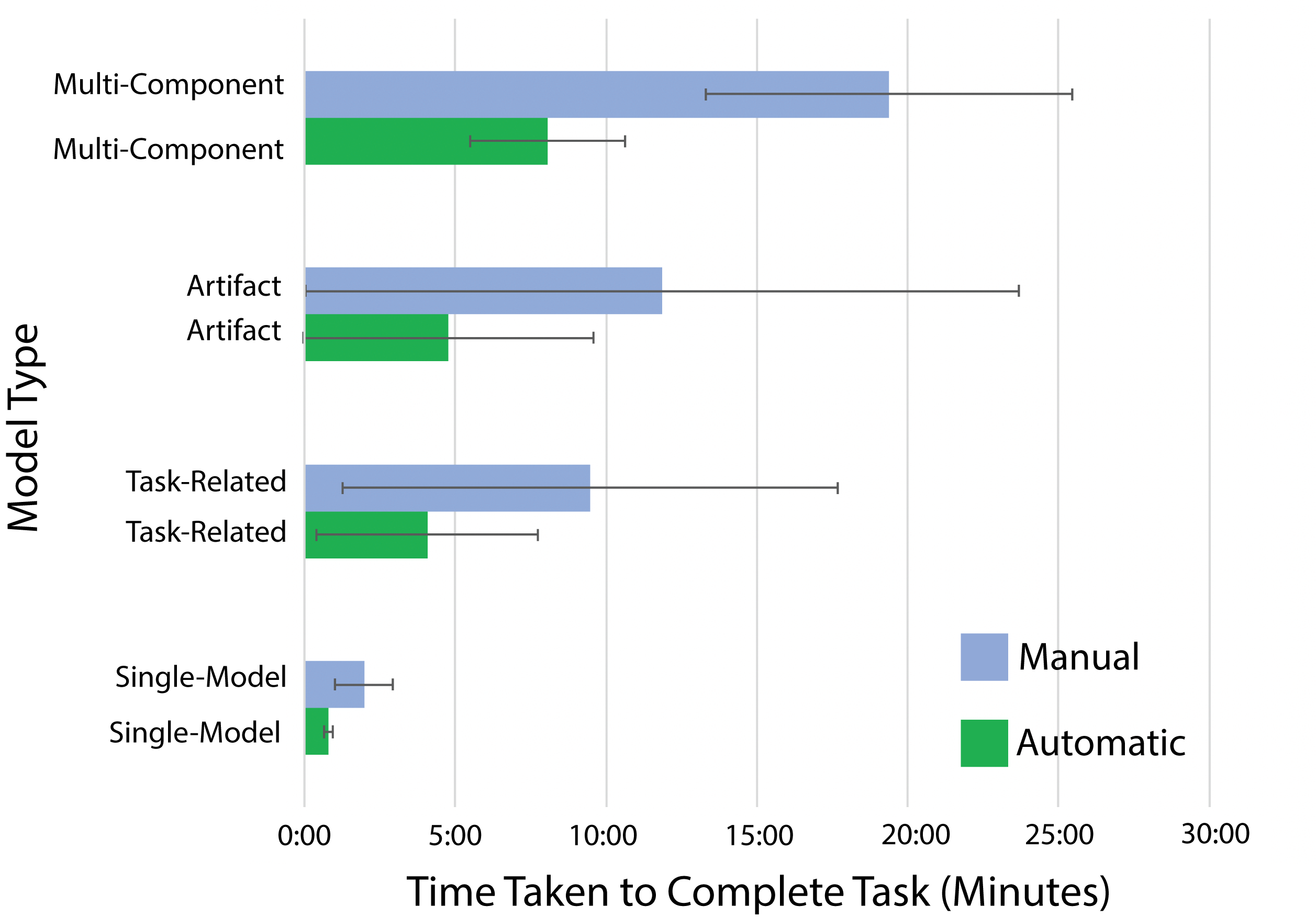

To understand the differences between the automatic and manual conditions, we compared the time taken to process a model, which included the classification runtime for the automatic condition, and the accuracy and precision of the classification (Figure 6). The time taken by the users depended on the complexity of the model, with the single-component models taking the least time and the multi-component models taking considerably longer. We conducted a paired one-tailed t-test with 7 degrees of freedom to see if functionality-aware segmentation significantly improved task completion time. Across all types of models, we found a significant improvement in task completion time at the 𝑝 < 0.05 level (Table 3, Figure 6). The greatest effects were on models with greater complexity, such as multi-component models and Task-Related models with multiple segments that had external context.
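For readers unfamiliar with the test, a paired one-tailed t-test on eight participants (7 degrees of freedom) can be sketched as below. The timing values are placeholder numbers invented for illustration and are not the study's data; the sketch also assumes one summary time per participant per condition. The critical value 1.895 is the standard one-tailed threshold for 𝛼 = 0.05 with 7 degrees of freedom.

```python
import math
from statistics import mean, stdev
from typing import Sequence

# One-tailed critical value of Student's t for alpha = 0.05, df = 7 (standard tables).
T_CRIT_ONE_TAILED_05_DF7 = 1.895


def paired_t_statistic(manual: Sequence[float], automatic: Sequence[float]) -> float:
    """t statistic for the mean of per-participant differences (manual - automatic)."""
    if len(manual) != len(automatic):
        raise ValueError("paired samples must have the same length")
    diffs = [m - a for m, a in zip(manual, automatic)]
    n = len(diffs)
    # stdev() is the sample standard deviation (n - 1 in the denominator).
    return mean(diffs) / (stdev(diffs) / math.sqrt(n))


# PLACEHOLDER data: minutes per participant, NOT taken from the study.
manual_minutes = [12.0, 15.5, 9.0, 14.0, 11.5, 16.0, 10.0, 13.0]
automatic_minutes = [8.0, 10.0, 8.5, 9.5, 9.0, 11.0, 8.0, 9.5]

t_stat = paired_t_statistic(manual_minutes, automatic_minutes)
degrees_of_freedom = len(manual_minutes) - 1
# One-tailed: we only ask whether the manual condition takes longer.
significant = t_stat > T_CRIT_ONE_TAILED_05_DF7
```

The test is one-tailed because the hypothesis is directional (automatic support reduces completion time), and paired because every participant contributes a measurement to both conditions.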

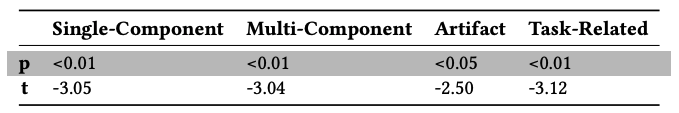

Table 3: T-test comparison of task completion rates within subjects. All values are significant.

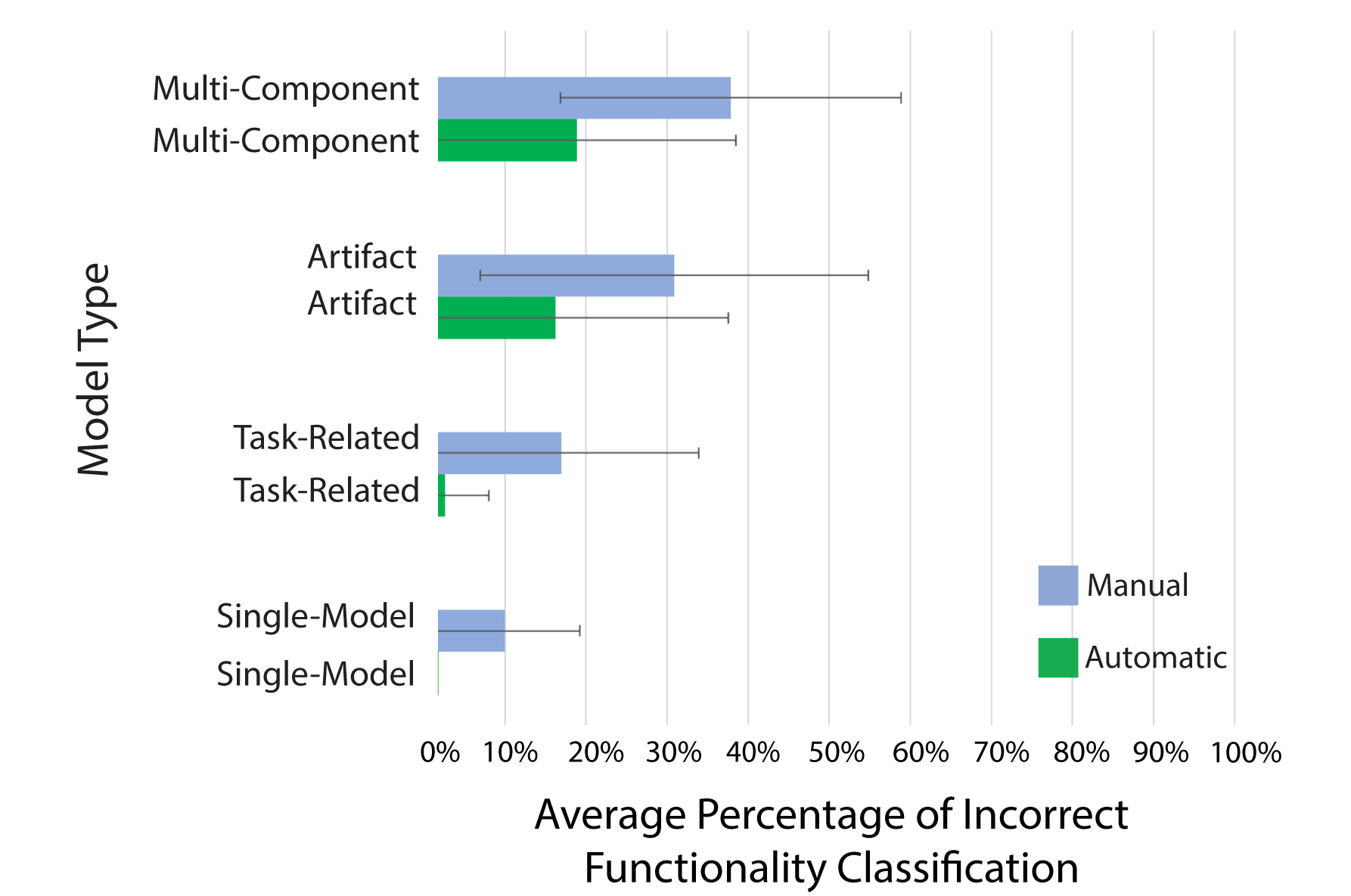

To analyze the functionality classification accuracy, we compared the user annotations from the study with the ground truth. To generate the ground truth, two authors annotated the models collaboratively and then fabricated the original and stylized versions to verify functionality preservation. On comparing the user annotations with the ground truth, we found that users were more accurate in single-component models. But as the complexity increased, users had a hard time finding functional segments. The automatic classifier significantly improved performance in finding functional segments with the difference in performance increasing as the complexity increased (Table 4, Figure 7).
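The comparison against ground truth amounts to computing per-model accuracy and precision over segment labels. The sketch below shows one way to do this under stated assumptions: labels are stored per segment id as the strings "functional" or "aesthetic", "functional" is treated as the positive class, and the function name and toy labels are hypothetical rather than taken from the study.

```python
from typing import Dict, Tuple


def accuracy_and_precision(
    predicted: Dict[int, str],
    truth: Dict[int, str],
    positive: str = "functional",
) -> Tuple[float, float]:
    """Return (accuracy, precision) of segment labels against ground truth."""
    if not truth or predicted.keys() != truth.keys():
        raise ValueError("both label sets must cover the same, non-empty segments")
    correct = sum(predicted[s] == truth[s] for s in truth)
    predicted_pos = sum(predicted[s] == positive for s in truth)
    true_pos = sum(predicted[s] == positive and truth[s] == positive for s in truth)
    accuracy = correct / len(truth)
    # Precision is undefined with no positive predictions; report 0.0 in that case.
    precision = true_pos / predicted_pos if predicted_pos else 0.0
    return accuracy, precision


# Toy labels (hypothetical) for a four-segment model.
ground_truth = {0: "functional", 1: "aesthetic", 2: "functional", 3: "aesthetic"}
user_labels = {0: "functional", 1: "functional", 2: "aesthetic", 3: "aesthetic"}

accuracy, precision = accuracy_and_precision(user_labels, ground_truth)
```

Here the user labels two of four segments correctly, and one of their two "functional" labels matches the ground truth, so both measures come out to one half.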

Figure 6: Box plots show the distribution of task completion times by condition. The more complex the model, the more time users save using the automatic classification of functional segments.

Figure 7: Box plots show the distribution of classification accuracy by condition. As the complexity of the model increased, the automatic classification helped users identify functional segments more accurately.

Table 4: T-test comparison of classification accuracy within subjects. All values are significant.

The participants generally had a positive experience stylizing models with Style2Fab. P7 said in their open-ended interview that “being able to know if it is best as decorative or functional for areas I am uncertain of is helpful. It felt like I was approving choices rather than making them”. P3 said “highlighting and automation made the process more precise”, which according to P4 “makes it easier to prepare components for [...] mesh editing”. Discussing the segmentation results, P5 also commented that “the segmentation of parts is much more uniform with Style2Fab”, while P6 said “models were usually segmented well, but the results could be better in some more complicated models”. P8 also said, “it usually got things right, but segmentation was too broad sometimes”. Commenting on the experience with the Automatic version, P6 said “this way I don’t have to think through each one, I can take the suggestion and say yes or no”. Overall, participants enjoyed stylizing 3D models with Style2Fab because the functionality-aware segmentation method made them confident their personalized models would work post-fabrication.

In summary, the results of the user study demonstrate significant differences between the automatic and manual conditions in terms of the time taken to process models. The analysis shows that Style2Fab’s support is more beneficial as the complexity of the model increases, supporting the effectiveness of the proposed functionality-aware segmentation method.

DEMONSTRATIONS

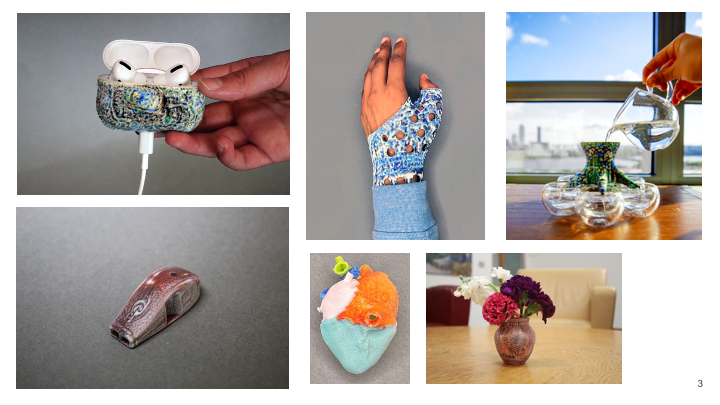

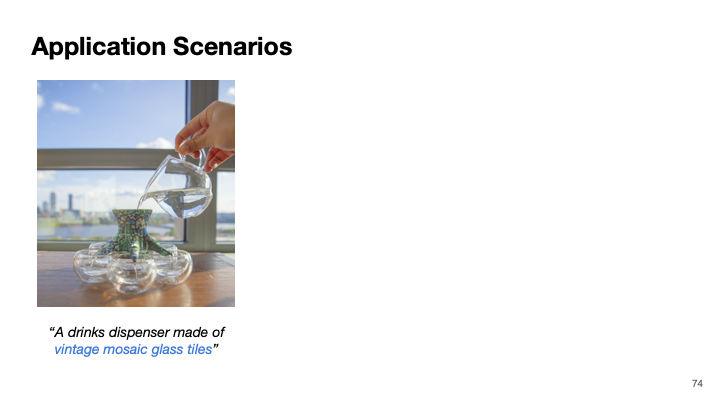

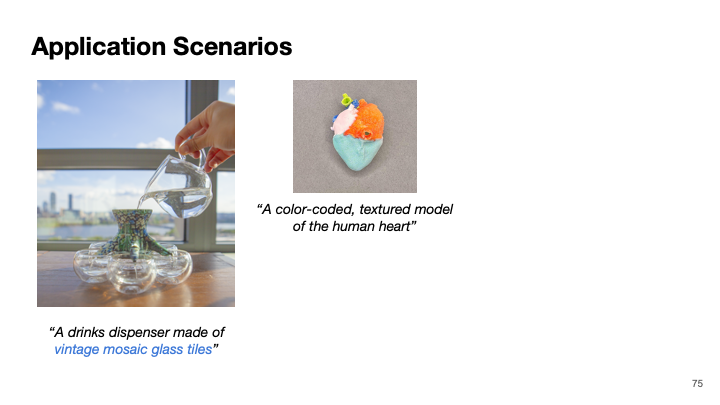

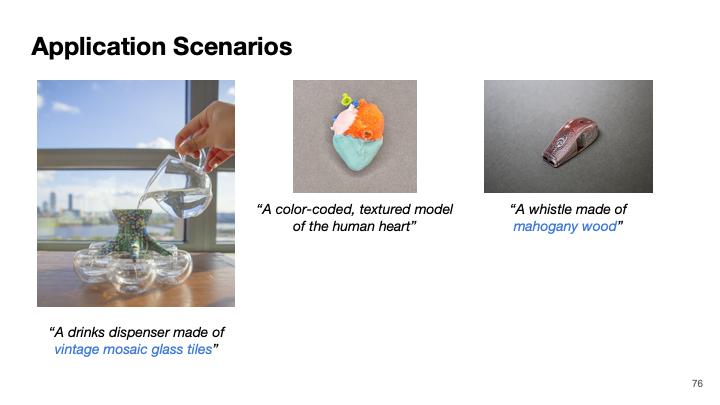

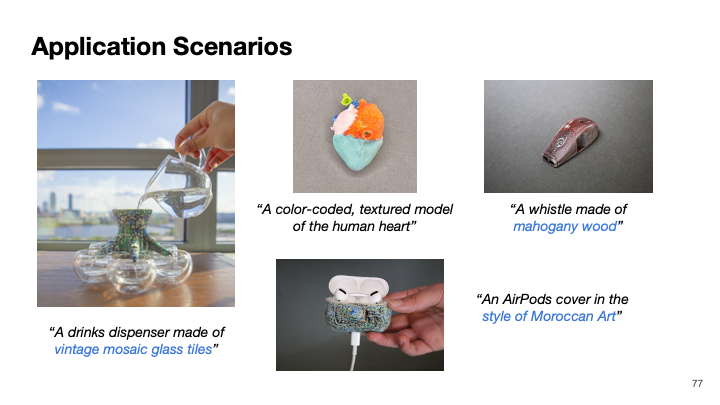

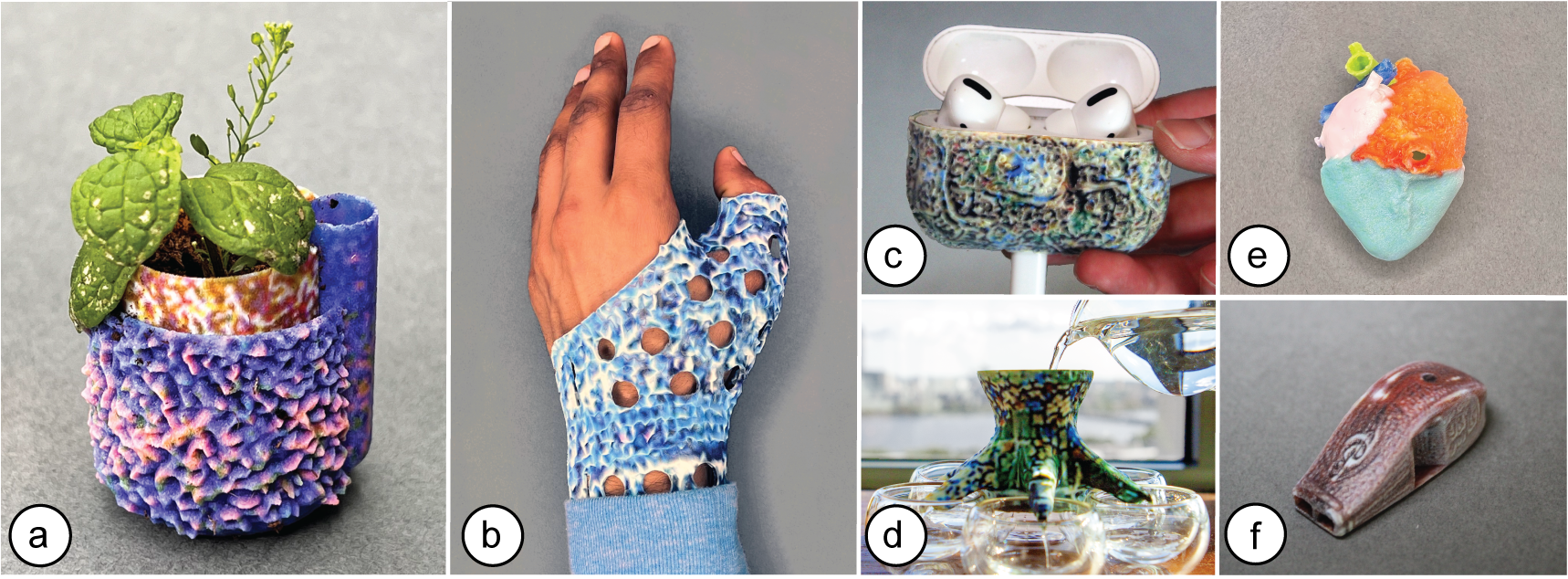

In this section, we showcase Style2Fab’s functionality-aware stylization through six application scenarios across three categories: Home Decor, Personalized Health Applications, and Personal Accessories. These are all examples of Task-Related models, and they highlight the versatility of tools that use functionality-aware segmentation to personalize models.

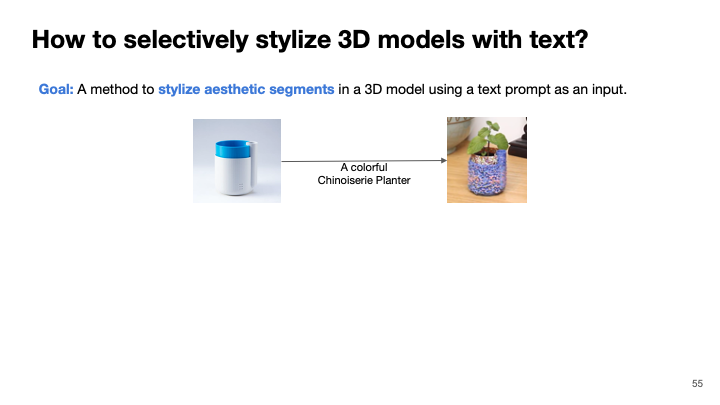

Home Interior Design

Interior design is a popular domain for personalized fabrication. Here, we demonstrate customizing a Self-Watering Planter³, a Task-Related model with two components that has both internal and external functionality. The internal functionality for this model relates to the assembly of the pot and reservoir, while externally functional segments include the base and watering cavity. Using Style2Fab, we segmented the model, verified the functional aspects, and applied the “rough multi-color Chinoiserie Planter” style. The fabricated model showcases the desired aesthetics without compromising its self-watering capabilities or stability (Figure 8a). Another home decor example is the Indispensable Dispenser⁴, a drink dispenser that distributes liquid into six containers via interior cavities and spouts. We used Style2Fab to preserve the functional segments (base and interior cavities) and applied a “colorful water dispenser made of vintage mosaic glass tiles” style to the aesthetic segments. The resulting dispenser, shown in Figure 8d, retains its functionality while exhibiting the desired visual appearance.

Medical/Assistive Applications

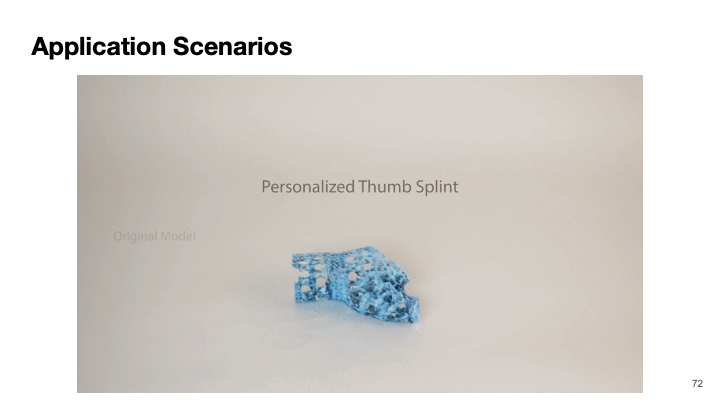

“Medical Making” and “DIY Assistive Technology” are emerging and critical domains for personalized fabrication by non-technical experts. Social accessibility research shows that considering both the aesthetic and functional features of medical/assistive devices increases their adoption. However, individuals with disabilities and their clinicians may not have the time or expertise to personalize devices. We first demonstrate stylizing a geometrically complex thumb splint sourced from Thingiverse⁵ to appear as “A beautiful thumb splint styled like a blue knitted sweater.” Like the participant from Hofmann et al., we wanted the model to blend into the sleeve of a sweater (Figure 8b). Our functionality-aware segmentation method preserved the smooth internal surface that contacts the skin and the holes that increase breathability, while the exterior is stylized and attractive.

Our second example stylizes a tactile graphic of a human heart to apply unique textures to each region of the heart. This would make identifying each region easier for a blind person who accesses the model through touch. Our automatic segmentation and classification method identifies different segments of the heart that can be stylized with different textures (Figure 8e).

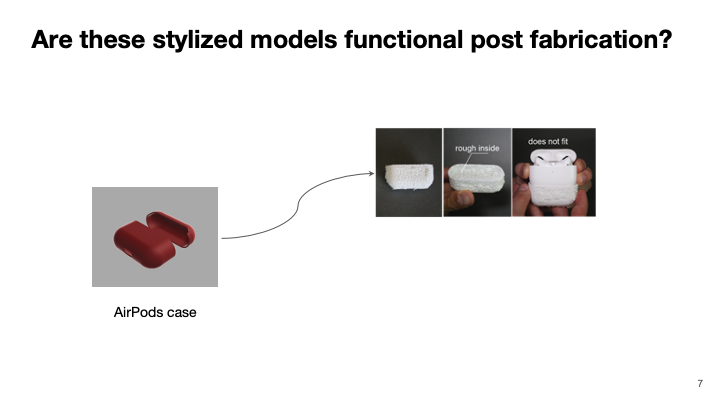

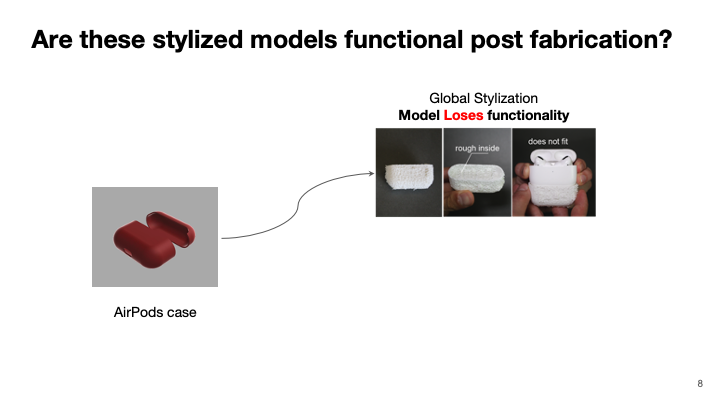

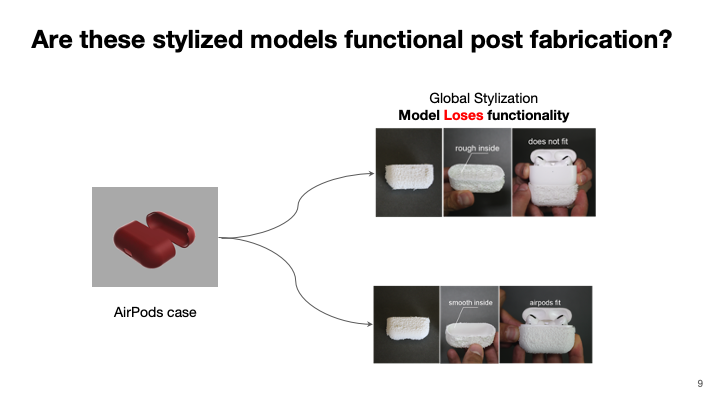

Personalizing Accessories

In this application scenario, we demonstrate how functionality-aware segmentation can be used to personalize accessories, such as an AirPods cover and a whistle. We first demonstrate Style2Fab’s personalization capabilities using a Thingiverse AirPods case. The interface segmented and preserved the functional aspects, including the internal geometry and the charging cable hole, even though our classification method has no specific information about the external objects the cover interacts with. We stylized this model with the prompt: “A beautiful antique AirPods cover in the style of Moroccan Art” (Figure 8c). The resulting model fits the AirPods Pro case and allows charging while featuring Moroccan Art-inspired patterns. Next, we applied styles to the popular V29 Whistle from Thingiverse without compromising its acoustic functionality. The system preserved the whistle’s resonant chamber and mouthpiece while styling the exterior with the prompt: “A beautiful whistle made of mahogany wood”. The functionality-aware styled whistle sounds like the original whistle (Figure 8f), while the globally-styled whistle lost its functionality due to internal geometry manipulation.

DISCUSSION

Our functionality-aware segmentation method differs from prior work because it assumes that the user will struggle to translate their understanding of the functionality of a model into key parameters of a segmentation method and specific labels for each segment. We return to the scenario of Alex stylizing her self-watering planter. Alex can recognize the pieces of the model that contribute to the self-watering functionality and the pieces that she wants to stylize. But translating this into parameters of a segmentation method is non-trivial – Alex would either have to go through trial and error in adjusting the hyperparameters for segmentation, or tediously highlight the segments on the vertex-level. Our functionality-aware segmentation method provides a semi-automatic method for separating aesthetic and functional segments allowing Alex to stylize her model while retaining the functionality.

We designed our functionality-aware segmentation method to be modular and adaptable to modifications to the underlying methods. For instance, Style2Fab can be augmented with a more nuanced classifier to allow functionality beyond external and internal contexts. In the next sections, we identify limitations and opportunities to improve on the concept of functionality-aware segmentation to adapt it to more complex and diverse domains. We expect this method could be improved by broadening our definition of functionality, creating a larger dataset of labeled classes of functionality, and using more nuanced evaluations of segment similarity.

Figure 8: Application scenarios for Style2Fab (all models sourced from Thingiverse): (a) A multi-component self-watering planter styled as “A rough multi-color Chinoiserie Planter”. (b) A personalized Thumb Splint styled like “a blue knitted sweater”. (c) A personalized AirPods cover “in the style of Moroccan Art”. (d) A Drinks Dispenser model styled as “made of vintage mosaic glass tiles”. (e) A color-coded, textured educational model of the human heart. (f) A functional whistle styled as “A beautiful whistle made of mahogany wood”.

A Broader Definition of Functionality

Form and function are deeply related but do not have a one-to-one relationship; many forms can perform the same task, and a single form can serve many tasks. In our approach, we defined functionality as a topology-dependent property and ignored usage context (e.g., hanging a vase vs. setting it on a table). In our formative study of Thingiverse models, the annotators inferred a specific context when labeling each model’s relationship between form and function. This is reliable because each model has specific affordances (e.g., a flat base affords resting on a flat surface). However, makers are creative and often play with the affordances of models to use them in new ways.

A more nuanced approach would be to classify specific affordances. Our taxonomy presents a high-level set of affordances for interacting with external and internal contexts. However, creating a wider set of affordance-specific labels presents a trade-off: some affordances are rare, and most classification methods struggle to label rare events. This calls for a wider set of functionality labels, beyond our taxonomy of external and internal functionality. Visual affordance is a crucial problem in robotics, with new approaches and datasets bringing insights into the domain. One such example is 3DAffordanceNet, which contains annotated data for 23 object categories. The essential difference between curated deep learning datasets and online sharing platforms is the long-tail distribution of possible designs. Although the classes in these datasets also appear in online repositories, open and creative sharing platforms allow users to share novel and unique ideas, which runs counter to the standardization principle of these datasets. Thus, there is a need for novel data collection and analysis methodologies that will allow us to apply deep learning methods to analyze fabrication-oriented data.

Limits of Topological Similarity

In addition to limiting our classification to two types of functionality affordances, our classification approach relies on a measure of segment and model similarity that only accounts for topological features. We selected this method because it is robust, computationally efficient, and does not rely on expensive human-generated labels. However, other measures of similarity or a measure that accounts for multiple factors may have produced a more effective classifier. More refined datasets, such as semantic labels of functionality for segments, along with a larger number of models would provide a more robust and informative approach to functionality-aware segmentation.

Opportunities for Richer Data Sets

We can improve our functionality-aware classification by expanding our functionality data set and applying nuanced similarity metrics. However, like any other classification domain, this requires either larger sets of unlabeled real-world data or better labels and metadata for existing samples. Unfortunately, 3D modeling and printing domains do not currently lend themselves to creating these types of data sets. Ideally, we could apply more advanced deep-learning methods to classify functionality. However, to account for the diversity of real-world models, this requires data sets of 3D models that are orders of magnitude larger than our current data set.

There are multiple opportunities to curate or generate functionality information for 3D models. Makers could supply better labels by supplying well-documented source files for their meshes, but this requires a dramatic shift in the practices of these communities. More models are shared every day and new sub-domains of making are emerging with additional labels (e.g., clinical reviews on the NIH 3D print exchange). Alternatively, the creation of datasets for 3D printing and release of novel approaches to 3D model generation presents an opportunity to use latent representations of 3D models to generate meta-data for functionality.

CONCLUSION

In this paper, we propose a new approach to functionality-aware segmentation and classification of 3D models for 3D printing that allows users to modify and stylize 3D models while preserving their functionality. This method relies on an insight gained from a formative study of 3D models sourced from Thingiverse: that functionality can be defined by external and internal contexts. We present our segmentation and classification method and evaluate it using functionality-labeled models from our noisy data set of real-world Thingiverse models. We evaluate the utility of functionality in the context of selective styling of 3D models by building the Style2Fab interface and evaluating it with 8 users. This work speaks more broadly to the goal of working with generative models to produce functional physical objects and empowering users to explore digital design and fabrication.