Publication

Dishita Turakhia, Yini Qi, Lotta-Gili Blumberg, Andrew Wong, Stefanie Mueller.

Can Physical Tools that Adapt their Shape based on a Learner's Performance Help in Motor Skill Training?

In Proceedings of TEI ’21.

DOI PDF Video Talk Slides Video Press

- MIT News

- Digital Trends

- ACM News

- Innovation Toronto

- Science Wiki

- TechXplore

- Interesting Engineering

- News Atlas

- Northern Territory Technology News (Australia)

- News8Plus

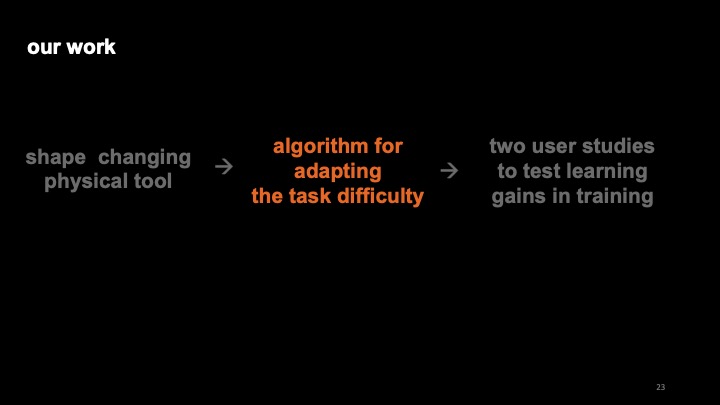

Can Physical Tools that Adapt their Shape based on a Learner's Performance Help in Motor Skill Training?

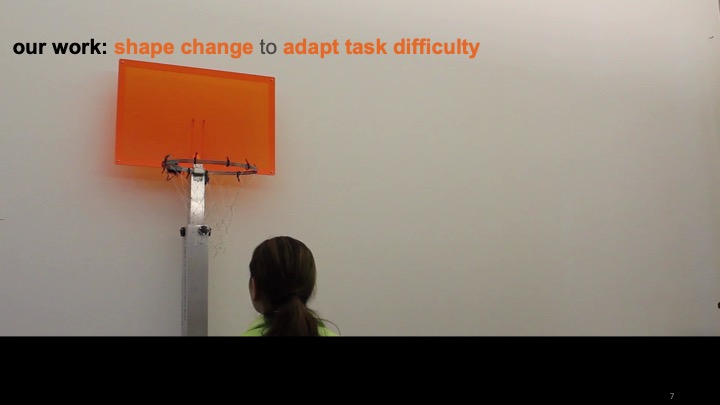

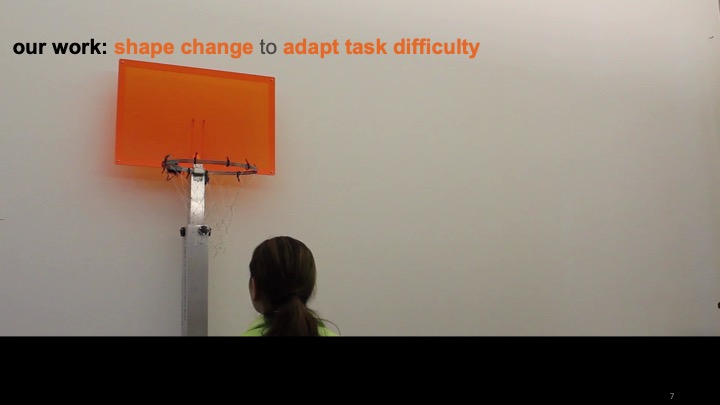

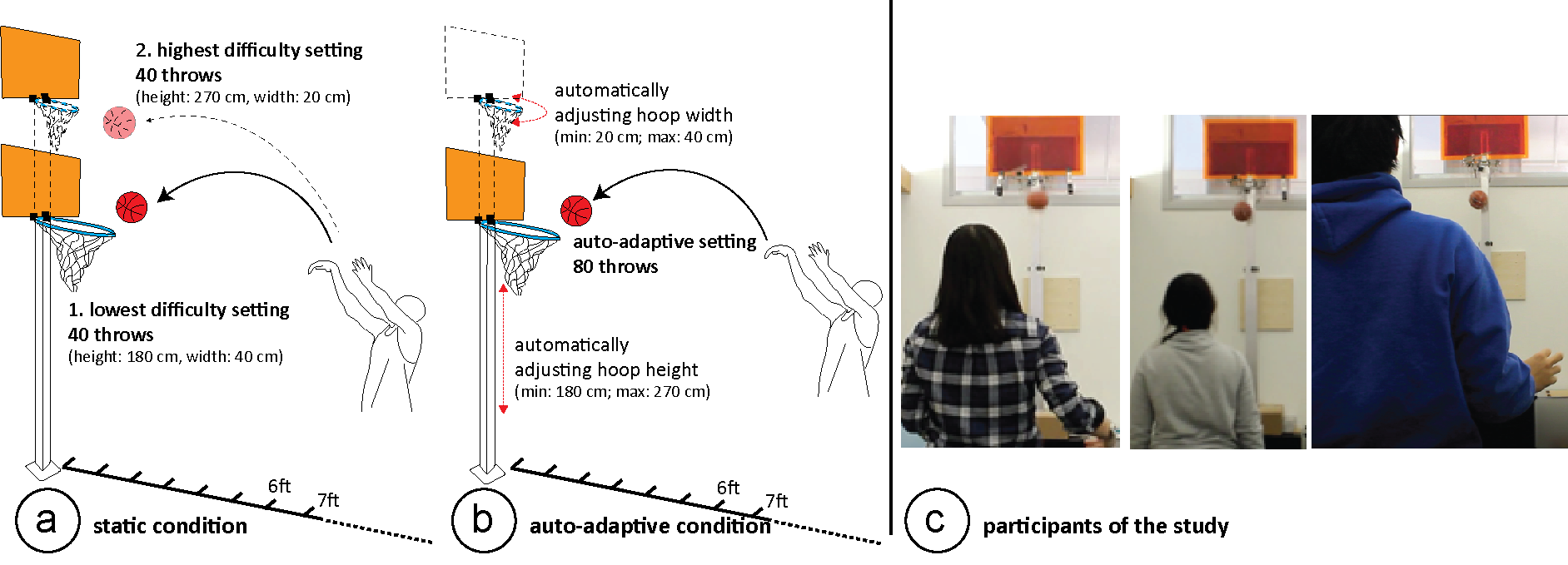

Figure 1. We investigate if adaptive learning tools that automatically adapt their shape to adjust the task difficulty based on a learner's performance can help in motor-skill training. To this end, we built (a) a study prototype in the form of an adaptive basketball stand that can adjust its hoop size and basket height. Our studies show that when the tool adapts automatically, training leads to significantly higher learning gains in comparison to training with (b) a static tool and (c) a manually adaptive tool for which the learners choose the difficulty level themselves.

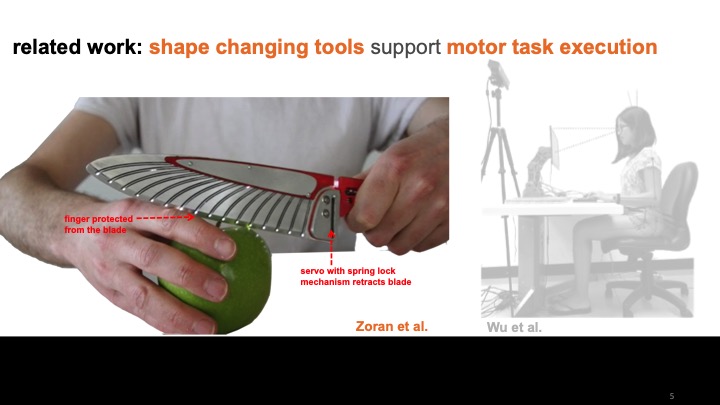

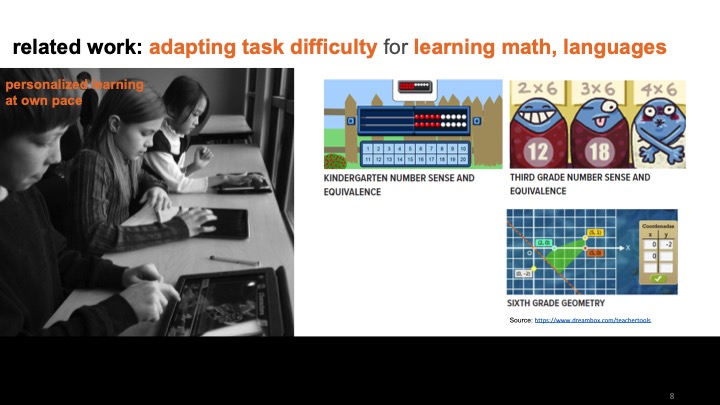

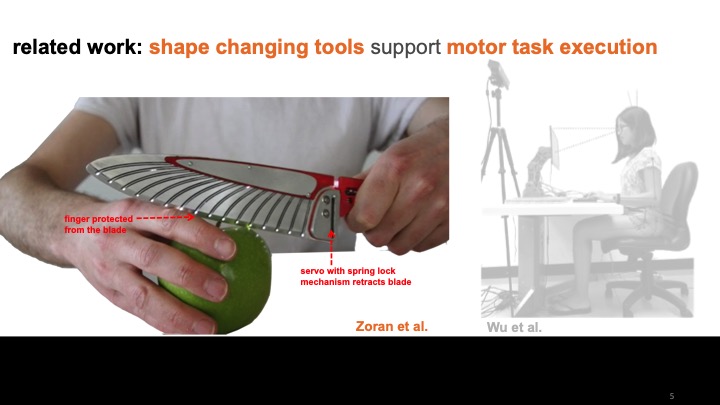

Adaptive tools that can change their shape to support users with motor tasks have been used in a variety of applications, such as to improve ergonomics and support muscle memory. In this paper, we investigate whether shape-adapting tools can also help in motor skill training. In contrast to static training tools that maintain task difficulty at a fixed level during training, shape-adapting tools can vary task difficulty and thus keep learners' training at the optimal challenge point, where the task is neither too easy, nor too difficult.

To investigate whether shape adaptation helps in motor skill training, we built a study prototype in the form of an adaptive basketball stand that works in three conditions: (1) static, (2) manually adaptive, and (3) auto-adaptive. For the auto-adaptive condition, the tool adapts to train learners at the optimal challenge point, where the task is neither too easy nor too difficult. Results from our two user studies show that training in the auto-adaptive condition leads to statistically significant learning gains when compared to the static (F_(1,11) = 1.856, p < 0.05) and manually adaptive conditions (F_(1,11) = 2.386, p < 0.05).

INTRODUCTION

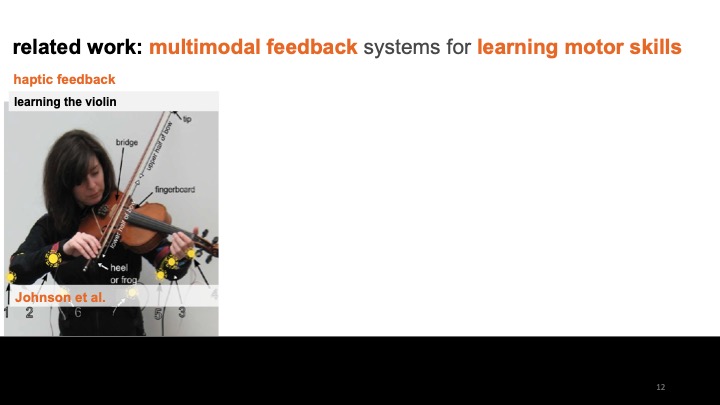

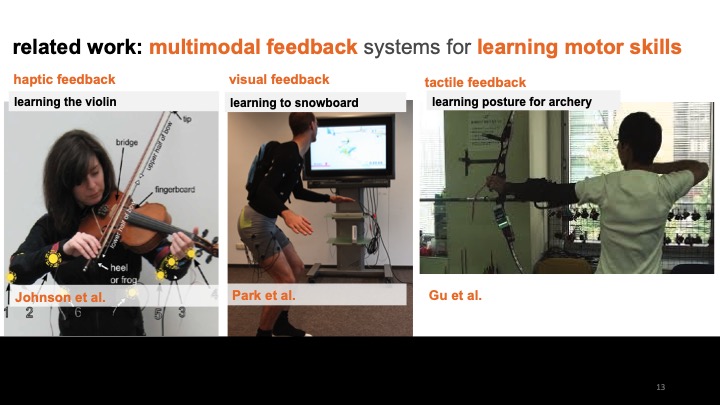

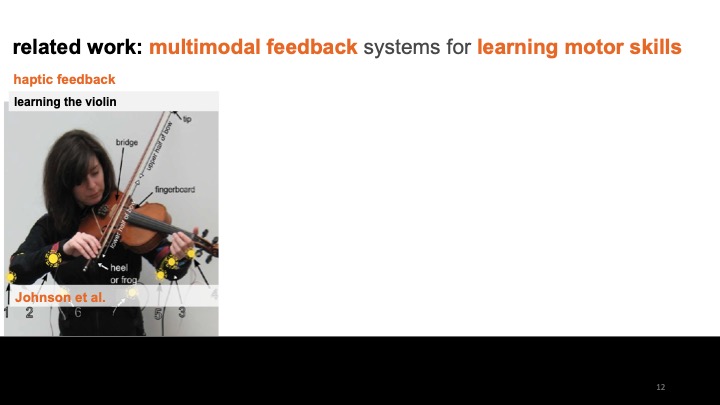

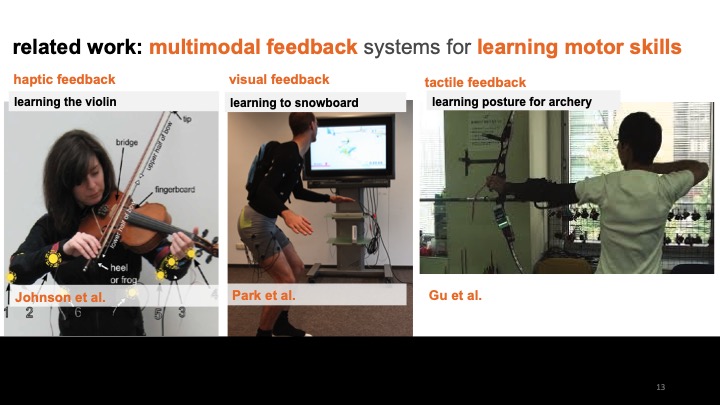

Research in HCI has shown that shape-adapting tools can support users in executing motor tasks by helping them perform the task correctly (e.g., actuated office furniture that improves ergonomics). However, it is unclear whether such automatically shape-adapting tools can also help train users in motor skills so that, once they have fully acquired a skill, they no longer need additional support when performing the motor task.

In our work, we investigate if physical tools that automatically adapt their shape can help users in motor skill training. In particular, we study how training with an automatically adaptive training tool compares against the current methods of training, which either use static or manually adaptive tools.

To study this, we built a study prototype of a basketball stand with adjustable hoop height and width that works under three training conditions:

- static: the basketball stand is at a fixed hoop height and width, and thus the difficulty level does not change over time.

- manually adaptive: users can adjust the difficulty level themselves by activating the actuators (motors) on the stand to change the hoop height and width.

- auto-adaptive: the tool automatically varies the difficulty level by adjusting the hoop height and width based on the user's performance, which is detected using sensors: a switch on the net and a piezo sensor on the board.

To determine when to adapt the study prototype in the auto-adaptive training condition, we use an adaptation algorithm that maintains the training difficulty at the optimal challenge point, at which the task is neither too difficult nor too easy for the user. Studies in motor skill learning have shown that when coaches train learners at the optimal challenge point, learners have the maximum potential learning benefit. To maintain the difficulty level at the optimal challenge point, the algorithm measures the user's performance and, based on the performance over time, determines whether the tool should adapt to a more difficult setting, adapt to a less difficult setting, or remain at the current difficulty setting.

To test whether training in the auto-adaptive condition leads to higher learning gains than training in the static and manually adaptive conditions, we conducted two user studies with 12 participants each. We then measured the participants' learning gains (i.e., the increase in performance scores) after (1) training on the automatically adaptive tool versus the static training tool, and (2) training on the automatically adaptive tool versus the manually adaptive training tool.

Our study results show significant learning gains in the auto-adaptive training condition compared to the static (F_(1,11) = 1.856, p < 0.05) and manually adaptive conditions (F_(1,11) = 2.386, p < 0.05). For the manually adaptive condition, we found a mismatch between participants' skill levels and their own assessment of which task difficulty was best to train on, resulting in only small learning gains.

Qualitative feedback from the studies showed that users preferred training with auto-adaptive tools over training with static and manually adaptive tools. For instance, P11 in user study 1 said, "adaptive training makes each stage of the training experience more rewarding, so it helped me focus better", and P3 in user study 2 said, "auto-adaptive was easier to use; I didn't have to think about if I set it too easy or too hard".

We thus see a large potential for the use of automatically adaptive training tools in motor skill training, making personalized training accessible to a larger audience that may not have access to an expert trainer.

In summary, we contribute:

- A study prototype of an adaptive basketball hoop that enables training in three conditions: (1) static, (2) manually adaptive, and (3) auto-adaptive. In the auto-adaptive condition, the physical tool automatically adapts to vary the task difficulty based on the learner's performance, so that the task difficulty stays at the optimal challenge point for the learner.

- A study with 12 participants measuring the learning gains of training in the auto-adaptive training condition versus the static training condition with results showing significantly higher learning gains in the auto-adaptive condition (F_(1,11) = 1.856, p < 0.05).

- A study with 12 participants measuring the learning gains of training in the auto-adaptive training condition versus the manually adaptive training condition with results showing significantly higher learning gains in the auto-adaptive condition (F_(1,11) = 2.386, p < 0.05).

BACKGROUND ON VARYING TASK DIFFICULTY

To determine when to adapt the tool so that the task difficulty keeps pace with the learner's increasing skill level, we train learners at the optimal challenge point, which has been shown to lead to higher learning gains. Here, we describe how we translate this approach into the design of our tool.

Task Difficulty

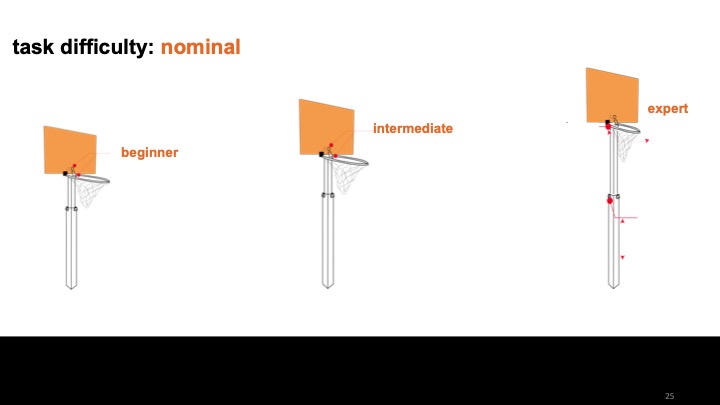

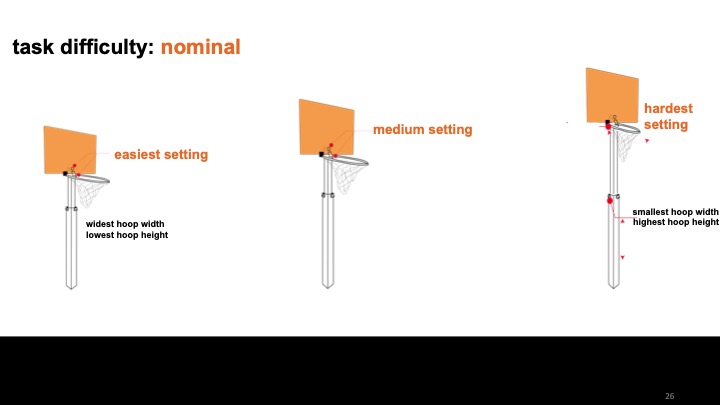

To understand how the task difficulty during training affects the learning of a motor skill, researchers have defined two types of task difficulty: nominal task difficulty and functional task difficulty [Guadagnoli et al.].

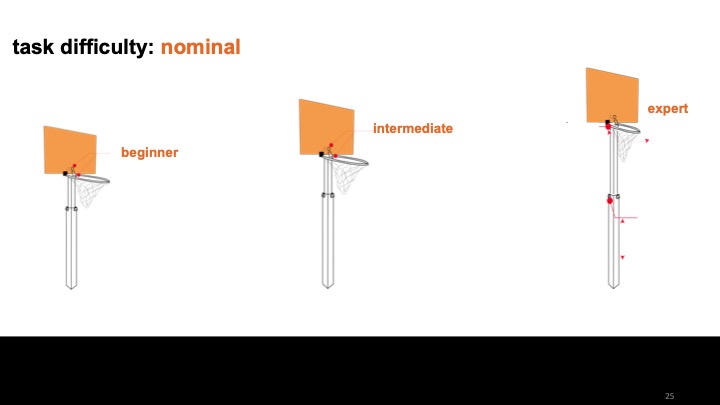

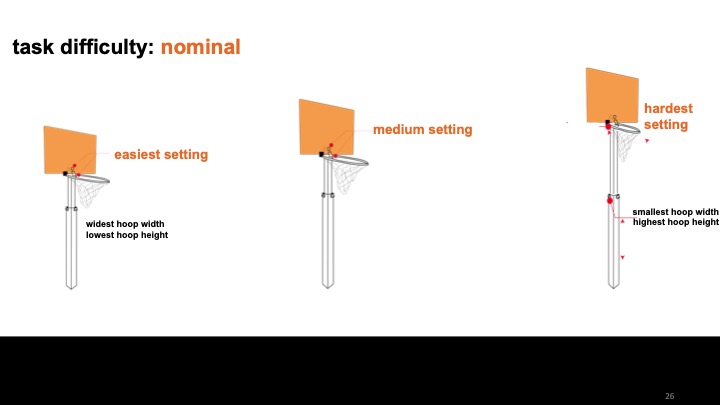

Nominal Task Difficulty (Independent of the Learner)

Nominal task difficulty is the level of difficulty of the task independent of the person executing the task and their skill level. For example, in basketball, it is more difficult to score in a basket that is mounted higher and has a smaller width than in one that is mounted lower and has a larger width, irrespective of who performs the task.

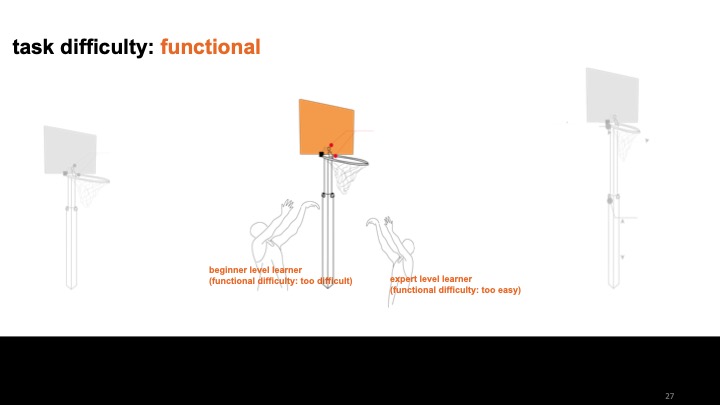

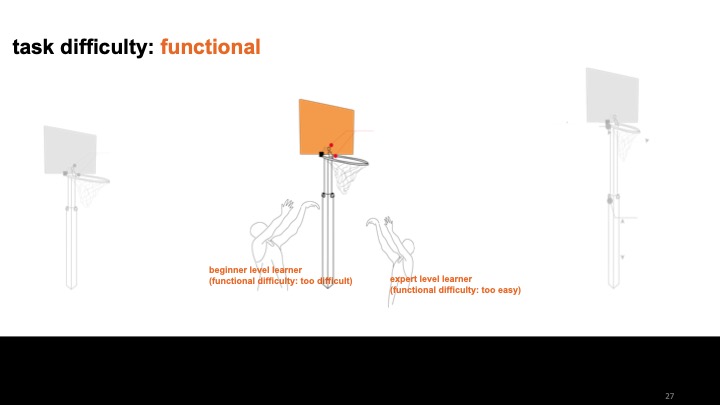

Functional Task Difficulty (Dependent on the Skill Level of the Learner)

Functional task difficulty refers to how challenging the task is in relation to the person executing the task and their skill level. For instance, when throwing a ball at a high basket, the task will typically be more difficult for a beginner with a low skill level than for an expert with a high skill level.

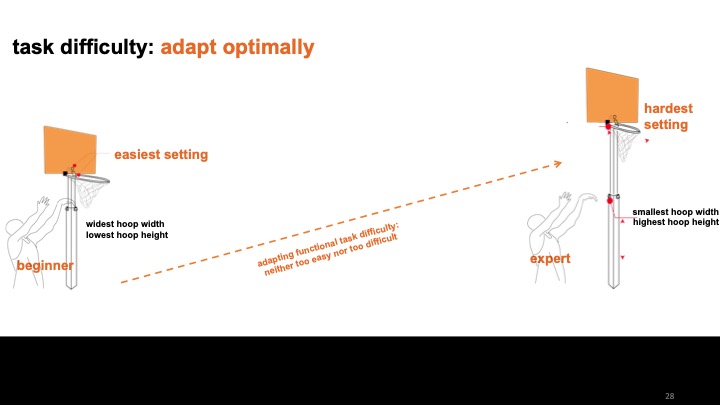

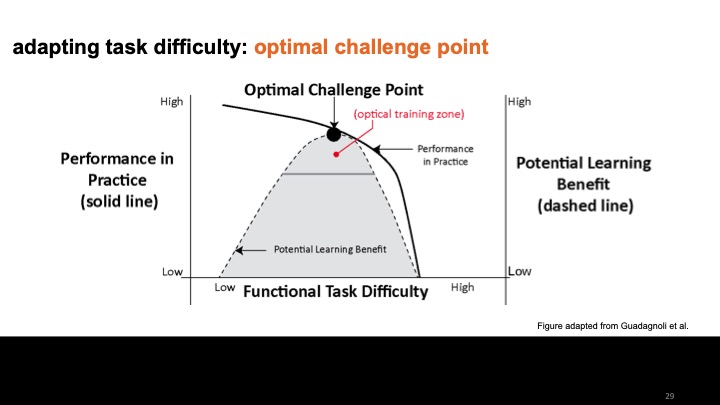

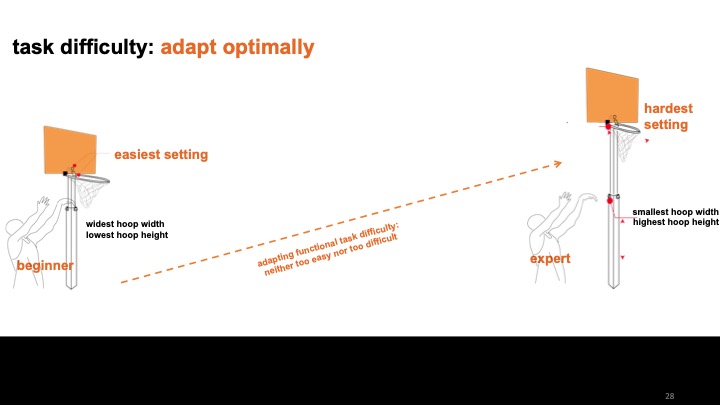

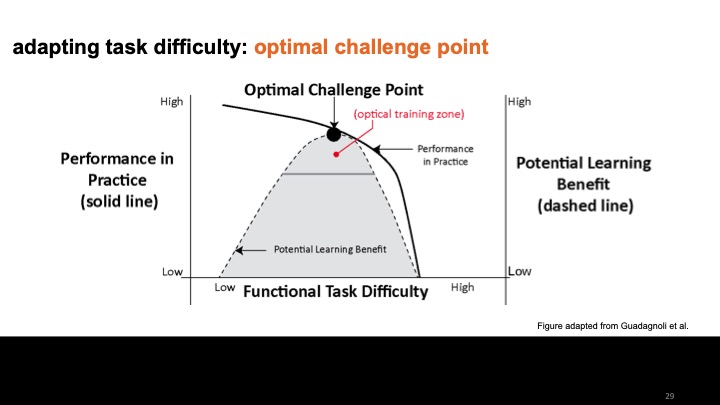

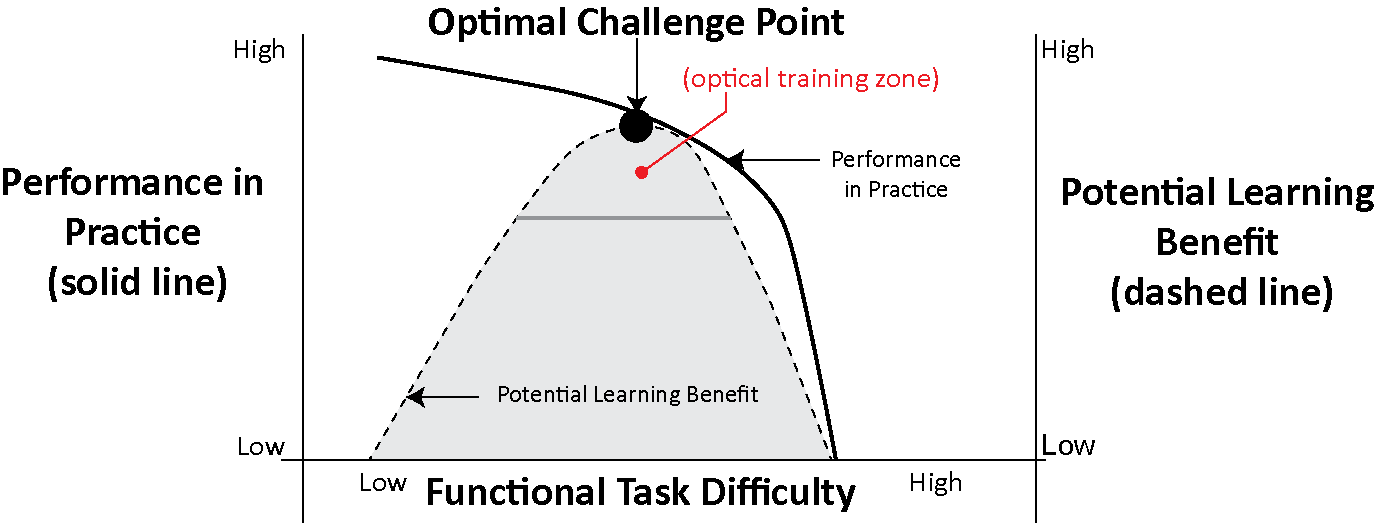

Optimal Challenge Point

Research has shown that when the functional task difficulty is at a level that is neither too difficult nor too easy for the learner, it results in the highest potential learning benefit [Guadagnoli et al.]. This level of functional task difficulty is called the optimal challenge point. Figure 2 illustrates that when the functional task difficulty is too low, i.e., the task is too easy, the learner's performance in practice is high (e.g., every single basket is scored in basketball), but the learner remains underchallenged and the potential learning benefit is low. Conversely, when the functional task difficulty is too high, i.e., the task is too difficult, the learner's performance in practice is low (e.g., no basket is scored), and the learner is overchallenged, so this setup also fails to maximize the potential learning benefit.

Therefore, to have the highest potential learning benefit, the functional task difficulty needs to be at a medium level, i.e., the task at hand should neither be too difficult nor too easy, which is reflected in a medium performance level in practice (e.g., some baskets are scored but not all).
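To make the three regimes of Figure 2 concrete, the sketch below maps a learner's success rate in practice to "too easy", "optimal", or "too hard". The threshold values `too_easy_above=0.8` and `too_hard_below=0.3` and the name `classify_challenge` are hypothetical placeholders chosen for illustration; the text above only states that the optimal challenge point corresponds to a medium performance level.

```python
from enum import Enum


class Challenge(Enum):
    TOO_EASY = "too easy"   # high performance: learner is underchallenged
    OPTIMAL = "optimal"     # medium performance: near the optimal challenge point
    TOO_HARD = "too hard"   # low performance: learner is overchallenged


def classify_challenge(success_rate: float,
                       too_easy_above: float = 0.8,
                       too_hard_below: float = 0.3) -> Challenge:
    """Classify functional task difficulty from a success rate in [0, 1].

    The default thresholds are hypothetical and not taken from the study.
    """
    if not 0.0 <= success_rate <= 1.0:
        raise ValueError("success_rate must be in [0, 1]")
    if success_rate > too_easy_above:
        return Challenge.TOO_EASY
    if success_rate < too_hard_below:
        return Challenge.TOO_HARD
    return Challenge.OPTIMAL


# Every basket scored, no basket scored, and some baskets scored.
print(classify_challenge(1.0).value)  # too easy
print(classify_challenge(0.0).value)  # too hard
print(classify_challenge(0.5).value)  # optimal
```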

Figure 2. The optimal challenge point is the level of functional task difficulty at which the task is neither too hard nor too easy, which allows for the largest potential learning benefit. Figure adapted from Guadagnoli et al.

Adjusting Task Difficulty to Maintain the Optimal Challenge Point

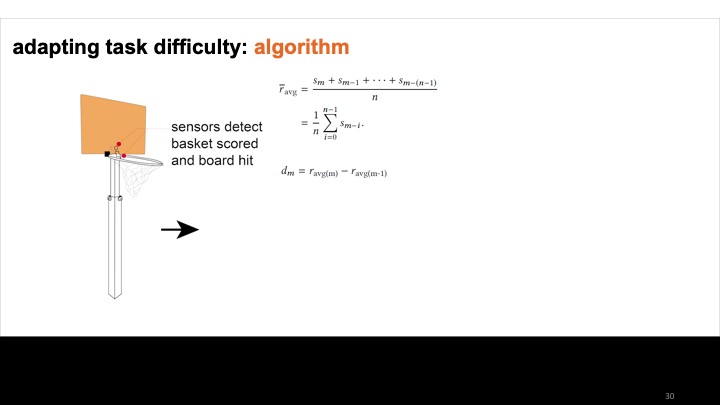

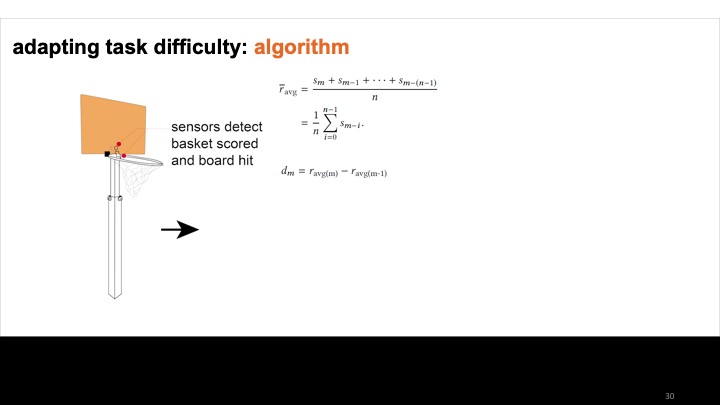

We developed an algorithm that allows learners to progress from a beginner level (low nominal task difficulty) to an expert level (high nominal task difficulty) by training at a functional task difficulty set at the optimal challenge point at all times. The algorithm accomplishes this in the following steps:

- Step 1. Monitoring Performance in Practice / Functional Task Difficulty: The algorithm monitors the learner's performance by collecting data from the integrated sensors and computes the learner's average score over time. Based on this score, we determine whether the difficulty level is too difficult, too easy, or optimal.

- Step 2. Increase Nominal Task Difficulty by Adapting the Tool: If the score is high (functional task difficulty too low), our algorithm increases the nominal task difficulty by adjusting the tool. In basketball, for example, the algorithm can increase nominal task difficulty by raising the stand and making the hoop smaller.

- Repeat Steps 1 and 2 Until the Highest Nominal Task Difficulty Is Reached (Expert Setting): Our algorithm repeats steps 1 and 2, i.e., monitoring performance in practice and increasing the nominal task difficulty by adapting the tool, until the highest nominal task difficulty setting is reached. If the functional task difficulty is low at the highest nominal task difficulty, i.e., if performance is high in the expert setting, the learner has fully mastered the skill.
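The steps above can be sketched as a loop over discrete blocks of attempts. This is a simplified illustration, not the authors' implementation: the block size of 4 attempts, the 0.75 "performance is high" threshold, the 10 evenly spaced nominal levels, and scoring each attempt as 1 (basket) or 0 (no basket) are all assumptions, and the function name `run_adaptation` is hypothetical. As in the steps above, this version only ever increases nominal difficulty; the study's algorithm, as described in the paper, also covers a running-average variant and a derivative check.

```python
from typing import Iterable, List, Tuple


def run_adaptation(scores: Iterable[int],
                   num_levels: int = 10,
                   block_size: int = 4,
                   high_threshold: float = 0.75) -> Tuple[List[int], bool]:
    """Return the nominal level used for each attempt and a mastery flag.

    scores: 1 if the attempt scored a basket, else 0 (assumed scoring).
    Level 0 is the easiest setting; level num_levels - 1 is the expert setting.
    """
    level = 0
    block: List[int] = []
    levels_used: List[int] = []
    mastered = False
    for score in scores:
        levels_used.append(level)
        block.append(score)
        if len(block) < block_size:
            continue
        # Step 1: average performance over the completed block of attempts.
        average = sum(block) / block_size
        block.clear()
        # Step 2: high performance means functional difficulty is too low.
        if average >= high_threshold:
            if level == num_levels - 1:
                mastered = True  # high performance in the expert setting
            else:
                level += 1       # raise nominal task difficulty by one step
    return levels_used, mastered


levels, mastered = run_adaptation([1] * 12, num_levels=3)
print(levels)    # [0, 0, 0, 0, 1, 1, 1, 1, 2, 2, 2, 2]
print(mastered)  # True
```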

STUDY PROTOTYPE

While a wide range of skills can be used to study motor skill learning, such as learning to ride a bike or to skateboard, we chose basketball as an example because the task of shooting balls into the hoop requires only a short amount of time (each shot takes around 3-4 seconds). This allows us to collect more data points in our user studies (220 shots per participant within an hour of study).

Design of the Study Prototype

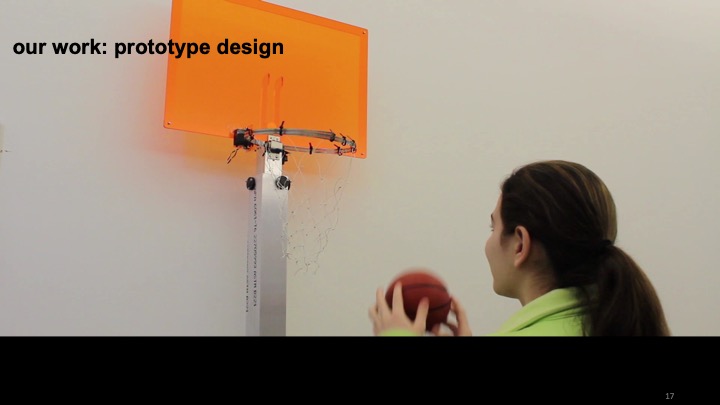

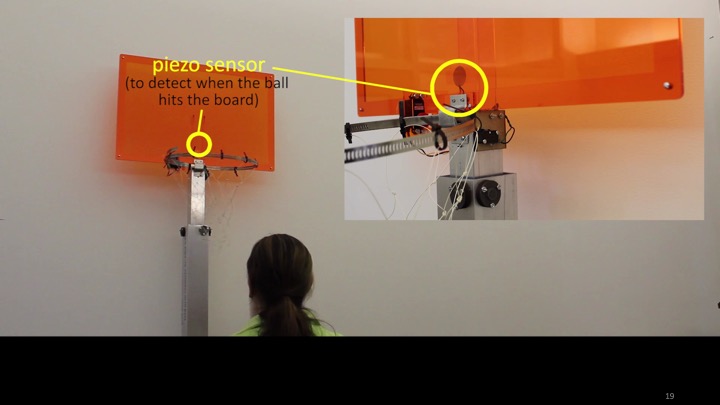

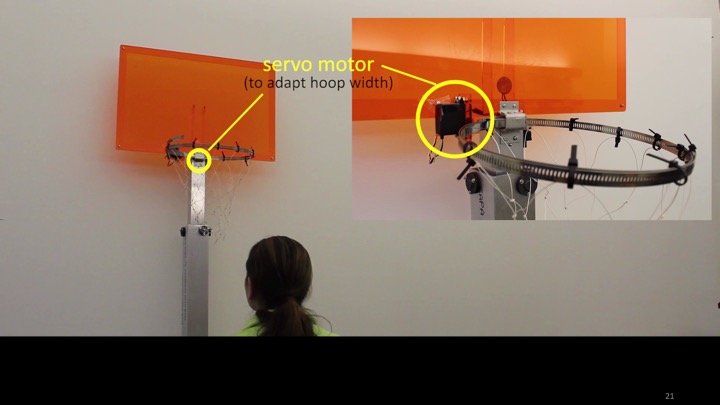

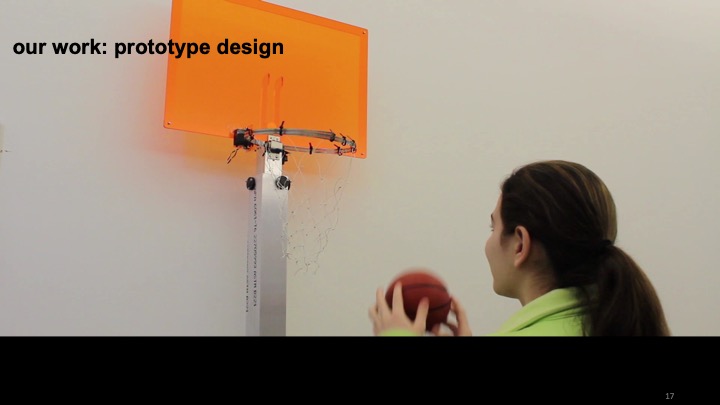

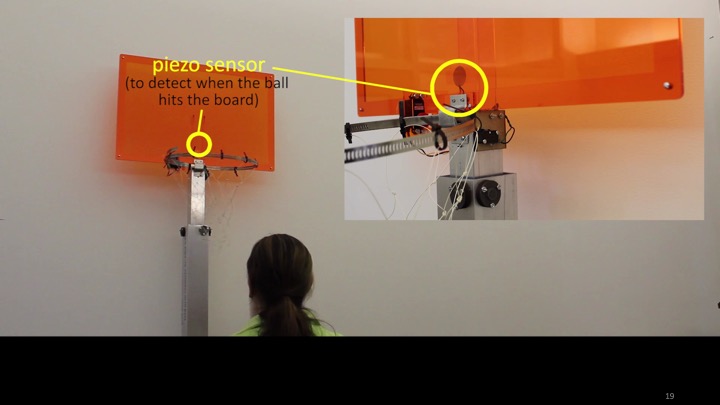

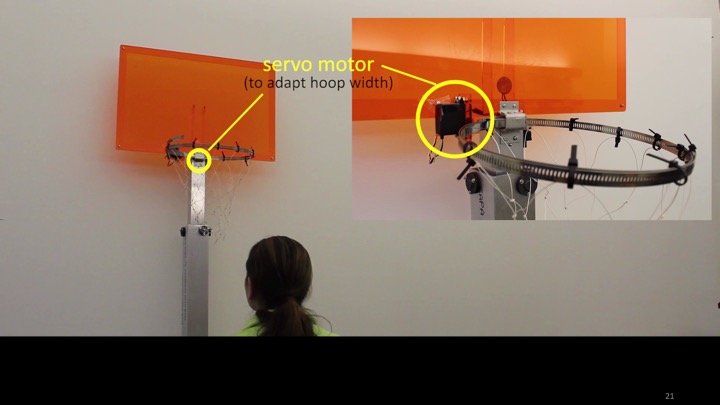

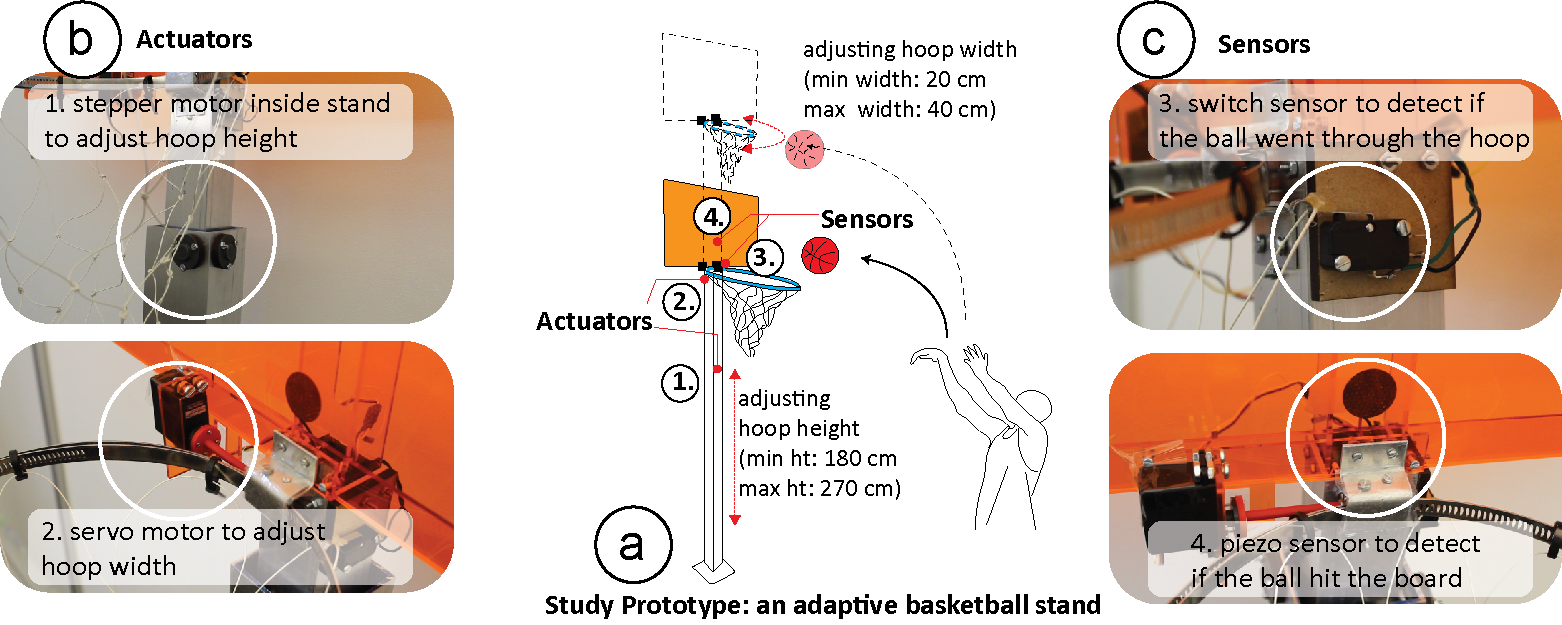

Figure 3a shows the design of the final study prototype, a basketball stand that we used for all three study conditions. The study prototype has an adjustable hoop height and width, i.e., the hoop can widen and narrow, and the stand can raise or lower the hoop. It is equipped with (1) actuators to adjust the hoop height and width (Figure 3b) and (2) sensors to detect when the ball hits the board or goes through the hoop (Figure 3c).

Figure 3. Study Prototype: (a) Our basketball stand with adjustable hoop height (min height: 180 cm, max height: 270 cm) and hoop width (min width: 20 cm, max width: 40 cm) is mounted with (b) actuators, i.e., a stepper and a servo motor for adaptation, and (c) sensors, i.e., a switch and a piezo sensor to detect whether the ball went through the hoop or hit the board.

Actuators

To adapt the hoop height, we use a stepper motor (Figure 3b top, Figure 4 left) integrated with the base of the stand that lowers or raises the hoop. Similarly, to adapt the hoop width, we use a servo motor (Figure 3b bottom, Figure 4 right) integrated with the hoop that increases or decreases the hoop diameter. The hoop height and width can be adjusted continuously between 180 and 270 cm and between 20 and 40 cm, respectively. Thus, the lowest difficulty setting is a 180 cm hoop height with a 40 cm hoop width, and the highest difficulty setting is a 270 cm hoop height with a 20 cm hoop width. For reference, the ball used in the user studies had a diameter of 15 cm.
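Because both axes are adjusted continuously, one simple way to express nominal task difficulty is a single parameter d in [0, 1] that interpolates between the easiest setting (180 cm, 40 cm) and the hardest setting (270 cm, 20 cm). The linear mapping and the names `hoop_setting` and `HoopSetting` are illustrative assumptions; the text does not specify how intermediate settings are spaced.

```python
from dataclasses import dataclass

# Ranges of the study prototype, taken from the description above.
MIN_HEIGHT_CM, MAX_HEIGHT_CM = 180.0, 270.0
MIN_WIDTH_CM, MAX_WIDTH_CM = 20.0, 40.0
BALL_DIAMETER_CM = 15.0


@dataclass(frozen=True)
class HoopSetting:
    height_cm: float
    width_cm: float


def hoop_setting(difficulty: float) -> HoopSetting:
    """Map nominal difficulty in [0, 1] to a hoop height and width.

    0 -> lowest, widest hoop (easiest); 1 -> highest, narrowest hoop (hardest).
    Linear interpolation is an assumption made for illustration.
    """
    if not 0.0 <= difficulty <= 1.0:
        raise ValueError("difficulty must be in [0, 1]")
    height = MIN_HEIGHT_CM + difficulty * (MAX_HEIGHT_CM - MIN_HEIGHT_CM)
    width = MAX_WIDTH_CM - difficulty * (MAX_WIDTH_CM - MIN_WIDTH_CM)
    return HoopSetting(height, width)


print(hoop_setting(0.0))  # HoopSetting(height_cm=180.0, width_cm=40.0)
print(hoop_setting(0.5))  # HoopSetting(height_cm=225.0, width_cm=30.0)
print(hoop_setting(1.0))  # HoopSetting(height_cm=270.0, width_cm=20.0)
# Even the narrowest hoop leaves clearance for the 15 cm ball.
assert hoop_setting(1.0).width_cm > BALL_DIAMETER_CM
```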

Figure 4. Actuators: (left) a stepper motor for adaptation of hoop height (right) a servo motor for adaptation of hoop width.

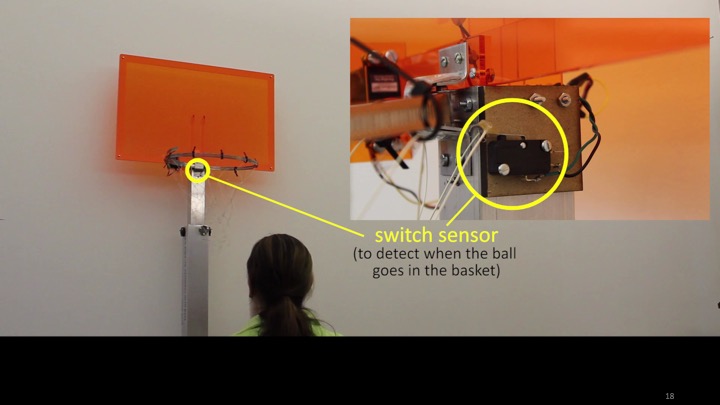

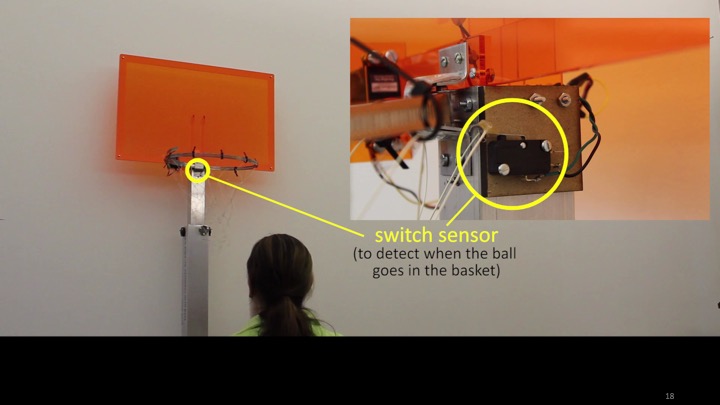

Sensors

We sense the learner's performance by detecting whether the ball went through the hoop, hit the board, or completely missed. To sense whether the ball went through the hoop, we use a switch sensor attached to the net that is activated when the ball passes through the hoop and pulls on the net (Figure 3c top, Figure 5 left). To detect whether the ball hit the board, we use a piezo sensor (SEN-10293 from SparkFun) that picks up the impact of the ball on the board, registered as a reading above 100 units (Figure 3c bottom, Figure 5 right). If the switch is not activated and the piezo reading does not exceed 100 units, we infer that the ball completely missed the basket.

Using these sensors, our prototype can sense three performance outcomes, which are in order from most to least successful: basket scored, board hit, and completely missed.
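The sensing logic above reduces to a small decision rule. The sketch assumes the firmware has already sampled a boolean switch state and a raw piezo reading for each throw; the names `classify_throw` and `Outcome` are hypothetical. It also assumes that a scored basket takes precedence when both sensors fire (for example, a bank shot that hits the board and then goes in), which the text does not state explicitly.

```python
from enum import IntEnum

PIEZO_THRESHOLD = 100  # readings above this count as a board hit (from the text)


class Outcome(IntEnum):
    # Ordered from least to most successful.
    MISSED = 0
    BOARD_HIT = 1
    BASKET = 2


def classify_throw(switch_active: bool, piezo_reading: int) -> Outcome:
    """Infer the outcome of one throw from the net switch and the board piezo."""
    if switch_active:
        # Assumption: a basket wins even if the ball also hit the board first.
        return Outcome.BASKET
    if piezo_reading > PIEZO_THRESHOLD:
        return Outcome.BOARD_HIT
    return Outcome.MISSED


print(classify_throw(True, 40).name)    # BASKET
print(classify_throw(False, 250).name)  # BOARD_HIT
print(classify_throw(False, 100).name)  # MISSED (100 is not above the threshold)
```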

Figure 5. Sensors: (left) a switch sensor to detect if the ball went through the hoop (right) a piezo sensor to detect if the ball hit the board

Using the Study Prototype for All Three Study Conditions

Next, we explain the three study conditions and describe how our study prototype supports each of these conditions:

- In the static condition, the difficulty level is fixed and thus the prototype does not adapt during training. Therefore, no actuation of the tool or sensing of the user's performance is needed.

- In the manually adaptive condition, participants are in control of which difficulty level they want to train on. We provide participants with a keyboard to control the actuators to adjust the hoop width and the hoop height as desired.

- For the auto-adaptive condition, our adaptation algorithm controls the difficulty level. To provide the adaptation algorithm with data on the user's performance, the switch and piezo sensors embedded in the study prototype are used to determine if the ball missed the hoop, hit the board, or successfully went through the hoop. Based on the performance data, our algorithm then determines if the actuators need to adapt the tool to the next difficulty level.

Figure 6. Our study prototype supports three training conditions: (a) static, (b) manually adaptive (participants manually adapt the difficulty), and (c) auto-adaptive (the algorithm automatically adapts the difficulty).

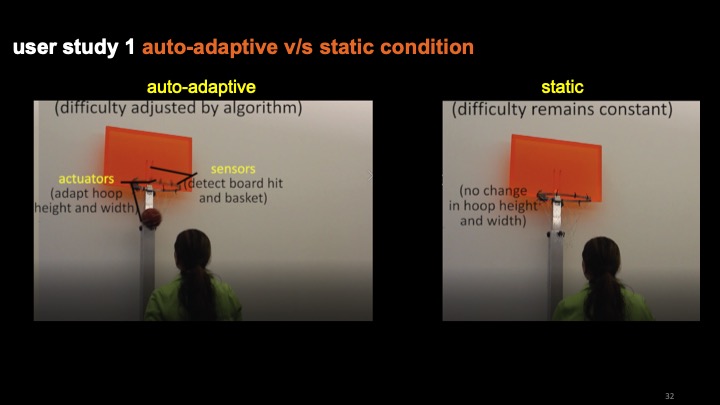

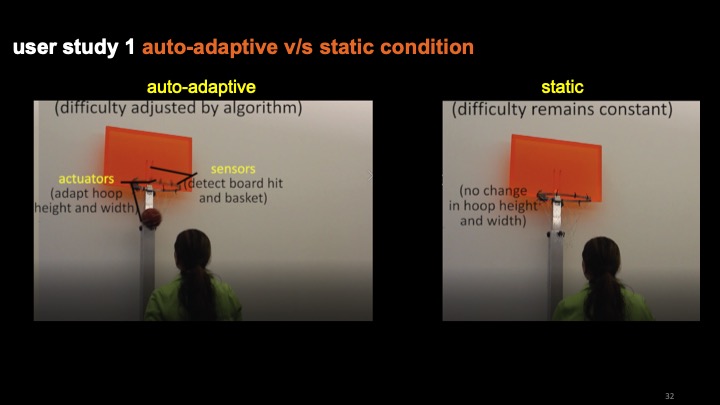

USER STUDY 1: STATIC V/S AUTO-ADAPTIVE TRAINING TOOLS

Study Design

In our first user study, we compare the learning gain and training experience of training with a static tool versus an automatically adaptive tool. We base our user study on a previous study in motor skill learning that compared fixed practice with varied practice, in which a personal trainer adjusted the training, and showed that varied practice leads to a larger learning gain [Kerr et al.].

Figure 7. The setup for user study 1 with two training conditions: (a) static (80 throws: 40 at the lowest and 40 at the highest difficulty setting) and (b) auto-adaptive (80 throws with continuously adapting difficulty). (c) Participants during training, standing at distances determined by a measurement of their initial performance.

Hypothesis: Our hypothesis was that training with an auto-adaptive tool, which adapts the difficulty level based on the learner's performance, will result in a higher learning gain than training with a static tool that does not vary the task difficulty based on the learner's performance during training. A higher learning gain will be reflected in a higher performance score after the same amount of training time in each condition.

Participants: We recruited 12 participants (8 female, 4 male) aged 18 to 31 years (mean = 25, std. deviation = 3.3).

Setup: Participants were asked to stand at a single location and attempt the throws for the entire study from this position. We used our study prototype without any adaptation in the static condition. For the auto-adaptive condition, we deployed our algorithm on the microcontroller integrated with the basketball stand.

Conditions: The study followed a within-subjects design. Participants trained on the following two conditions in randomized order to avoid order effects:

- Static training: In the static training condition, participants attempted the first half of the throws (40) in the lowest difficulty setting (i.e., the largest hoop and the lowest basket height) and the second half (40) in the highest difficulty setting (i.e., the smallest hoop and the highest basket height).

- Auto-adaptive training: In the auto-adaptive training condition, participants started by throwing balls in the lowest difficulty setting (lowest basket height and largest hoop size) and gradually progressed towards the harder settings using the adaptation algorithm shown in Table 1 (of the paper) as they performed a total of 80 throws.

Study Procedure

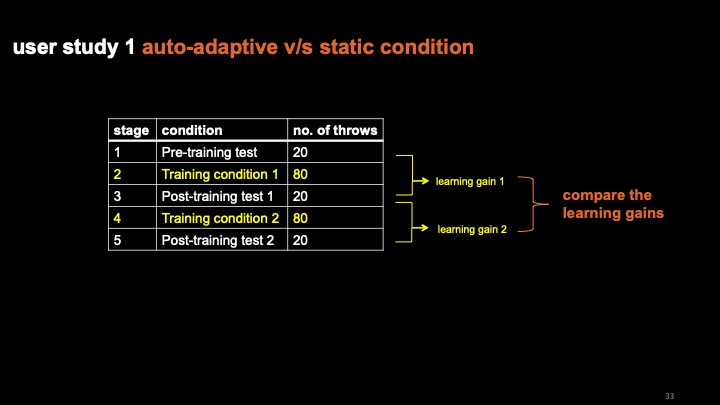

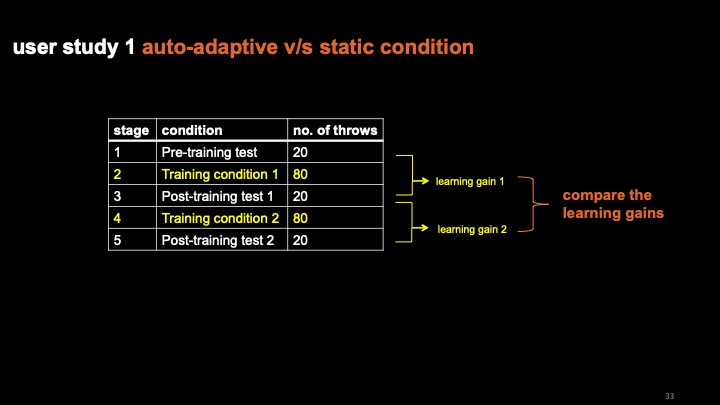

In total, each participant attempted 220 throws over the 5 phases of the study. Participants were randomly assigned either static or auto-adaptive training as training condition #1 and then completed the other training as condition #2. Please refer to the paper for more details.

Measuring Learning Gain: To measure the learning gains for the first condition participants trained in, we compared the participant's score in the pre-training skill assessment test and their score in post-training test #1. To measure the learning gains of the second condition participants trained in, we compared their score in post-training test #1 and their score in post-training test #2. The learning gains were measured by calculating the differences in the respective test scores.
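The counterbalanced scheme above can be written out explicitly: the first condition is credited with the change from the pre-training test to post-training test #1, and the second with the change from post-training test #1 to post-training test #2. In this sketch the function name `learning_gains` and the condition labels are illustrative, and the scores in the usage line are made up for a hypothetical participant.

```python
from typing import Dict, Tuple


def learning_gains(pre: float, post1: float, post2: float,
                   order: Tuple[str, str]) -> Dict[str, float]:
    """Attribute score differences to the condition trained in each phase.

    pre:   score in the pre-training skill assessment test
    post1: score in post-training test #1 (after the first condition)
    post2: score in post-training test #2 (after the second condition)
    order: (first_condition, second_condition)
    """
    first, second = order
    if first == second:
        raise ValueError("the two training conditions must differ")
    return {first: post1 - pre, second: post2 - post1}


# Hypothetical participant who trained static first, then auto-adaptive.
print(learning_gains(6, 8, 12, ("static", "auto-adaptive")))
# {'static': 2, 'auto-adaptive': 4}
```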

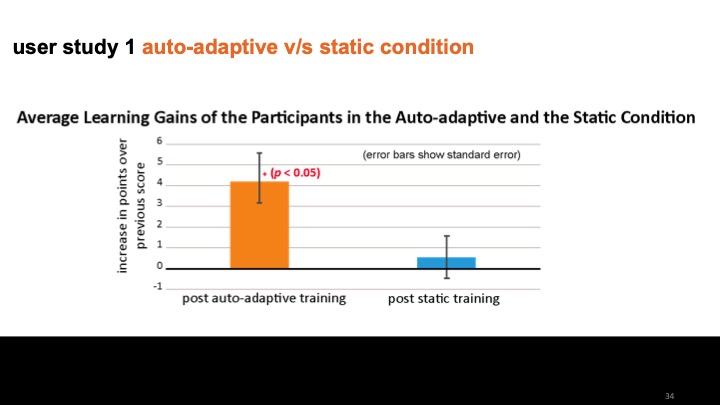

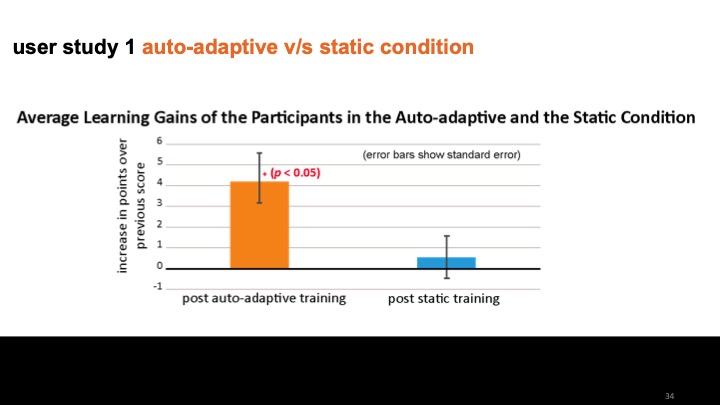

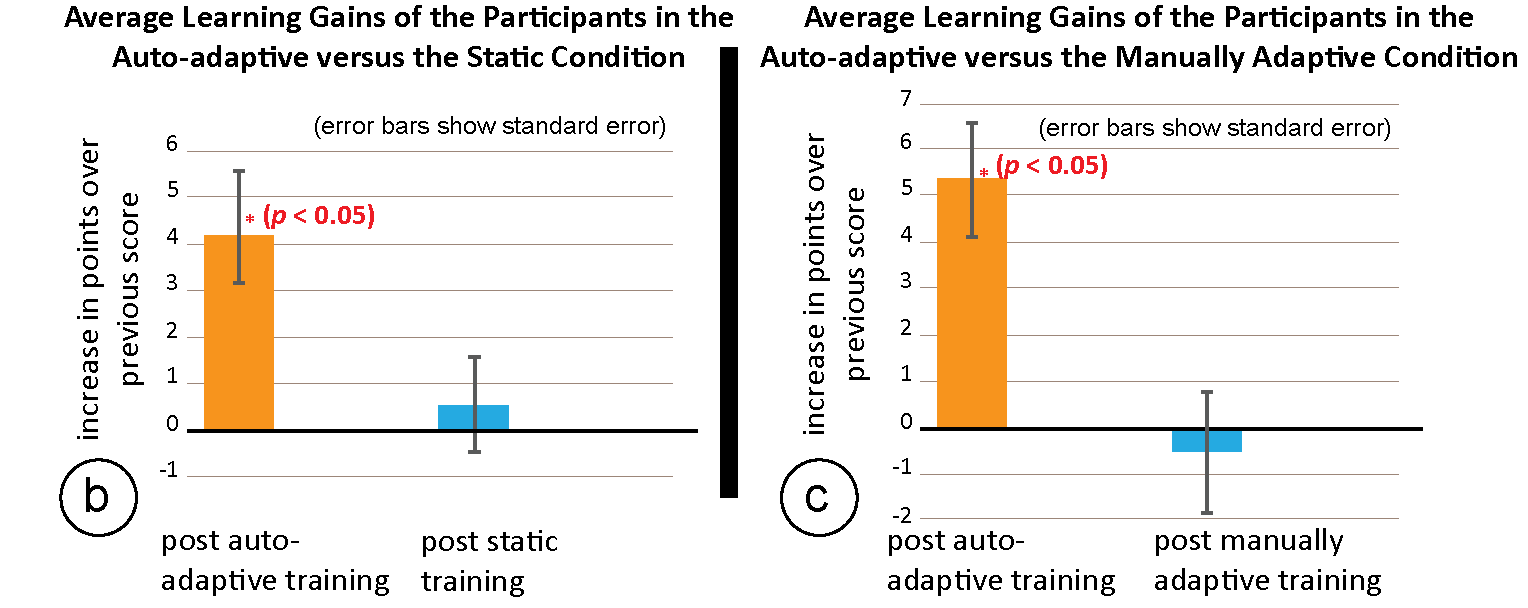

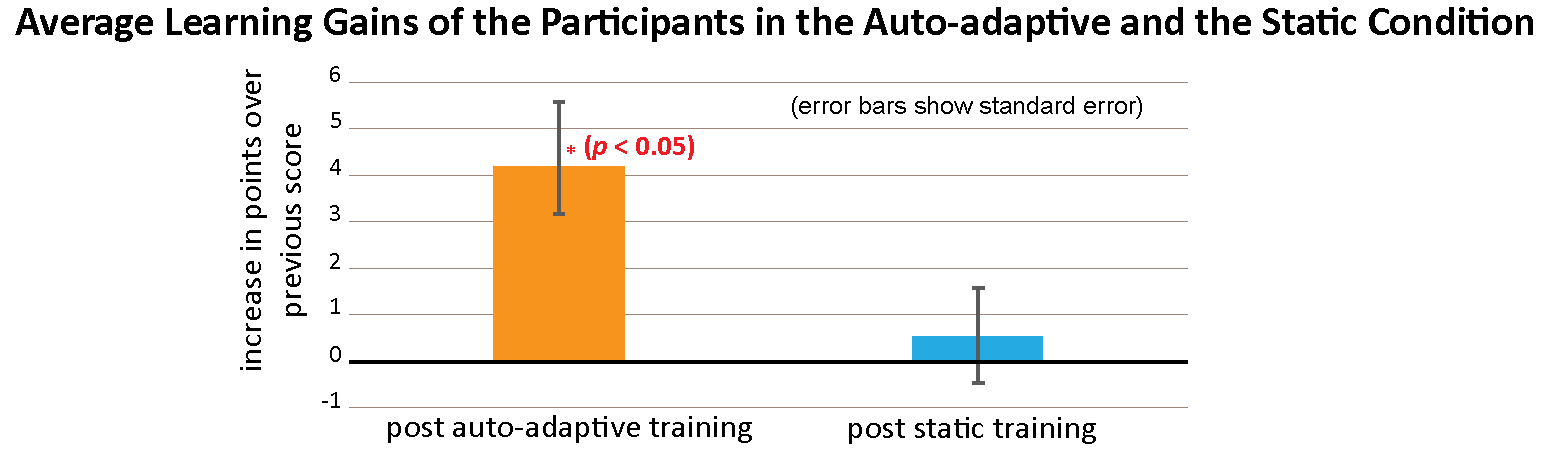

Study Results: Average Learning Gain

We calculated the average learning gains for both conditions and found that training in the auto-adaptive condition led to a 20% increase in the average performance score compared to the static training condition. As seen in Figure 8, participants scored 4 points more on average over their 20 attempted throws in the post auto-adaptive training test. (With a maximum of 1 point per attempt, the maximum possible score in each post-training test was 20 points.) An ANOVA showed that the increase in learning gains in the auto-adaptive condition was statistically significant (F_(1,11) = 1.856, p < 0.05).
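A quick consistency check on these numbers, reading the percentage as relative to the 20-point maximum of a post-training test (this reading is an interpretation; the text does not state the base of the percentage):

$$
\frac{\Delta \bar{s}}{s_{\max}} = \frac{4\ \text{points}}{20\ \text{points}} = 0.20 = 20\%
$$

where $\Delta \bar{s}$ is the reported 4-point average difference and $s_{\max} = 20$ is the maximum test score. The same reading gives $5/20 = 25\%$ for the second study.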

Figure 8. User study 1: Average learning gain after auto-adaptive training and after static training. The average learning gain after auto-adaptive training is significantly higher than after static training (F_(1,11) = 1.856, p < 0.05).

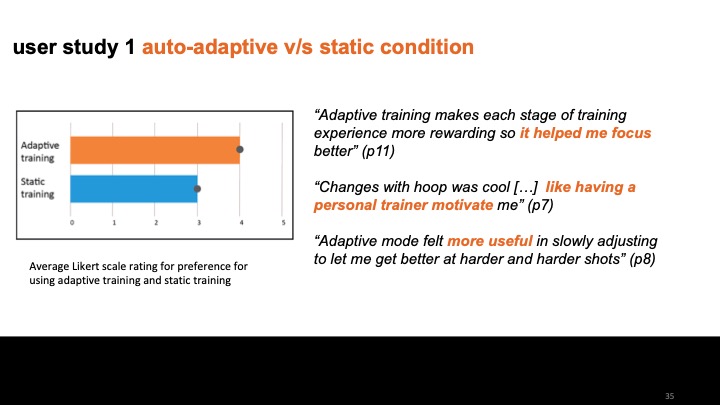

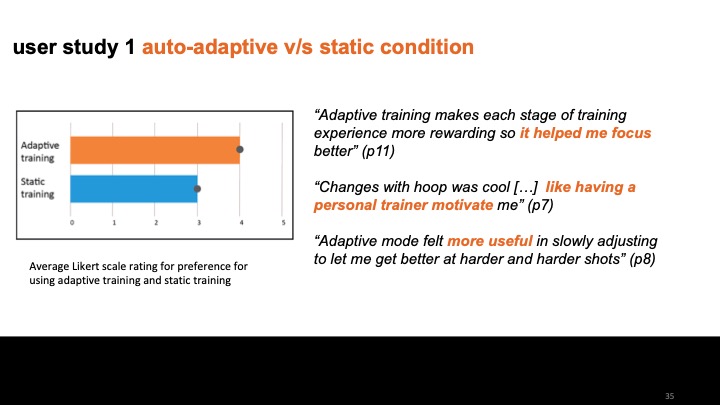

Qualitative Feedback: When participants were asked whether they would rather retrain with the auto-adaptive or the static condition, 8 out of 12 participants preferred the auto-adaptive condition. When asked about the reasons for their preferences, participants stated the following:

Seeing the physical tool adapt: "The adaptive training made me reflect upon what I'm doing wrong by physically changing the height and hoop size and made me want to change my throwing style faster." - (p3), "Adaptive training makes each stage of the training experience more rewarding, so it helped me focus better." - (p11)

Having adaptive difficulty levels: "The adaptive mode felt more useful in slowly adjusting to let me get better at harder and harder shots" - (p8). "it's more natural! [...] it matches learning process better" - (p7). However, one participant also mentioned "I prefer to be challenged beyond my current capability and learn that way." - (p10)

To summarize the qualitative feedback, learners found it motivating to see their progress reflected in the tool's adaptation, without having to constantly assess their own performance.

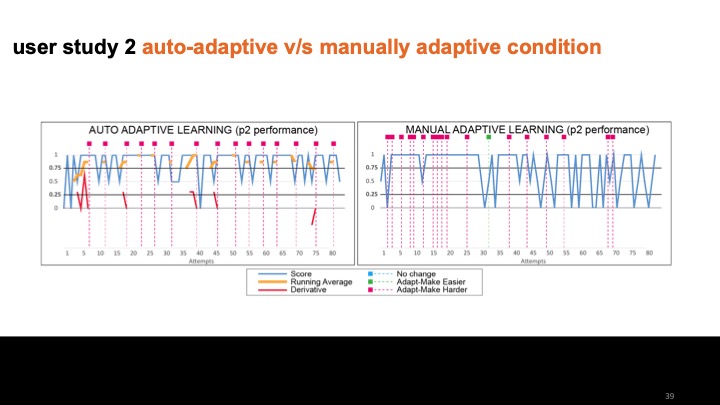

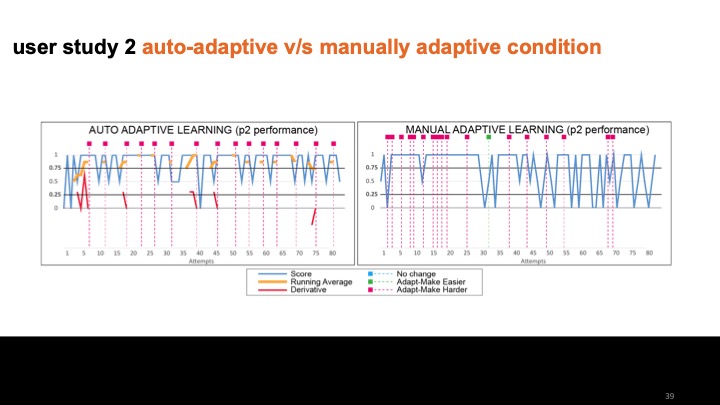

USER STUDY 2: MANUALLY ADAPTIVE V/S AUTO-ADAPTIVE TRAINING TOOLS

Study Design

For our second study, we investigated if automatically adapting the training tools also leads to higher learning gains when compared to manually adaptive training tools, i.e. when the learners have the choice to adjust the difficulty level according to their preference.

Hypothesis: We hypothesized that the training with an automatically-adaptive training tool will result in a higher learning gain when compared to a manually adaptive training tool.

Participants: We recruited 12 participants (8 female) aged 19 to 28 years (mean = 21, std. deviation = 2.62).

Setup: We used the same room setup and basketball prototype as in the prior user study. For the automatic adaptation, we deployed the auto-adaptation algorithm described in Section 4.4 of the paper, with a window size of 4 attempts, on the microcontroller integrated with the basketball stand. In the manually adaptive condition, participants controlled the adaptation, i.e., they could decide which difficulty level to train on and for how long to train at that setting.
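A window of 4 attempts can be maintained as a running (sliding) average, so the performance estimate is updated after every throw rather than once per block. The sketch below uses `collections.deque` with `maxlen` so the oldest score drops out automatically; the per-attempt scoring (1 for a basket, 0 otherwise) and the class name `RunningPerformance` are assumptions for illustration, not details of the study's firmware.

```python
from collections import deque
from typing import Deque, Optional


class RunningPerformance:
    """Sliding-window average of the most recent attempt scores."""

    def __init__(self, window_size: int = 4) -> None:
        if window_size < 1:
            raise ValueError("window_size must be positive")
        self.window_size = window_size
        self.window: Deque[float] = deque(maxlen=window_size)

    def add(self, score: float) -> Optional[float]:
        """Record one attempt; return the average once the window is full."""
        self.window.append(score)  # the oldest score is discarded automatically
        if len(self.window) < self.window_size:
            return None
        return sum(self.window) / self.window_size


perf = RunningPerformance(window_size=4)
averages = [perf.add(s) for s in [1, 0, 1, 1, 1, 1]]
print(averages)  # [None, None, None, 0.75, 0.75, 1.0]
```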

Conditions: As in the previous study, this study followed a within-subjects design, and participants trained on the following two conditions in randomized order to avoid order effects:

- Manually Adaptive training: For the manual adaptation, we added a keyboard interface, which participants used to adjust the basket height (arrow up/down) and hoop diameter (arrow left/right) according to their preference.

- Auto-adaptive training: In the auto-adaptive training condition, our adaptation algorithm automatically adjusted the tool during training, as described under "Setup" above.
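The keyboard interface in the manually adaptive condition amounts to translating arrow keys into bounded adjustments of the two actuators. In this sketch the 5 cm height step, the 2 cm diameter step, the key names, the function name `apply_key`, and the choice that the right arrow widens the hoop are all hypothetical; only the up/down-for-height and left/right-for-diameter assignment and the actuator ranges come from the text.

```python
from typing import Tuple

HEIGHT_RANGE_CM = (180.0, 270.0)
DIAMETER_RANGE_CM = (20.0, 40.0)
HEIGHT_STEP_CM = 5.0      # hypothetical step per key press
DIAMETER_STEP_CM = 2.0    # hypothetical step per key press


def clamp(value: float, bounds: Tuple[float, float]) -> float:
    low, high = bounds
    return max(low, min(high, value))


def apply_key(key: str, height_cm: float, diameter_cm: float) -> Tuple[float, float]:
    """Return the new (height, diameter) target after one arrow-key press."""
    if key == "up":
        height_cm += HEIGHT_STEP_CM
    elif key == "down":
        height_cm -= HEIGHT_STEP_CM
    elif key == "right":
        diameter_cm += DIAMETER_STEP_CM  # assumed: right widens the hoop
    elif key == "left":
        diameter_cm -= DIAMETER_STEP_CM  # assumed: left narrows the hoop
    else:
        raise ValueError(f"unsupported key: {key!r}")
    # Keep targets within the prototype's mechanical limits.
    return clamp(height_cm, HEIGHT_RANGE_CM), clamp(diameter_cm, DIAMETER_RANGE_CM)


state = (180.0, 40.0)  # easiest setting
for key in ["up", "up", "right", "left"]:
    state = apply_key(key, *state)
print(state)  # (190.0, 38.0)
```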

Study Procedure

The study procedure was the same as in the first study (refer to section 5.1 of the paper), i.e. we started with a pre-study calibration of the distance to the basket, followed by 220 throws (pre-training skill assessment: 20 throws, training condition 1: 80 throws, post-training 1 test: 20 throws, training condition 2: 80 throws, and post-training 2 test: 20 throws).

Measuring Learning Gain: We measured learning gain in the same way as in the first study.

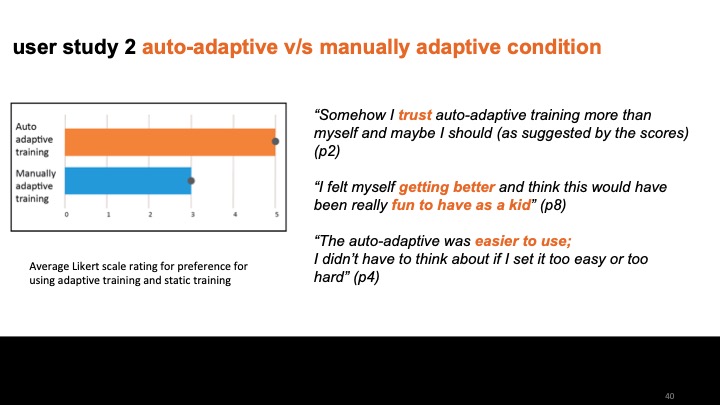

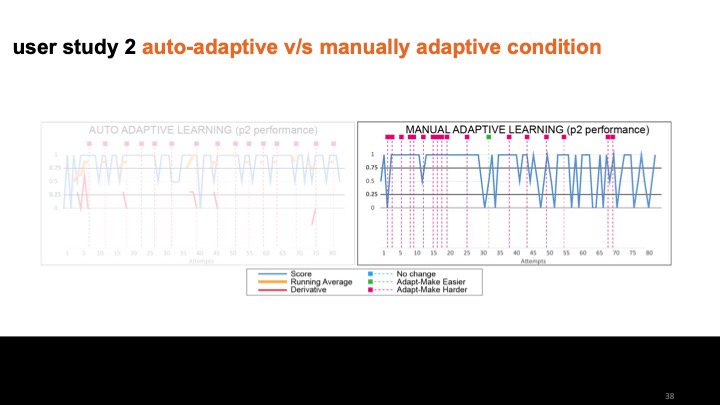

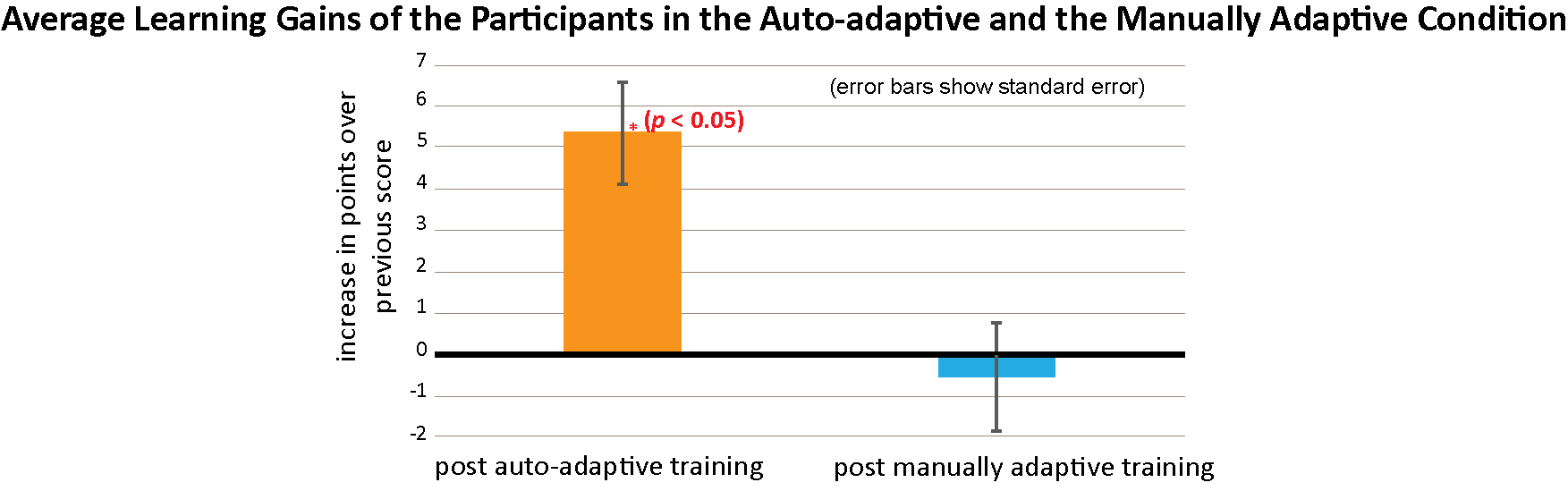

Study Results: Average Learning Gain

We calculated the average learning gains for both conditions and found that training in the auto-adaptive condition led to a 25% increase in the average performance score compared to the manually adaptive condition. As can be seen in Figure 9, participants scored 5 points more on average over their 20 attempted throws in the post auto-adaptive training test (1 point per basket scored). An ANOVA showed that the increase in learning gains in the auto-adaptive condition was statistically significant (F_(1,11) = 2.386, p < 0.05).

Figure 9. User study 2: Average learning gain after auto-adaptive and after manually adaptive training. The average learning gain after auto-adaptive training is significantly higher than after manually adaptive training (F_(1,11) = 2.386, p < 0.05).

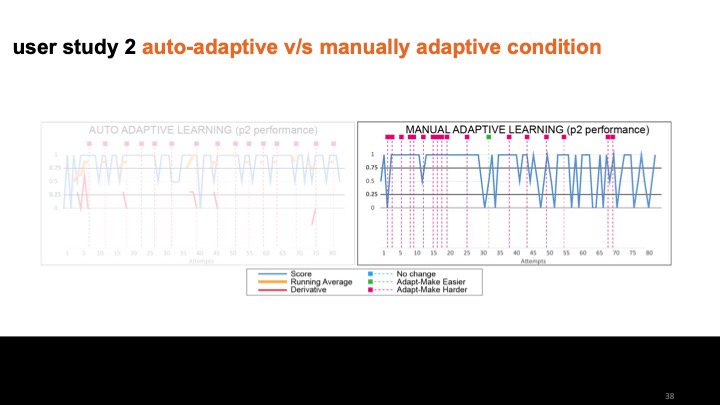

Maintaining Functional Task Difficulty Around the Optimal Challenge Point: The results of the manually adaptive condition showed that participants, when left to configure the hoop height and width themselves, failed to identify the difficulty level that offers the largest learning gain. See the paper for a more detailed analysis of participants' performance.

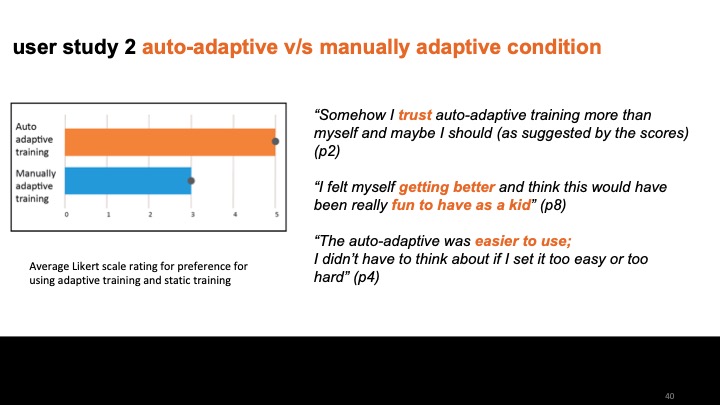

Qualitative Feedback: When participants were asked whether they would rather retrain with the automatically adaptive or the manually adaptive condition, 10 out of 12 participants preferred the automatically adaptive setup. When asked to highlight the benefits and drawbacks of each condition, participants responded as follows:

Self-evaluation: "There were times during the manually adaptive process where I was unsure what the optimal path to training would be." - (p5), "Somehow I trust auto-adaptive training more than myself and maybe I should (as suggested by scores after each type of training received)." - (p2), "Auto-adaptive is nicer than manually adaptive when the difficulty is increased because it is difficult for me to gauge how much I should increase the difficulty." - (p7).

Learning of skill, ease and efficiency in training: "The auto-adaptive was easier to use; I didn't have to think about if I set it too easy or too hard." - (p3). "Manually adaptive training took longer/hard to judge which increments to practice/alter. It seemed the auto-adaptive training was more efficient." - (p10)

Motivation: "[auto-adaptive:] I felt myself getting better and think this would have been really fun to have as a kid." - (p8), "I feel that I am doing better every time it adapts to a harder setting." - (p12), "I have freedom to grow and make mistakes. No one is watching me (outside of lab setting) so it is more comfortable [than having a personal trainer]." - (p1).

During the study, we also observed that participants who were assigned to the automatically adaptive condition first used a similar strategy as our algorithm for their manually adaptive condition. In contrast, participants who trained on the manually adaptive condition first were fixated on the least difficult or the most difficult setup.

In summary, our results confirm that maintaining functional task difficulty around the optimal challenge point is beneficial for learning motor skills. Participant feedback also showed that training in the automatically adaptive mode provides additional motivation and is more engaging and enjoyable.

DISCUSSION

We showed that automatically adapting physical tools that vary task difficulty are effective for motor skill learning because they keep training at the optimal challenge point for the learner. We now discuss the benefits and limitations of our work.

Scaling Personalized Training of Motor Skills: One of the main motivations for building automatically adaptive tools for motor skill learning is that they allow personalized motor skill training to scale up and reach a larger audience that does not have access to personal trainers. While we did not compare our automatically adaptive tool to learning with a personal trainer, we did provide evidence that our approach leads to significantly higher learning gains than conventional training tools, such as static and manually adaptive tools, that are currently used by learners without access to a personal trainer.

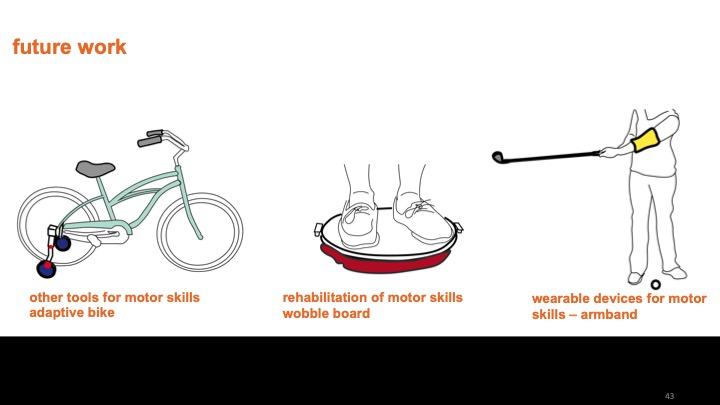

Extending to Other Motor Skills and Tools: While in this paper we were only able to study one particular adaptive tool, our work can be replicated to study additional tools in the future. For instance, the adaptation algorithm generalizes to different types of tools as long as they have sensors to measure a user's performance and actuators to adapt the tool's difficulty level. Similarly, while the hardware of our tool, i.e., the particular sensors and actuators, is necessarily specific, we outlined the general requirements that study prototypes need to fulfill to work across all three conditions. We hope that our work can lay the foundation for studying a variety of adaptive training tools, which will allow researchers to gather more evidence on the effectiveness of adaptive training tools for motor skill training.

Longitudinal Studies with Diverse Participants: While the insights from our study are promising, more longitudinal studies with more varied populations are needed before we can draw conclusions on long-term learning gains. Our study focused on only one adaptive training tool and more studies are needed to confirm the results across a broader set of different tools in different application domains. Furthermore, additional studies need to be conducted to study specific aspects of the skills, such as variation in throwing angle and throwing distance.

Improvements for the Auto-Adaptation Algorithm: In our work, we discussed the discrete vs. running average approach and an additional derivative check to calculate the user's performance over time which we then used to determine when to adjust the difficulty level for training. However, several other approaches can be used to determine when to adapt the tool. For instance, by adding a hysteresis value to the algorithm, we can further enhance our algorithm to only adapt the tool when the performance is at a certain value for a long period of time. Such a hysteresis value can prevent unwanted frequent switching between states when performance fluctuates around the optimal challenge point. In addition, our algorithm currently uses a fixed increase for the adaptation. For future work, we plan to determine not only when to adapt the tool but also how much to adapt it based on the user's performance.
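The hysteresis idea can be sketched as a controller that changes the level only after the windowed performance has stayed outside a dead band for several consecutive evaluations. This is one possible reading of the proposal above, not part of the study: the thresholds (0.75 and 0.25), the `patience` of 3 evaluations, the one-level step, and the class name `HysteresisController` are illustrative assumptions.

```python
class HysteresisController:
    """Change difficulty only after performance stays out of band for a while.

    The dead band between down_below and up_above, plus the patience count,
    keeps the level from flipping when performance hovers near the optimal
    challenge point.
    """

    def __init__(self, num_levels: int, up_above: float = 0.75,
                 down_below: float = 0.25, patience: int = 3) -> None:
        if not down_below < up_above:
            raise ValueError("down_below must be smaller than up_above")
        self.num_levels = num_levels
        self.up_above = up_above
        self.down_below = down_below
        self.patience = patience
        self.level = 0
        self._high_streak = 0
        self._low_streak = 0

    def update(self, average: float) -> int:
        """Feed one windowed performance average; return the current level."""
        if average >= self.up_above:
            self._high_streak += 1
            self._low_streak = 0
        elif average <= self.down_below:
            self._low_streak += 1
            self._high_streak = 0
        else:
            # Inside the dead band: performance is near the optimal point.
            self._high_streak = self._low_streak = 0

        if self._high_streak >= self.patience and self.level < self.num_levels - 1:
            self.level += 1
            self._high_streak = 0
        elif self._low_streak >= self.patience and self.level > 0:
            self.level -= 1
            self._low_streak = 0
        return self.level


ctrl = HysteresisController(num_levels=5)
levels = [ctrl.update(a) for a in [1.0, 0.5, 1.0, 1.0, 1.0, 0.0]]
print(levels)  # [0, 0, 0, 0, 1, 1]
```

A single mid-range window (0.5) resets the streak, so the level rises only after three consecutive high windows, and a single low window afterwards does not immediately undo the increase.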

CONCLUSION

We showed that automatically adaptive tools that vary task difficulty based on a learner's performance can indeed help in motor skill training. Using our study prototype and an adaptation approach based on the optimal challenge point, we demonstrated that training in an auto-adaptive condition leads to higher learning gains than training in a static or manually adaptive condition. We also showed that training in the auto-adaptive condition is more enjoyable for learners, since it removes the decision of which difficulty level to train on and gives learners feedback through the tool's shape adaptation.

For future research, we plan to build a toolkit that helps designers build their own adaptive training tools.

ACKNOWLEDGMENTS

This work is supported by the MIT Learning Initiative and the National Science Foundation (Grant No. 1844406).